Techradar

Microsoft has all but given up on Windows 11 SE – and it looks like the war against Chromebooks has been lost

- Microsoft has said Windows 11 SE will run out of support in October 2026

- This brings an end to this alternative spin on Windows 11

- However, Surface Laptop SE owners were previously promised a longer timeframe of support for their devices

Microsoft has announced that it's dropping support for Windows 11 SE in just over a year's time, leaving buyers of low-cost laptops running this spin on its OS in the lurch - and admitting defeat with this most recent initiative to take on Google's Chromebooks.

If you're unfamiliar with Windows 11 SE, it was designed as a (kind of) lightweight version of the desktop operating system. It was preinstalled on affordable laptops that were priced to do well in the education sector, trying to take a piece of the pie that Chromebooks dominate (SE seemingly stood for Student or School Edition).

Windows Central reports that Microsoft announced via its Learn portal that Windows 11 SE support is being shuttered in October 2026.

The company said: "Microsoft will not release a feature update after Windows 11 SE, version 24H2. Support for Windows 11 SE - including software updates, technical assistance, and security fixes - will end in October 2026. While your device will continue to work, we recommend transitioning to a device that supports another edition of Windows 11 to ensure continued support and security."

So, you won't be provided with Windows 11 25H2 later this year on your SE device, if you own one - version 24H2 is as far down the line as you'll get, and all updates will cease full-stop in just over a year.

Microsoft has been trying to take on Google's Chromebooks for a long time now, including efforts such as Windows 10X - which badly misfired and ended up being canned before it even arrived. Windows 11 SE was the most recent effort, emerging late in 2021, and it was showcased by Microsoft in its Surface Laptop SE. However, as we observed in our review of that machine, there was a problem here - the performance level of the notebook was rather poor.

The simple truth about Windows 11 SE is that while it was supposed to be a streamlined operating system for low-cost devices, this variant of the desktop OS was still too unwieldy. There just wasn't enough emphasis on trimming down Windows 11 so it performed better.

Indeed, much of the thrust of Windows 11 SE was about simplifying the computing experience for students - the interface, and locking the system to only admin-approved apps, plus cloud services - rather than actually streamlining the operating system so it ran well on lesser hardware. And let's be honest, the latter was the whole point, really, at least in terms of making affordable laptops to rival cheap Chromebooks (which run very slickly indeed, despite their low cost).

So, all in all, it's not surprising to see Microsoft shutter this effort in this manner. What is surprising, though, is how owners of Windows 11 SE machines, like the Surface Laptop SE, have now been left in the lurch by this announcement that support is being killed in a year.

As Neowin, which also picked up on this move, points out, the Surface Laptop SE has an end-of-service date (for firmware and drivers) of January 11, 2028. But with Microsoft now having revealed that Windows 11 SE won't be going on beyond October 2026, that's cutting this support window (pun not intended) short by over a year.
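To put a rough number on that shortfall, here is a minimal sketch of the date arithmetic. Microsoft's statement only gives "October 2026", so the exact end date used below is an assumption (the last day of the month, which is the most generous reading). The January 11, 2028 date is the one quoted above. The constant names are purely illustrative.

```python
from datetime import date

# Assumption: the announcement only says "October 2026"; the last day of the
# month is used here as the most generous possible reading.
SE_SUPPORT_END = date(2026, 10, 31)

# From the article: Surface Laptop SE end-of-service date for firmware and drivers.
SURFACE_SE_END_OF_SERVICE = date(2028, 1, 11)

# Subtracting two dates yields a timedelta; .days is the size of the gap.
gap = SURFACE_SE_END_OF_SERVICE - SE_SUPPORT_END

# 30.44 is the average month length in days, used only for a rough conversion.
print(f"Gap: {gap.days} days (about {gap.days / 30.44:.1f} months)")
```

Even on that generous assumption, the gap comes out at more than a year, and an earlier cut-off date in October would only widen it.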

Those who own a Surface Laptop SE, who thought they were good for another couple of years, have effectively now been told they're going to be a year shorter on support. If Microsoft promised support for this showcase laptop through to 2028, then why not extend support for the dedicated OS it runs to that date, too? Strong-arming folks into moving early hardly seems fair here.

It seems an odd decision to make, and one that won't endear Microsoft to some people. Indeed, when it comes to Microsoft's next big shot at taking on Chromebooks - if there is one - those in the education sector might remember what's happened here, and be less trusting of new ideas from the software giant.

For those who do have a laptop running Windows 11 SE, and now need to plan on switching away sooner from that OS, maybe to a different flavor of Windows 11, this is possible, albeit somewhat problematic in some reported cases. Going by this Reddit thread, if you're running into trouble in this endeavor, you may want to try turning off Secure Boot in the laptop's BIOS to get a working installation of Windows 11 Home or Pro on an SE machine (you can switch the feature back on afterwards, apparently).

You might also like...

- Fed up with your mouse cursor supersizing itself randomly in Windows 11? Thankfully this frustrating bug should now be fixed

- Microsoft promises to crack one of the biggest problems with Windows 11: slow performance

- No, Windows 11 PCs aren't 'up to 2.3x faster' than Windows 10 devices, as Microsoft suggests – here's why that's an outlandish claim

Meta's mixed reality glasses make my Meta Quest 3 look like a boulder

- Meta and Stanford University researchers have developed new MR glasses

- These specs use holography to produce high-quality images

- This supports rumors that Meta's headsets may turn into slim goggles

Meta’s newly published research gives us a glimpse at its future XR plans, and seemingly confirms it wants to make ultra-slim XR goggles.

That’s because Meta’s Reality Labs, alongside Stanford University, published a paper in Nature Photonics showcasing a prototype that uses holography and AI to create a super-slim mixed reality headset design.

The optical stack is just 3mm thick, and unlike other mixed reality headsets we’re used to – like the Meta Quest 3 – this design doesn’t layer stereoscopic images to create a sense of depth. Instead, it produces holograms that should look more realistic and be more natural to view.

That means it’s not only thin, but high-quality too – an important combination.

Now there’s still more work to be done. The prototype shown in the image above doesn’t look close to being a consumer-grade product that’s ready to hit store shelves.

What’s more, it doesn’t yet seem to pass what’s called the Visual Turing Test. This would be the point at which it's impossible to distinguish between a hologram and a real-world object, though that goal looks to be what Reality Labs and Stanford hope to eventually achieve.

Even with this technology still likely years (perhaps even a decade) from making it to a gadget you or I could go out and buy, the prototype’s design does showcase Meta’s desire to produce ultra-thin MR tech.

It lends credence to rumors that Meta’s next VR headset could be a pair of lightweight goggles about a fifth as heavy as the 515g Meta Quest 3.

Given these rumored goggles are believed to be coming in the next few years, they’ll likely avoid the experimental holography tech found in Meta and Stanford’s report, but if Meta were looking to trim weight and slim down the design further in future iterations, the research it’s conducting now would be a vital first step.

I, for one, am increasingly excited to see what XR tech Meta is cooking up.

Its Ray-Ban, and now Oakley, glasses have showcased the wild popularity that XR wearables can achieve if they find the sweet spot of comfort, utility, and price, with that first factor looking to be the most vital.

Meta’s other recent research into VR on the software side also highlights that a lighter headset would remove friction in keeping people immersed for hours on end.

This could lead to more meaningful productivity applications, but also more immersive and expansive gaming experiences, and other use cases I’m excited to see and try when the time is right.

For now, I'm content with my Meta Quest 3, but I can't deny it now looks a little like a boulder next to this 3mm-thick prototype design.

Microsoft's trying to force OneDrive on us yet again - this time for moving from a Windows 10 PC to a Windows 11 one

- Microsoft has put together a new promotional video

- It sells the benefits of transferring to a new PC via the Backup app

- However, it's also pushing a OneDrive subscription - but there are free alternatives

Microsoft has published a video clip explaining how easy it is to move to Windows 11 using the Backup app to transfer the contents of your Windows 10 PC (or most of them, anyway).

Windows Latest spotted the new promotional video from Microsoft, which shows how easy it is to make the leap to a Windows 11 PC (see the clip below).

As the video makes clear, you can back up your personal files, Windows settings, and also some apps from your Windows 10 PC, and transfer them directly to a Windows 11 computer with a minimum of fuss (or that's certainly the idea).

To be fair to Microsoft, it also points out the major catches with using the Windows Backup app to switch over to a Windows 11 PC.

Namely, you can't take third-party apps with you, as they need manual reinstallation. Only Microsoft Store apps can be ported across (their pins will be where you left them, and you can click on the relevant pin to restore the application). You're also limited to 5GB of files by default.

The 5GB restriction is in place because the backup that the Windows app creates is stored on OneDrive - so you need an account with Microsoft's cloud storage locker. The basic free account only has 5GB of cloud storage, and if you want more space than that, you'll have to pay for a OneDrive subscription.

So, the long and short of it is, if your data and settings amount to more than 5GB - which it surely will in most cases - then you'll need a paid plan on OneDrive to ensure Windows Backup transfers all your stuff to a new Windows 11 PC.
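If you want a rough idea of whether a given folder would squeeze into that free allowance, a short script can total it up for you. The sketch below is only an estimate under stated assumptions: the function names are made up for illustration, 5GB is treated as 5 × 1024³ bytes, and Windows Backup's own accounting of what counts towards the OneDrive quota may well differ.

```python
import os

# Assumption: the 5GB free allowance is interpreted as 5 * 1024**3 bytes.
FREE_TIER_BYTES = 5 * 1024**3


def folder_size_bytes(root):
    """Sum the sizes of all regular files under root, skipping unreadable ones."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                # Skip symbolic links so the same data is not counted twice.
                if not os.path.islink(path):
                    total += os.path.getsize(path)
            except OSError:
                # The file vanished or is not readable; leave it out of the total.
                pass
    return total


def fits_free_tier(size_bytes, limit=FREE_TIER_BYTES):
    """Return True if size_bytes is within the assumed free allowance."""
    return size_bytes <= limit
```

For example, `fits_free_tier(folder_size_bytes(os.path.expanduser("~/Documents")))` would tell you whether a Documents folder alone stays under the assumed limit. Running the same check on your photo and video folders gives a quick sense of which ones to leave out of the backup.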

In other words, this is Microsoft not-so-subtly pushing a OneDrive subscription (not for the first time, I might add). If you want to use Microsoft's built-in Windows Backup facility, there's no alternative to OneDrive. If you paid for cloud storage with another provider, no choice is offered to use that cloud locker instead.

There are alternatives to paying for a OneDrive subscription to ensure you have enough capacity to fully transfer all your files across to a new PC. You can simply be very selective about what you choose for Microsoft's Backup app to port over, and perhaps leave out the hefty chunk of media files (photos, videos) you may have on your computer.

To move those media files, you could simply copy them to an external drive, and then subsequently manually move them onto the new PC - okay, that's a bit of extra hassle, but it's not really a big deal. (If you do go this route, don't delete the files from the original PC until they're safely transferred - always be sure to keep multiple copies. Never rely on a single copy of any data, as in this case, if the external drive goes kaput, you've lost everything).

Another eventual option will be the PC-to-PC migration feature in the Windows Backup app, which transfers your files over from one computer to another via the local network (with no need for OneDrive). However, you will need a Microsoft account still, and in this case, no apps will be ported across at all (not even those from the Microsoft Store).

Even so, this will be a useful alternative in the future, but the feature isn't live yet. Indeed, it hasn't appeared on Windows 10 at all - only the non-functional shell of the PC-to-PC migration feature is available on Windows 11 currently - but I can only presume that Microsoft is working to get this up and running before October 2025, when Windows 10 support runs dry.

Notably, though, Microsoft doesn't mention PC-to-PC migration in the above video clip. Fair enough, as noted, it isn't working yet, and so the company does have an excuse - but I'm betting there won't be an all-singing-and-dancing promotional video campaign for this feature when it debuts. Unlike pushing OneDrive, this isn't going to give Microsoft any immediate (potential) financial benefit.

Apple Mac users should install this new update right now, as macOS Sequoia bows out with a crucial bug fix

- Apple has released an important new update for macOS Sequoia

- The update prevents a potential bricking issue for users utilizing DFU mode for device recovery

- It may be the last macOS Sequoia update before macOS Tahoe 26's arrival

Apple's macOS Tahoe 26 is now on the horizon, slated for release later this year. However, Apple isn't quite done with macOS Sequoia, and it's just released an important update for Mac users.

As reported by 9to5Mac, Apple has released macOS Sequoia 15.6, a new update with an important bug fix. This resolves an issue with Finder and Apple Configurator's inability to restore devices from DFU (Device Firmware Upgrade) mode, which could result in a bricked device if unsuccessful.

Users would need to specifically enter DFU mode (which serves as an alternative to recovery mode for device restoration) for this to occur. While users who aren't planning on using DFU may still be safe on Sequoia 15.5 (and older), it's better to eliminate the chance of bricking a device entirely by updating to Sequoia 15.6.

This could be one of the last updates of Sequoia we see before it eventually bows out to Tahoe 26, which promises a variety of improvements to the macOS experience – it's already available in public beta, and it looks set to be ideal for multitaskers and gamers.

With new tools like MetalFX Frame Interpolation, a Game Overlay, and an upgraded Game Porting Toolkit, macOS Tahoe 26 is another step in the right direction for Apple and its gaming support.

Having only used Intel-powered MacBooks, I've been debating a potential switch from Windows laptops to M-series MacBooks for a while now – and the arrival of macOS Tahoe 26 looks like the perfect time for it.

I'm a big gamer (if that wasn't clear enough already), and I'm not opposed to spending a hefty sum when a laptop can provide exceptional performance in productivity and multitasking, alongside gaming. Yes, gaming laptops exist, but MacBooks using the latest M-series chips look like the ideal answer due to their power efficiency.

I'm growing tired of Windows for gaming, and I'd rather use SteamOS for its console-like UI and better game performance – but using Discord for streaming to friends in SteamOS' game mode isn't a simple task. Until that's addressed, I'll stick to SteamOS/Bazzite for handhelds exclusively.

As for gaming on a laptop, macOS is becoming a more appealing operating system after each update, especially with a growing game library with titles like Cyberpunk 2077 and Resident Evil 4 remake on Macs. Apple is continuously proving that gaming is on its radar, and it might just convince me to join the party.

Some Windows 10 PCs are reportedly being offered a Windows 11 upgrade even though they don't support the OS – here's what to do if this happens to you

- There are scattered reports of Windows 10 PCs being offered a Windows 11 upgrade

- That's despite the fact that these devices don't meet the Windows 11 hardware requirements

- This has happened in the past, too, and it looks like it's a recurring bug – and not an offer you want to take up

There are scattered reports of Windows 10 PCs being offered an upgrade to Windows 11 even though they don't meet the requirements of the newer operating system.

German tech blog Born City brings us this news (via Neowin), with the author of the post describing an incident with their Dell Latitude 7490.

That laptop is running Windows 10 22H2, and keeps being offered an upgrade to Windows 11 every few months, even though it isn't compatible with the latter OS, and the author says they're having to repeatedly dismiss the upgrade prompt.

On top of this, a reader contacted Born City, explaining that their Lenovo IdeaPad, also using version 22H2 of Windows 10, was offered a Windows 11 upgrade despite having TPM 2.0 switched off in the BIOS (this is a hard-and-fast requirement for running Windows 11).

This annoyed the reader, seeing as they had specifically turned off this TPM functionality to avoid getting prompted about an upgrade.

Furthermore, the report points out previous incidents earlier this year, where an IT admin at a company contacted Born City complaining that multiple PCs had been automatically upgraded to Windows 11 24H2 (from Windows 10 22H2) without their knowledge, bypassing all the usual update procedures in place for these business machines.

The author of the article asks if any other readers have encountered these offers of Windows 11 upgrades that have been piped through to PCs that shouldn't be getting them. Notably, there are no responses saying that other people have, and scouring the usual online forums, I can't find other recent reports of this kind of behavior (on the likes of Reddit, for example).

So, my conclusion at this point is that these are very much scattered incidents, but what's interesting is that they aren't happening for the first time.

In my digging around on Reddit, I found reports from early in 2023, reminding me of a very similar incident whereby Windows 10 devices were offered an upgrade to Windows 11.

Back at the time, Microsoft told us: "These ineligible devices did not meet the minimum requirements to run Window 11. Devices that experienced this issue were not able to complete the upgrade installation process." This was, in fact, a bug that was fixed on the same day.

There are also historic reports of Windows 10 users receiving the Windows 11 upgrade despite having switched off TPM 2.0 (in order to avoid the newer OS, as was the case with the Born City reader mentioned above).

What does all this mean? In my book – and this is just my opinion – this looks to be a recurring bug with Windows 10 (as was previously the case, and seemingly one with a very limited impact this time).

And it's not like there's any shortage of glitches that keep making a comeback with Microsoft's desktop OS – just look at the persisting installation failures with Windows updates across the years.

There are theories that this could be Microsoft somehow forcing Windows 11 upgrades to boost adoption numbers for the newer operating system. Those numbers have spiked recently, which is only to be expected with Windows 10 End of Life now drawing ever closer.

Granted, these theorists could have a point in terms of updates being forced with PCs that have actively tried to avoid them – as in deliberately turning off TPM 2.0, when it's supported on the device – but I remain skeptical even then.

Neowin points out that a recent stealth update for both Windows 10 and 11, which can force-upgrade PCs to a newer version, may have something to do with all this, and that again is a possibility.

Still, I feel this is buggy behavior, even if that's true – Microsoft would surely never intentionally push an upgrade to unsupported hardware. And if the rules have changed regarding Windows 11 compatibility somehow, it would be very remiss of Microsoft not to point this out.

So, the question you may have at this point is: what should you do if, by chance, you're offered a Windows 11 upgrade when your Windows 10 PC doesn't meet the system requirements of the newer platform?

The simple answer here is: don't take that upgrade. For starters, the update may fail (especially given that it's possibly being offered in error), as was the case with previous instances of this happening. And even if it was to succeed, there's no telling if things might go awry with your Windows 11 installation in the future.

Just like a fudged upgrade to avoid the requirement of having TPM 2.0 – which can be done – the recommendation remains not to take this route.

If you're worried about the impending death of Windows 10, remember that even though support is going to be officially ending in October 2025, you can now sign up for free updates for another year (all you need to do is sync PC settings to OneDrive, which isn't a big deal I don't think – though your opinion might vary).

That'll give you plenty of breathing space – until October 2026 – to work out what you're going to do, but I really don't think trying to run Windows 11 on an officially unsupported PC is a good idea. Not, at least, until Microsoft clarifies that Windows 11's system requirements have somehow been changed, if that's indeed true (and as mentioned, I very much doubt it), or if that happens in the future – and I don't see that in my crystal ball, either.

Meta revealed what makes a VR game perfect, and it could be hinting at big hardware changes

- Meta revealed the ideal VR gaming session is 20 to 40 minutes

- Less than that and VR doesn't feel worthwhile

- Longer and hardware issues can have a negative impact

Meta has released new research it has conducted into the perfect length of VR games, and based on my experience testing its Meta Quest 3, Meta Quest 3S, and its older headsets, the results of the study ring true.

This advice might not just mean we see alterations to the kinds of apps we get in VR, but also tweaks to Meta’s hardware itself. Its published findings point to design issues that many have with existing hardware, problems that leaks suggest have been resolved in Meta’s next headset.

More on that below, but first let’s begin with Meta’s research, and why 20-40 minutes is apparently the ideal length for a VR game session.

As Meta succinctly explains in a short graphic (above), the “Goldilocks session length” is about 20-40 minutes based on its research.

If a VR session is shorter than 20 minutes, we can be left feeling unsatisfied. While many mobile games can get away with a shorter 5 to 10 minute loop (or even less), VR requires more effort to enter (clearing space, donning the headset, etc), so it necessitates a more worthwhile experience.

VR can still offer those shorter loops – such as Beat Saber delivering levels which are just one song long – but they need to be chained together in a meaningful way. For example, you can play several Beat Saber missions as part of a workout, or as a warm-up to your VR gaming sesh. For multiplayer games, if a match is typically 10 minutes long, a satisfying experience might be that your daily quests are something you usually accomplish in two games.

After 40 minutes, the experience starts to have diminishing returns as people begin to feel friction from physical constraints – such as their fitness levels for a more active game, social isolation in single-player mode, limited battery life, or (for newcomers) motion sickness.

That’s why Meta says it has found sessions of this length are just right (i.e. in the Goldilocks zone) for most VR gamers.

Now, if you’re a VR app developer, this will be directly useful for your software, but for non-developers, there are still some things we can take away from Meta’s findings.

For a start, it provides some additional proof for the advice I always give VR newcomers: just start with a headset and get accessories later.

Now, if they come free in a bundle that’s one thing, but if you’re looking to spend a significant sum on a headstrap with a built-in battery on day one, you likely want to think again.

Yes, there are plenty of people who do push through that 40-minute barrier and love it, and so having a larger battery is useful – I always think back to my time playing Batman: Arkham Shadow for as long as my battery would allow and being so frustrated at waiting for it to recharge – but there are many folks for whom just 20 to 40 minutes is perfect.

As I always say, try your headset for a few weeks and see if you need a bigger battery or would benefit from any other accessories before buying them. With fast delivery, you won’t be waiting long before you get them anyway if you do decide they’re for you.

This research could also point to Meta’s next VR headset design as it works to remove some of VR’s hardware barriers.

There are several rumors that its next headset, codenamed Puffin, and now Phoenix in leaks, will be ultra-slim goggles. Its rival, Pico, is said to be designing something similar (you can see the Pico 4 Ultra above).

The bulk of the processing power and the battery would be shifted to a puck, kinda like Apple’s Vision Pro, but with even more crammed into the pocket-sized pack, so that the weight on a person’s head is only a little over 100g.

Considering a Meta Quest 3 weighs 515g, this would be a serious change, and could transform the Horizon OS headset into something people can (and want to) wear for hours on end rather than less than an hour.

What's more, with the battery in a person's pocket, Meta could make it even larger than before without affecting comfort. Though, as with all speculation, we'll have to wait and see what Meta announces next. Perhaps it won't be a headset at all, but a smartwatch instead.

Microsoft makes a nifty tweak to the Windows 11 taskbar – but it's probably not the change you were hoping for

- Windows 11 has a useful change for multiple monitors in a new preview

- You'll be able to access notifications and the calendar flyout on the secondary monitor

- Previously that wasn't possible, even though it is in Windows 10

If you use multiple monitors with Windows 11, there's a change in the pipeline with the taskbar that you're really going to appreciate.

Windows Central noticed that Microsoft has brought in the ability to access the notification center and calendar flyout in the taskbar on a secondary display. This has happened with the latest preview release of Windows 11 in the Dev channel (build 26200.5722).

It's currently the case that if you're running two monitors with Windows 11 you can only access these details on the main display. With the secondary display, the active elements of the system tray – on the far right-hand side of the taskbar – don't work, meaning all you can do is look at the time and date.

If you want to access the calendar panel (by clicking on the date), you need to mouse across to the primary monitor to do so (and the same is true for checking on notifications).

However, with the new preview build, it's possible to click on those parts of the taskbar and access the mentioned panels on the secondary monitor.

This is another tweak for Windows 11 which sounds like a relatively small move, but it'll actually be a major convenience for those whose PC setup includes two monitors (or perhaps more). That might be a niche set of people, granted, but it'll be quite a boon for them – the move has already been welcomed with open arms by some (Windows Central included).

Indeed, you might be wondering why this wasn't possible in the first place – especially because in Windows 10 you've always been able to access these parts of the taskbar on a secondary monitor.

Well, that's a good question, and it's not the only piece of functionality that fell by the wayside when Windows 11 arrived. There were quite a few key pieces of the interface and options therein that were mysteriously dropped from Windows 10 in the shift to Windows 11.

They included the ability to move the taskbar away from the bottom of the screen to pick an obvious example (or to 'never combine' apps on the taskbar, though that functionality has since been added back).

The reason these decisions were made was apparently down to some of the complexity involved in the changes under the hood with Windows 11 – or at least those were the vague noises Microsoft made some time ago now, by way of a rather unsatisfying explanation.

At any rate, Microsoft acknowledged in the blog post for the new preview build that this change is being made to "address your feedback", so clearly there have been a fair few complaints about the missing functionality in question.

Note though that this change is only rolling out in testing for now, so not all Windows Insiders will see it (though it is possible to force an enablement, as leaker PhantomOfEarth explains on X).

It'll probably be a while before this arrives in the finished version of Windows 11, and the feature seems a likely pick for inclusion in the big Windows 11 25H2 update arriving later this year.

Fed up with your mouse cursor supersizing itself randomly in Windows 11? Thankfully this frustrating bug should now be fixed

- Windows 11 24H2 had a strange bug that messed with the mouse

- It made the mouse cursor larger after the PC woke from sleep (or was rebooted)

- Microsoft has seemingly fixed this problem with the July update

Microsoft has reportedly fixed a bug in Windows 11 which caused the mouse cursor to supersize itself in irritating fashion under certain circumstances.

Windows Latest explained the nature of the bug, and provided a video illustrating the odd behavior. It shows the mouse cursor being at its default size (which is '1' in the slider in settings for the mouse), and yet clearly the cursor is far larger than it should be.

When Windows Latest manipulates the slider to make the mouse cursor larger, then returns it to a size of '1', the cursor ends up being corrected and back to normal. Apparently, this issue manifests after resuming from sleep on a Windows 11 PC.

Windows Latest says this bug has been kicking around since Windows 11 24H2 first arrived (in October last year), but the issue hasn't been a constant thorn in its side. Seemingly it has only happened now and again – but nonetheless, it's been a continued annoyance.

Not anymore, though, because apparently with the July update for Windows 11, the problem has been fixed.

Oddly enough, Microsoft never acknowledged this issue, although other Windows 11 users certainly have – Windows Latest hasn't been alone in suffering at the hands of this bug.

I've spotted a few reports on Reddit regarding the issue, and some posters have experienced the supersized cursor after rebooting their machine rather than coming back from sleep mode (and there are similar complaints on Microsoft's own help forums).

Whatever the case, the issue seems to be fairly random in terms of when or whether it occurs, but the commonality is some kind of change of state for the PC in terms of sleeping or restarting.

While the mouse cursor changing size may not sound like that big a deal, it's actually pretty disruptive. As Windows Latest observes, having a supersized cursor can make it fiddlier and more difficult to select smaller menu items in apps or Windows 11 itself.

And if you weren't aware of the mentioned workaround – to head into the Settings app, find the mouse size slider, and adjust it – you might end up rebooting your PC to cure the problem. And that's if a reboot does actually fix things, because, as some others have noted, restarting can cause the issue, too.

This was an irksome glitch, then, so it's good to hear that it's now apparently resolved with the latest update for Windows 11.

You might also like...

- Microsoft promises to crack one of the biggest problems with Windows 11: slow performance

- Windows 11's handheld mode spotted in testing, and I'm seriously excited for Microsoft's big bet on small-screen gaming

- No, Windows 11 PCs aren't 'up to 2.3x faster' than Windows 10 devices, as Microsoft suggests – here's why that's an outlandish claim

Meta's next wearables announcement might include a smartwatch for its smart glasses

- Meta is said to be developing a new smartwatch

- It could feature a camera but be light on health features

- We might see it at Meta Connect later this year

Meta’s on-again-off-again relationship with smartwatches might be back on an upswing, as there are reports it will be releasing new wrist-based tech at Meta Connect 2025, which is taking place on September 17 to 18.

That’s according to Digitimes (behind a paywall), who claim Meta is partnering with Chinese manufacturers to bring its latest smartwatch iteration to life.

The device, however, might not be as health-focused as the competition, such as the Samsung Galaxy Watch 8 or Apple Watch 10. Instead, Meta, perhaps unsurprisingly, might be focusing on XR technologies.

Its watch would apparently incorporate a camera of some kind, and could complement Meta’s smart glasses, including its much-rumored upcoming pair, which would feature a display for the first time. This sounds like it might be an enhanced version of the wristband Meta Orion testers have used to control those glasses.

However, it’s unclear if the rumored smartwatch would enhance Meta's existing best smart glasses, like the Ray-Ban Meta and Oakley Meta HSTN specs.

Light on details

As with all leaks, we should take these details with a pinch of salt. However, Meta has continued its development of wrist-based EMG technology, and so it’s not out of the realm of possibility that it would want to develop something more sophisticated using its research.

What’s more, as I alluded to earlier, this would hardly be the first smartwatch leak we’ve heard from the company, though some rumors were related to its cancellation and subsequent revival, suggesting some previously teased details may no longer be accurate.

Even if a Meta Watch is on the way, many questions remain about its cost, battery life, specs, and release date. And if the device does appear in Meta’s 2025 Connect opening keynote, it might just be a teaser of what’s to come rather than a concrete promise of a gadget releasing soon.

I, however, am interested to see what Meta can construct.

I would still rather the device served as an add-on to its smart glasses, much like existing smartwatches with phones, rather than a more standalone device, which appears to be on the cards. But if it can incorporate health, fitness, and hand-tracking tools, I’m fine with it also including a camera and worthwhile Metaverse tools, provided the cost doesn’t get out of hand.

We’ll just have to wait and see what it showcases when Meta’s ready to finally make this much-rumored wearable official.

Gamers are getting around Discord's new UK age verification tools by using Death Stranding's photo mode – yes, you read that right

- The UK's Online Safety Act has introduced age verification tools across multiple platforms

- It prevents users from accessing potentially 18+ rated content

- It's left multiple gamers and Discord users looking for alternatives due to verification issues

Britain's new Online Safety Act has left online users in uproar, with age verification required to access channels on platforms like Discord or Reddit that contain 18+ (NSFW) content. However, as the internet often does, many have found a workaround to bypass this requirement.

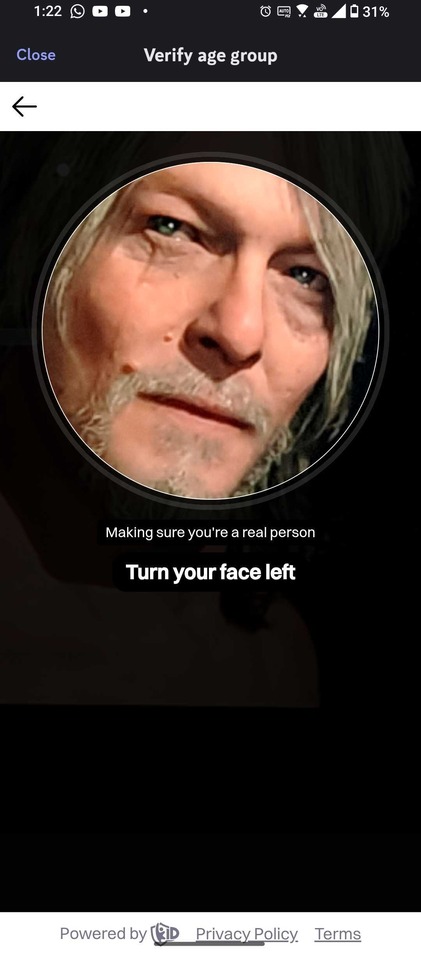

As reported by our friends at PC Gamer, Hideo Kojima's Death Stranding and its photo mode allow users to bypass new UK age verification checks. The measure requires users to capture their faces or personal ID to determine their age, but using Death Stranding's Sam Porter Bridges character appears to be enough for verification.

Since Death Stranding's photo mode has multiple emotes for Sam, players can simply use their phone's front camera and trigger specific emotes that make Sam open and close his mouth, as per the face scanner's instructions (which you'll see below).

When I tried to scan my face to access my own Discord server and other Safe for Work (SFW) servers with age-restricted channels, the scanner either displayed instructions every few seconds (before I could even follow them) or incorrectly determined that I was a young teenager, which was my final result.

An easier solution might come to mind, such as scanning in a pre-existing image, but as I've mentioned, this system requires you to make certain facial movements, which is something a still image can't do.

The new requirement is considered a positive measure, possibly preventing underage users from accessing harmful content (and rightly so). However, it's inadvertently affecting those who are of age, especially those who aren't comfortable with sharing their personal ID, despite the suggestion that images aren't stored after verification is completed.

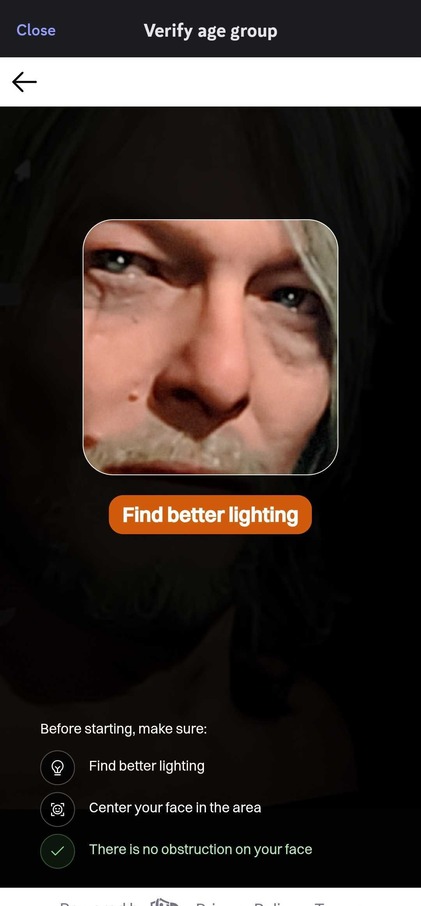

We've already seen a huge leak of the Tea dating app, leaving personal IDs and images of users widely available online, so there's no guarantee that using personal IDs for other social apps is safe either.


First and foremost, I'm not advocating or recommending the use of this Death Stranding bypass by any means, especially if you're underage. However, since there are clearly issues with the face scanner, including a lack of alternatives to access Discord servers (besides using a personal ID), this is the only solution for many.

I've tried to use the face scanner to no avail, and according to Discord, I'm a young teenager, which clearly isn't the case. Fortunately, Reddit's age verification face scanner worked easily for me without any issues, so perhaps it's an isolated issue with Discord's face scanner.

The last thing I would do is use my personal ID for verification for a social app like Discord, and it explains why gamers are going to the lengths of using tools like Death Stranding's photo mode.

It's worth noting that there is already a petition in place to repeal the new Online Safety Act, so it remains to be seen what comes of this all.

For now, I'll be patiently waiting for another solution – and fortunately, I'm in no rush to regain access to a small selection of Discord servers.

You might also like...

- The Tea app hack explained - how a data breach spilled thousands of photos from the top free US app, and what to do

- Yahoo and AOL mail suffered hours of outage – here's how it played out

- Can't (or won't) upgrade to Windows 11, but afraid to switch from Windows 10 to Linux? This app might make the transition easy

The Tea app hack explained – how a data breach spilled thousands of photos from the top free US app, and what to do

- Tea is a popular 'dating safety tool' that just suffered a data breach

- 72,000 images pertaining to the app were involved, some of which were user photo IDs

- There's an ongoing investigation, but the obvious worry here is potential identity theft for those whose images were exposed

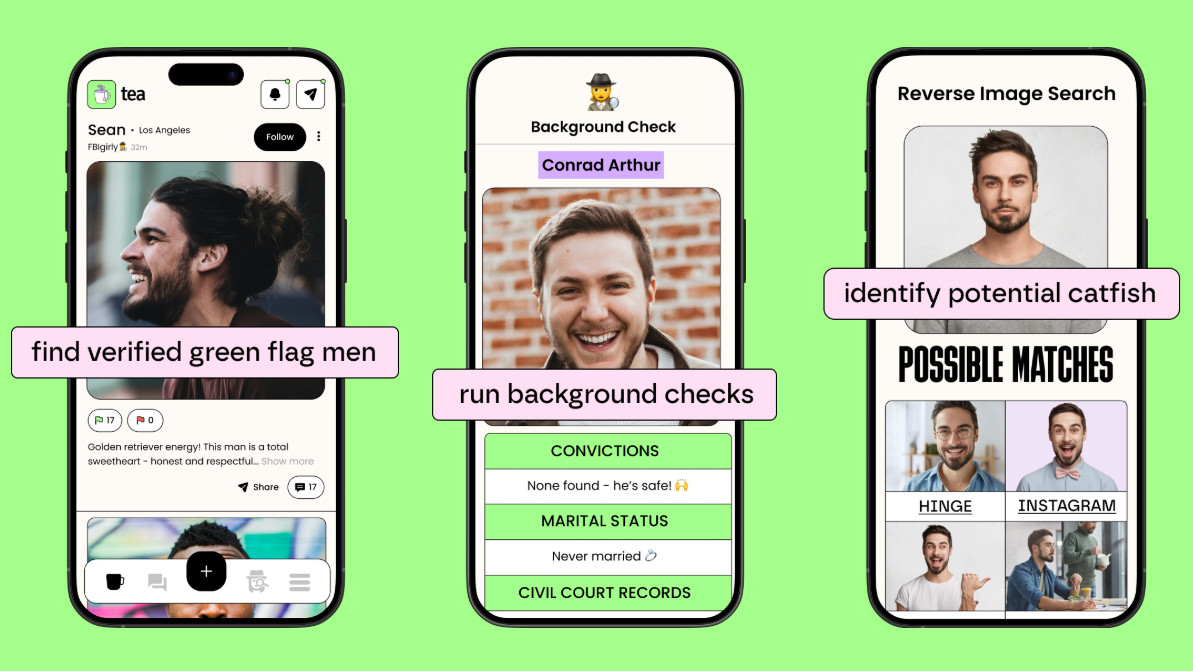

Tea is a popular mobile app designed as a 'dating safety tool' to protect women and has been around since 2023.

Its full name is Tea Dating Advice, and the central idea is a women-only app that gives those who are dating the ability to access background checks on men. This includes whether they have a criminal record (or if they're sex offenders), as well as reverse image searching to identify catfishing (assuming a false identity online).

At the end of last week, as NBC News reported, Tea admitted that it had suffered a data breach in which 72,000 images were accessed by the intruder.

That included 13,000 images (selfies and photo ID) submitted by users during account verification. The other 59,000 images were also provided by users and "publicly viewable" in posts (and direct messages) on the app.

As Tea acknowledged on its Instagram account, these images were stored on an 'archived data system' and the firm said that any users who signed up for Tea during or after February 2024 won't be affected. In other words, this is old data archived on a server that only pertains to older posts and accounts before that date.

The company made it clear that the photos "can in no way be linked to posts within Tea".

A Tea spokesperson told NBC: "This data was originally stored in compliance with law enforcement requirements related to cyberbullying prevention."

NBC reported that the hack may be connected to 4chan, with a 4chan poster allegedly allowing for the database of stolen images to be downloaded on that platform. Supposed ID photos from Tea users are also said to have been posted on some social media outlets, too, but obviously, exercise caution around such reports.

Tea said that it has more than four million users in total, and it became the top free app in Apple's App Store in the US this past week (having recently gained a million new members).

Tea said it's conducting an ongoing investigation into the security incident, which includes external cybersecurity experts, and that it has notified law enforcement in the US.

The key point to remember here is that if you signed up more recently for Tea, you shouldn't be affected by this breach. As noted, the impact only extends to an archive server and members who joined before February 2024.

At least that's according to what we know from the investigation so far, and the apparent extent of the breach - so the caveat is that we assume the ongoing investigation won't reveal anything else has been accessed.

The other important point to remember here is that only the images were accessed, according to Tea, and no personal data relating to members, such as email addresses or phone numbers.

The worrying part about the data that was accessed, though, is that some of it contains official IDs (and selfies) which could potentially be used for identity theft. It's worth noting here that Tea also clarifies (in an official statement flagged by USA Today) that it no longer requires an official ID for sign-up, and dispensed with that requirement in 2023.

If you joined Tea before February 2024 and provided a government ID for the sign-up process, then the latter could have been exposed. There's no clear way of knowing that at this point, but it's safest to assume that your ID (or other images) may have been leaked online.

That means this information could end up in the hands of a bad actor, sadly, but it's difficult to say whether that will happen for sure, or indeed to know if it does happen.

What you can do for now as an obvious first line of defense is keep an eye on your finances (bank accounts and credit card statements), watching for any irregularities. In all honesty, this is something you should do anyway, as fraud is an ever-present danger these days with a growing number of scams (alongside data breaches like this one).

A further proactive move is to sign up for one of the best credit monitoring services, and the good news is that you can get this for free (from Experian).

What these services do is keep an eye out for your personal details (from, say, a stolen ID) being used online in suspicious circumstances, bringing these incidents to your attention, so you can be aware of anything potentially underhanded before it comes to fruition. There are also full identity theft protection suites out there, too, for a more comprehensive level of protection.

You might also like...

- Keep your identity intact: 5 easy-to-follow tips to avoid identity theft and fraud

- Microsoft promises to crack one of the biggest problems with Windows 11: slow performance

- No, Windows 11 PCs aren't 'up to 2.3x faster' than Windows 10 devices, as Microsoft suggests – here's why that's an outlandish claim

Can't (or won't) upgrade to Windows 11, but afraid to switch from Windows 10 to Linux? This app might make the transition easy

- A Windows-to-Linux migration tool has been revealed

- It's still in development, but looks very promising, providing a seamless way of transitioning to Linux

- Only one distro is supported, but there's the possibility of multiple options in the future

Those whose PC doesn't support Windows 11 - or people who just plain don't like Microsoft's newest OS, and don't want to leave Windows 10 for it - could, at some point down the line, have another option in terms of a way to switch to Linux instead.

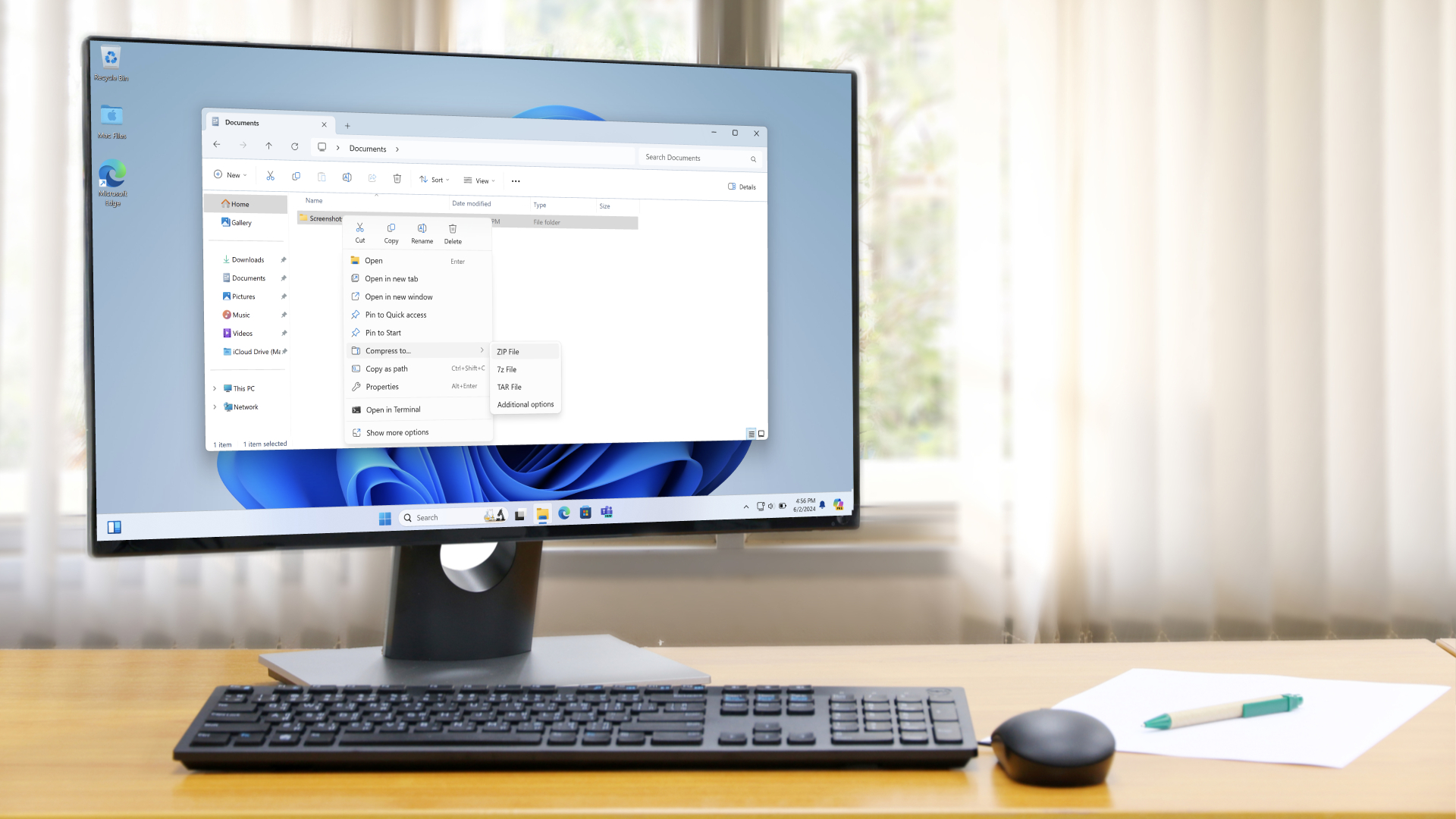

Neowin picked up on a project that's a Windows-to-Linux migration tool, enabling you to shift across all your files and settings - as well as installed apps - from Windows 10 over to Linux.

Now, there are a few caveats for this concept, and I'll lead with the most notable, namely that this isn't a finished product yet. The software is still under development, in an "early" form, and all we can see of it now is a demo on YouTube.

The tool, called Operese, is being put together by 'TechnoPorg', an engineering student at the University of Waterloo (in Canada), and the utility has been under development for some time.

Another sizeable catch is that it only allows Windows 10 users to switch to one particular Linux distro, Kubuntu (which is based on the popular Ubuntu, so it's far from a bad choice - and I'll come back to this shortly).

Otherwise, you can see how the process works in the 'Program Demo' section of the YouTube clip below. The app takes care of the whole migration, extracting the relevant data from Windows 10, then installing Kubuntu, and reapplying the correct settings, your files, and so on (achieving this using some clever trickery with drive partitions).

Analysis: In-place upgrade to Linux

Essentially, Operese is like an in-place upgrade, the same as you might perform to shift to Windows 11, except in this case, you're going from Windows 10 to Linux. It's a very smart idea in that respect for those who are intimidated by the idea of migrating to Linux - you can do it straight from your existing Windows 10 PC, with no fuss whatsoever, just sit back and let the tool do all the hard work.

I'm not sure how the transfer of apps will be facilitated, and obviously, that could get tricky where software isn't available on Linux (or doesn't work on the platform). Indeed, the program migration aspect remains unfinished in Operese at this point in time, as the developer tells us in the YouTube clip, so this is still rather up in the air.

Another issue is that, given that we're told it's still relatively early in development, is this app going to be ready for Windows 10's End of Life? TechnoPorg says it'll be full steam ahead working on Operese until October, which suggests that the dev is trying to hit that deadline, when support for Windows 10 ceases. He may need help to that end, and talks about making the code open source, too (not a bad thing in terms of security, either).

Still, even if that deadline is missed, remember that Windows 10 users can get an extra year of support just by syncing PC settings via the Windows Backup app (not a high price to pay in my view). The app could still be very useful even as a late arrival, then, given that breathing space. And even if this project isn't realized, it does show that this kind of streamlined Linux migration is perfectly possible - and that it might show up in some form, hopefully sooner rather than later.

For those grumbling that there's only one choice of distro, TechnoPorg observes that Kubuntu was selected for some good reasons. Its stability, third-party driver support, and the KDE Plasma desktop environment will make those who are used to the Windows desktop feel somewhat at home. Also, Ubuntu offers a great set of tools for automated installations, apparently, which makes it suit this project nicely.

All that said, the developer indicates that supporting some of the other best Linux distros is a possible long-term goal, and that "based on the overwhelming community feedback, I will be making the internals [of Operese] more distro-agnostic". Meaning other options aside from Kubuntu - hopefully, alternatives well-suited to Windows users - could be in the cards eventually.


Yahoo and AOL mail suffered hours of outage – here's how it played out

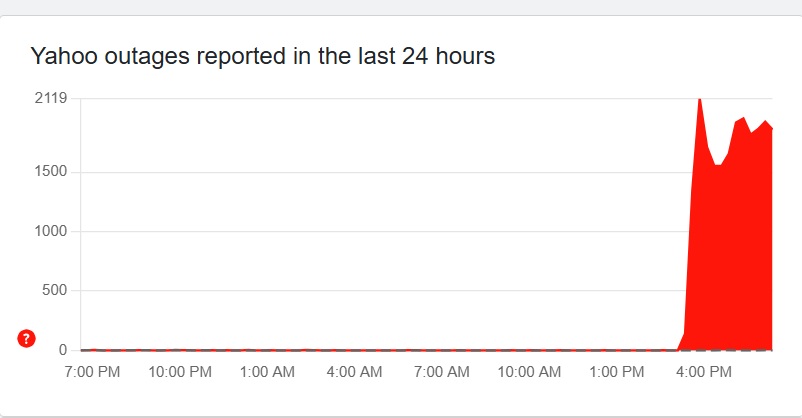

Both Yahoo and AOL's email services got hit by an outage that appeared to last some four hours, and mostly affected the US.

From what I could ascertain, the outage happened around 10am ET, with users unable to access the email services of both brands, or unable to send and receive emails.

At the time of this update, it would appear that the outage has been resolved, with an increasing number of users reporting they now have access to their email. However, plenty of people have noted they are missing emails; at the time of writing there's no indication Yahoo or AOL will work to restore these emails.

It's also not clear what caused this outage, but it did seem to be limited to just the email services.

Check out the live blog posts below for my reporting of the outage as it developed, and do let me know if you have any insight into it, or if it affected you in a big way.

AOL took to X to post that it's experiencing outage problems with its email service.

We understand some users are currently experiencing difficulties accessing their accounts. We are actively investigating this issue and will provide updates as soon as more information becomes available. We appreciate your patience and apologize for any inconvenience this may… (July 24, 2025)

Yahoo has basically said the same as AOL on X: "We understand some users are currently experiencing difficulties accessing their accounts. We are actively investigating this issue and will provide updates as soon as more information becomes available. We appreciate your patience and apologize for any inconvenience this may cause."

It seems to be a day for outages, as over in the UK, network provider EE is also suffering an outage, which appears to be ongoing.

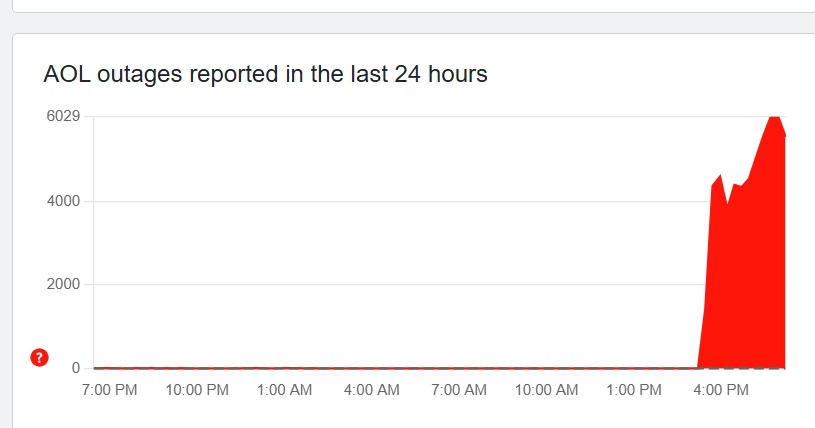

Looking at the Downdetector report for AOL, it seems to be specifically affecting the email side of its services.

"I'm in Alabama. I can log in and it show's I have email but the messages says there was an error fetching the items in the list. I used MS Outlook and am getting a sync error for my AOL account. This SUCKS! I sell Real Estate and this is one of the WORST things that could happen!!!" said one user on Downdetector.

It seems to me that this outage has been going on for AOL from around 10am ET.

And there have been growing reports on Downdetector, which would indicate this issue is becoming widespread in the US.

In the UK there was a spike of AOL outage reports, again pertaining to emails but the outage seems a lot smaller; that could be down to fewer UK users than in the US.

The same could be said for Yahoo Mail in the UK, though it seems to me that on the Downdetector page, reports of problems could be abating.

As one might expect, there's not a lot of friendly sentiment for Yahoo on X, with one user noting: "Yahoo email is so unreliable. Time to find something better, and that shouldn't be too hard."


Equally, and a little like me, some people have expressed wry bemusement that people are still using Yahoo email...

TIL people are still using Yahoo for things (July 24, 2025)

There's a little bit of inconsistency in how the outage seems to be affecting Yahoo Mail users, with some saying they can log into their email but aren't getting any messages, while others are noting they can't access any of their emails, with error messages being thrown up.

Things aren't exactly rosy for AOL either, with responses to the company's X account noting the outage is somewhat widespread in the States and affecting different devices.

This user isn't happy...

I guess this what we get for keeping aol as our email. I've had it since 1998. Ridiculous (July 24, 2025)

So why are AOL and Yahoo both suffering an outage? Well, they merged into one company, so they are almost certainly sharing infrastructure, meaning an outage for one brand is likely to affect the other.

With so many email clients and services, one might think an AOL/Yahoo outage could be glossed over a little, but as one Downdetector poster notes: "RIP. Can't retrieve MFA codes."

So if you're using such an email service to handle multi-factor authentication then you might be a little stuck.

Still nothing new from Yahoo or AOL on how long this outage will last or what's caused it.

I can't see any outages for cloud platform providers that could be affecting email service servers and supporting infrastructure, so I'm a little limited on how much I can speculate as to the cause of this email outage.

Interestingly, the sign-up page for AOL email still appears to be active...

I kind of feel sorry for small businesses when these forms of email outages occur, as they probably lack the resources and backup systems to mitigate for such outages.

Down on desktop, app and iPhone in Massachusetts. Please keep us updated (July 24, 2025)

So if you look at this image, you can see it's not a good look for AOL's email on Downdetector, but perhaps the number of outage reports has peaked...

It's understandably a similar situation for Yahoo on Downdetector, as you can see below...

No hint of when a fix will be made for Yahoo. And I feel this X user's frustration...

What is the time frame to have this issue fixed? I really need to access my Email (July 24, 2025)

AOL is being equally quiet...

But oddly it seems like AOL email is working on iPhone for some users.

AOL mail is down online but available on an iPhone. (July 24, 2025)

Totally get this could be a big ol' issue for Yahoo email users too:

When an important website (like a bank) sent a code to your email to sign in but your email is unavailable....What happens to the code or other info that was sent to this email? @YahooCare @yahoomail pic.twitter.com/YPlCJ3z2pg (July 24, 2025)

I'm a Microsoft and Gmail email user, so I tend to have backups for certain important authentication services. But it's not a foolproof method, especially if you can't use a backup or secondary email.

If I couldn't get a bank code due to an email outage, I'd be rather upset... I'm not the most patient of people.

AOL's main website, including its search and news functions, is operating fine, so this does appear to be an outage exclusively linked to email services.

Hmmm I'm seeing some murmurings that AOL email could slowly be coming back with Downdetector user MelissaW noting "It looks like it works, but will not delete email or let you look in folders. Gives an error message."

It certainly doesn't look like the email issue is fixed, but sporadic returns of service may be an indicator that AOL is trying out fixes.

A scathing comment about AOL by one Stephen Jackson on Downdetector: "AOL too busy bombarding us with their ever switching non-stop spam, instead of focusing on making sure their email works."

On the Yahoo Downdetector page it looks like some of the service is coming back with one RL Ross noting: "It seems to be coming back up - just got my emails from this morning, finally."

"Mine just came back," said Downdetector user Pamela. So things are looking up.

This message still remains at the top of AOL's Help page: "We are aware some users are experiencing issues accessing their AOL Mail, or displaying their mailbox. We are working to resolve this as quickly as possible. Thank you for your patience as our engineers work to remedy this concern."

Looks like AOL email could be back up in South Florida, New York City, Langhorne, Washington, Los Angeles and North Carolina, according to a clutch of Downdetector users.

We got an email from a reader called Barbara Callahan who said the following: "I have been following you about AOL outage. Thank you for ALL the updates am on Long Island NY and mine seems to be up and working now."

So it looks like all is slowly getting well with Yahoo and AOL's email services. Expect a few hiccups or perhaps some lost emails or ones stuck in drafts.

"The outage today ate some emails. I have a gap of about 3-4 hours of emails that I never received that I know were sent. Yikes." said a Downdetector user on the Yahoo page.

With AOL some users are suggesting a restart of the app, and that's something I'd recommend after an outage, so consider it a good tip.

While it doesn't look like every Yahoo and AOL email user has a fully functional service yet, it would appear that whatever has been done to fix the outage is spreading across the States.

A good few users of both email services are reporting that emails they expected during the roughly four hours of the outage are missing.

"My Yahoo mail is back but it looks like about four hours of missing messages? Will Yahoo restore those? I hope so." said Downdetector user 'leemortimer'.

In regards to AOL, Downdetector user Jana noted: "The mail is back up in NJ but I am missing all the messages sent from 10:30am-2:15pm."

While AOL has yet to remove the outage message from its Help page, and neither it nor Yahoo has posted on X to follow up on their earlier posts about the email problems, Downdetector and user reports suggest that both email services are mostly back up and running.

There are plenty of reports of missing email, which is to be expected in such an outage, and it's not clear if Yahoo/AOL will be able to retrieve them.

But I'm closing off this live blog for now, as I consider the incident to be over. I just hope you weren't hugely affected by this, but if so do check out our roundup of the best email services if you fancy a switch from AOL or Yahoo.

Microsoft gives you another reason to hook up your Windows 11 PC and Android smartphone - it's a security feature that could come in seriously handy

- The Link To Windows app on Android has a new 'Lock PC' button

- This allows you to remotely lock your Windows 11 PC from the phone

- Other goodies introduced with Link To Windows recently also include the ability to grab clipboard contents from your PC on your smartphone

Windows 11 users are getting the ability to lock their PC remotely by using their Android smartphone, assuming these two devices are hooked up via the Phone Link app.

Windows Central reports that the ability to lock your Windows 11 PC with the tap of a button from your phone is now present in the overhauled Link To Windows app on Android. Apparently, it's in version 1.25071.165 of the app (or newer), but the functionality is still rolling out, so it may take a while yet to turn up for all users.

The 'Lock PC' button could come in very handy if you leave your desktop PC for a quick trip to the coffee machine, but end up called away for longer, and so you want to ensure the device is secure while you're away (without having to return to the computer).

As Windows Central notes, when you use this remote lock function, it will disconnect your smartphone from the Phone Link app until you log back in.

The freshly redesigned Link To Windows app now also lets you access the contents of the clipboard on your Windows 11 PC (if you have this synced), and allows for viewing recently shared files, too.

These are potentially very useful extras that add a good deal of convenience - it might be very handy to grab Windows 11 clipboard contents on your smartphone. And as noted, the ability to remotely lock your computer could be a security lifesaver of sorts in certain situations (maybe not often, but when you need the ability, you'll be glad of it).

There is something else to be aware of here, though, in terms of existing functionality. As Windows Central points out, you can already have your PC automatically lock when you're not present by using a Bluetooth-powered feature. This is called dynamic lock, and it works by kicking in when you've left your PC, and your smartphone is far enough away so the Bluetooth signal weakens to a certain level.

The catch here is obvious enough, though - not everyone wants to keep Bluetooth on constantly (given the battery drain on the phone, or indeed the Windows 11 PC, if it's a laptop). So, a simple manual alternative in the form of the 'Lock PC' button is clearly a boon.
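To make the dynamic lock idea described above more concrete, here is a minimal Python sketch of a proximity-based lock decision. It is not Microsoft's implementation: the signal threshold, the number of weak readings required, and the names `ProximityLock` and `first_lock_index` are all assumptions made purely for illustration.

```python
from dataclasses import dataclass

# Hypothetical values for illustration only; Windows does not publish
# the thresholds that dynamic lock actually uses.
WEAK_RSSI_DBM = -80      # signal strength treated as "the phone has moved away"
WEAK_SAMPLES_NEEDED = 3  # consecutive weak readings required before locking


@dataclass
class ProximityLock:
    """Decides when to lock, based on a stream of Bluetooth signal readings."""
    threshold_dbm: int = WEAK_RSSI_DBM
    samples_needed: int = WEAK_SAMPLES_NEEDED
    weak_streak: int = 0

    def update(self, rssi_dbm: int) -> bool:
        """Feed in one reading; return True when the PC should lock."""
        if rssi_dbm < self.threshold_dbm:
            self.weak_streak += 1
        else:
            self.weak_streak = 0  # a strong reading resets the streak
        return self.weak_streak >= self.samples_needed


def first_lock_index(readings):
    """Return the index of the reading that triggers a lock, or None."""
    lock = ProximityLock()
    for index, rssi in enumerate(readings):
        if lock.update(rssi):
            return index
    return None
```

Requiring several weak readings in a row, rather than locking on the first one, is a design choice in this sketch to avoid locking the PC because of a single momentary dip in signal.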

Microsoft is making good progress with Phone Link, even if that progress is more of the slow and steady variety than huge leaps forward. Still, some bugbears remain with users, most notably that only Samsung Galaxy phones get the best features.


Microsoft claims Windows 11 24H2 is the 'most reliable version of Windows yet' - but there are PC gamers out there who disagree

- Microsoft is claiming Windows 11 24H2 is the most reliable version ever

- The company says it has 24% fewer crashes than Windows 10 22H2

- In a blog post about resiliency, Microsoft also clarifies the benefits of the latest Windows update for 24H2

Microsoft has put forward a case for Windows 11 24H2 being the "most reliable version" of its desktop operating system ever made.

The long and short of this is that the software giant claims there are a good deal fewer crashes with Windows 11 24H2 compared to version 22H2 of Windows 10.

XDA Developers spotted that Microsoft made this assertion in a post on the Windows IT Pro Blog, stating that: "We're also proud to share that Windows 11 24H2 is our most reliable version of Windows yet. Compared to Windows 10 22H2, failure rates for unexpected restarts have dropped by 24%."

This is based on telemetry data gathered this month (July 2025) by Microsoft, we're told. Unexpected restarts refer to complete lock-ups of the system, and as noted earlier in the post, these Blue Screen of Death (BSoD) experiences have been changed to a more streamlined black screen. That happened in the latest update for Windows 11 24H2, which just arrived in preview (but will be a full release next month).

Microsoft underlines the benefits of the new BSoD (which handily enough uses the same acronym) and notes that: "In Windows 11 24H2, we made significant improvements to crash dump collection that reduced the time users spend on the [BSoD] screen from 40 seconds to just 2 seconds for most consumer devices." (I should note that the bolding for emphasis is Microsoft's).
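To put those figures in perspective, here is a quick Python sketch of the arithmetic. The 40-second, 2-second, and 24% values come from Microsoft's post; the baseline of 1,000 unexpected restarts is a made-up number used only to show what a 24% drop looks like, as Microsoft hasn't published absolute figures.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative drop from `before` to `after`, expressed as a percentage."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) * 100 / before


def apply_reduction(baseline: float, percent: float) -> float:
    """Value left after cutting `baseline` by `percent` percent."""
    return baseline * (100 - percent) / 100


# Time spent on the crash screen: 40 seconds down to 2 seconds.
crash_screen_drop = percent_reduction(40, 2)  # 95.0

# Hypothetical baseline of 1,000 unexpected restarts, cut by 24%.
restarts_after = apply_reduction(1000, 24)  # 760.0
```

In other words, the crash screen time falls by 95%, while the 24% claim would take a hypothetical 1,000 unexpected restarts down to 760.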

Furthermore, Microsoft reminds us of recent progress in terms of recovery from nasty crashes, namely boot failures, observing that the most recent Windows 11 update also represents the rollout and general availability of quick machine recovery. QMR is a new spin on attempting to fix a PC that will no longer boot to the desktop.

Analysis: crash tests, dummy

There's no doubting that Microsoft has made some useful strides forward here in terms of making Windows 11 devices more resilient, which is the theme of the blog post. But does the latest version of Windows 11, 24H2, really have a quarter fewer crashes than Windows 10 22H2?

Well, we obviously need to be cautious about stats produced by internal testing - not that I'm accusing Microsoft of anything underhand, but we're all aware that in these kinds of scenarios, multiple tests can be carried out and results cherry-picked. (This practice is common across all marketing, of course).

There are doubtless those who would argue vehemently that 24H2 very much isn't the most reliable take on Microsoft's desktop OS that's ever been seen - mainly based on all the bugs that came with this release. I won't bang on about those glitches again, save to say that there were a lot of them initially, and some were very odd affairs indeed.

I've always been of the opinion that the shift to a new underlying platform for Windows 11 (called Germanium) threw a number of unexpected spanners in the works for the OS.

Microsoft has made a lot of headway in fixing those bugs, mind - although not all of them - and I've got to accept the stats the company presents here at face value. (I can hardly disprove them with my own crash testing, after all).

However, what I can say is that this is all relative, anyway. By which I mean I've not seen a BSoD in ages, on my Windows 11 PCs, or indeed my Windows 10 machines.

So, fewer crashes means those BSoD instances are even closer to next-to-nothing - and how meaningful is the difference, then? I'm not sure. There's no disputing that in contemporary times, full-on lock-ups are a lot rarer in Windows than they used to be. Rewind time by 15 years or so, and crashes like this were more of a problem (and go back further, to the turn of the millennium, and that was doubly true).
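To put some rough numbers on that point, here's a minimal Python sketch of how a relative drop translates into actual crashes avoided. The only figure taken from Microsoft is the 24% reduction; the baseline rates and the `crashes_per_thousand` helper are hypothetical, made up purely for illustration, and aren't real telemetry.

```python
def crashes_per_thousand(baseline_rate: float, relative_drop: float = 0.24) -> tuple[float, float]:
    """Return (before, after) unexpected restarts per 1,000 PCs.

    baseline_rate is a hypothetical share of PCs hitting an unexpected
    restart in some period; relative_drop is Microsoft's quoted 24%.
    """
    before = baseline_rate * 1000
    after = before * (1 - relative_drop)
    return before, after


# Hypothetical baselines (NOT Microsoft figures): a crash-prone era vs a stable one.
for rate in (0.20, 0.01):
    before, after = crashes_per_thousand(rate)
    print(f"baseline {rate:.0%}: {before:.1f} -> {after:.1f} per 1,000 PCs "
          f"({before - after:.1f} fewer)")
```

The same 24% cut removes far fewer crashes in absolute terms when the starting rate is already low, which is why the headline percentage on its own doesn't tell you how noticeable the improvement will be.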

Whatever the case, Microsoft deserves some credit here, and I'm also hoping that the new quick machine recovery feature will be a useful string to the troubleshooting bow when a PC hits a boot failure (that most dreaded of problems).

I have my reservations about the redesigned BSoD, mind - as I've said before - and you can bet that Microsoft's assertion that Windows 11 24H2 is the most reliable version ever is going to cause the grinding of some gears out there. That's particularly true of those who adopted 24H2 early, and were unfortunate enough to run into some of the many bugs that plagued the release (gamers were especially in the firing line).

However, there are anecdotal reports on Reddit that Microsoft is finally getting it right with version 24H2, even if it was wonky to begin with - though note that in the same thread, others are still reporting negative experiences (again, particularly those who are keen PC gamers).

You might also like...

- Microsoft promises to crack one of the biggest problems with Windows 11: slow performance

- Windows 11's handheld mode spotted in testing, and I'm seriously excited for Microsoft's big bet on small-screen gaming

- No, Windows 11 PCs aren't 'up to 2.3x faster' than Windows 10 devices, as Microsoft suggests – here's why that's an outlandish claim

Hate Windows 11 and don't want to upgrade? You can now extend the life of Windows 10 until October 2026 – here's how

- Windows 10's scheme for extended updates is now open

- You can sign up using your Microsoft account and get free updates (if you sync your PC settings to OneDrive)

- The enrollment wizard to access the scheme seems to still be rolling out, but you should see it very soon

Windows 10 users can now sign up for extended updates, meaning security patches that are delivered past the end-of-support deadline to keep the PC safe.

As you're doubtless aware, it isn't long before Windows 10's End of Life arrives, when Microsoft officially stops supplying security updates (or feature updates for that matter). This happens on October 14, 2025, and after that date a PC without updates will potentially be open to exploits.

The Extended Security Updates (ESU) program allows Windows 10 users to sign up for another year of updates, all the way through to October 2026, and enrollment for that scheme has now opened to consumers.

In a blog post (mostly pertaining to the most recent update for Windows 11, and the heap of new AI features therein), Microsoft explains that: "Starting today, individuals will begin to see an enrollment wizard through notifications and in Settings, making it simple to select the best option for you and enroll in ESU directly from your personal Windows 10 PC."

At this point you might be scratching your head and asking: so where is this enrollment wizard?

As Microsoft observes above, you may see a notification pop up in Windows 10 offering a link to sign up for the ESU scheme – and obviously you can use that if you see it. Otherwise, you can head to Windows Update (in Settings), where you should find a link to the same end, though you may have to bide your time.

In the Windows Update panel, you may see a link to 'enroll now' for Extended Security Updates either under where you check for updates (at the top), or with the links over on the right-hand side. I can't see this on my Windows 10 PC yet, but this YouTube video (from ThioJoe) shows where the links should be visible.

The reason why I can't see this is presumably because the rollout hasn't fully kicked off yet. As Microsoft says in the blog post, consumers will "begin to see" the enrollment wizard, meaning the rollout hasn't reached everyone yet. You may not see it either, and it's just a case of being patient – nobody should have long to wait at this point.

Whatever the case, when you click to enroll, you must sign in to a Microsoft account if you haven't already. This is because you'll need to register for the scheme. Also, if you want to get the year of extra updates for free, you'll need to be signed in to verify that you have synced your PC settings using the Windows Backup app.

That's the alternative way to enroll for the ESU, as opposed to paying a $30 fee (or using Microsoft Rewards points, which is a third option). Note that you don't have to use Windows Backup to make a full backup of your system to get free updates; you just need to sync your PC settings to OneDrive using this app, which seems a relatively small price to pay (compared to $30, certainly).

Those who've already synced settings in this way will be able to click straight through and get the ESU offer for free with no fuss.

According to YouTuber ThioJoe, it is possible to sign in to a Microsoft account to get the ESU on your PC, then switch away to a local account afterwards – and you'll still receive the additional updates throughout 2026 on that computer. Just in case you were curious about that tactic, it works – or so we're told, anyway.
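The enrollment routes described above can be summed up in a short Python sketch. To be clear, this is only my reading of the options as laid out in this article, not Microsoft's actual enrollment logic, and the `EsuRoute` and `esu_route` names are hypothetical.

```python
from enum import Enum


class EsuRoute(Enum):
    """Outcomes of the enrollment wizard as described in the article (hypothetical names)."""
    SIGN_IN_REQUIRED = "sign in to a Microsoft account first"
    FREE_WITH_SYNC = "free: PC settings already synced via Windows Backup"
    CHOOSE_OPTION = "sync PC settings for free, pay $30, or use Microsoft Rewards points"


def esu_route(signed_in: bool, settings_synced: bool) -> EsuRoute:
    """Pick the enrollment outcome for a Windows 10 PC (illustrative assumption only)."""
    if not signed_in:
        # A Microsoft account is needed to register for the scheme at all.
        return EsuRoute.SIGN_IN_REQUIRED
    if settings_synced:
        # Those who've already synced settings can click straight through.
        return EsuRoute.FREE_WITH_SYNC
    # Otherwise the user picks between syncing, the fee, or Rewards points.
    return EsuRoute.CHOOSE_OPTION


print(esu_route(signed_in=True, settings_synced=False).value)
```

Note that the sketch stops at enrollment: what happens if you later switch back to a local account is, as mentioned, only anecdotally reported.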

Windows 11 gets a big new update – here are the top 4 features, including a powerful AI agent for Copilot+ PCs

- Windows 11 has a new optional update

- It's a big one, delivering a whole load of new features

- These include an AI agent in Settings, a new quick-recovery option for PCs that won't boot, and added AI features for many Windows 11 apps

Windows 11 has a new update in preview, and it's a hefty download which packs in a lot of features, many of which are AI-related (and for Copilot+ PCs only, with their beefy NPUs).

This is patch KB5062660 for Windows 11 24H2, and it should be noted that this is an optional (preview) update, meaning that it's still in testing, so the various features may still have bugs (install it at your own risk, in other words).

Everything should be fully knocked into shape by the time the full update for August arrives, which is when all these features will be officially deployed (or should be – although some are on a 'controlled rollout', meaning they'll be drip-fed out).

So, with those caveats out of the way, what have we got to look forward to with this optional update (and next month's full upgrade)?

Here's my pick of the top features rolling out with KB5062660 (again, bear in mind that some are for Copilot+ laptops only).

1. AI agent in Settings

The headline functionality here is, sadly for most of us, for Copilot+ PCs only, and it's the addition of the first so-called Windows Agent. This is an AI agent specifically for the Settings app, and it lets you find and manipulate the options you need in a much more convenient way.

Normally, trying to find a setting involves using the search functionality, which can be rather hit-or-miss. With the AI agent, you're essentially getting an intelligent search where you can simply ask a question (in natural language) pertaining to what you're trying to do in Windows 11, and the agent will (hopefully) immediately surface the correct setting.

The AI doesn't just find the right setting for you, but can also make suggestions as to what changes you might want to make as well. Check out the video clip above of the agent in action to get a flavor of how it all works.

This is just rolling out to Arm-based Copilot+ PCs (with Snapdragon CPUs) to start with, but support for AMD and Intel chips is "coming soon" (it's also for the English language only initially).

Suffice it to say this is one of the more impressive uses of AI in Windows 11 I've seen so far, alongside more intelligent Windows 11 search from the desktop (for both Copilot+ laptops, and other PCs too). Yes, Microsoft appears to be progressing overall search capabilities nicely with AI, which is good to see.

2. New Click to Do shortcuts

Click to Do is Microsoft's array of context-sensitive AI-powered options in Windows 11 (for Copilot+ PCs), and a few more shortcuts (for selected text or images) have been added here. That includes a choice to fire up Reading Coach, which is a free app (installed via the Microsoft Store) that aims to help you polish up your reading skills (pronunciation and more besides).

Secondly, Immersive Reader is now in Click to Do, which takes any text and presents it in a "distraction-free environment", allowing you to adjust text size, font, spacing, and a bunch of other parameters to make everything more easily readable. It also helps with reading skills (breaking down words into syllables, providing a picture dictionary, and more).

Draft with Copilot is also now in Word (for Microsoft 365 Copilot subscribers), allowing you to turn a sentence (or short summary) into a lengthy full draft penned by the AI.

3. Quick Machine Recovery

Microsoft's Quick Machine Recovery (or QMR) is now arriving for all PCs (not just Copilot+ devices). It's designed to help your PC recover from a problem that stops it booting. This is the nightmare scenario we all dread, and the idea is that you'll be able to get help via the Windows Recovery Environment (which can be accessed if your system won't boot to the desktop).

QMR allows for diagnostic data to be sent to Microsoft and hopefully a patch can be sent back to cure the problem, or that's the idea. Another avenue of troubleshooting – one that's completely automated – is clearly a good idea, and I'm looking forward to seeing how useful this will be. (And I should clarify, I'm keen to see how competent this feature is – not to personally use it, with any luck. We can all hope that it's a screen we don't ever have to visit).

4. Image-related AI powers in Photos, Paint and Snipping Tool

A bunch of new AI-powered functionality for images is now inbound (for Copilot+ PCs), as previously seen in testing. That includes a Relight ability in the Photos app – allowing you to place virtual light sources to change the lighting in an image – and object select in the Paint app, which uses AI to select any given object (so you don't have to do so manually).

A similar feature to the latter is coming to the Snipping Tool called 'perfect screenshot', which lets you roughly select an area of the screen you want to grab, and then automatically makes a precise crop of that element, taking the pain out of that process. (Again, check the above video to see how this works).

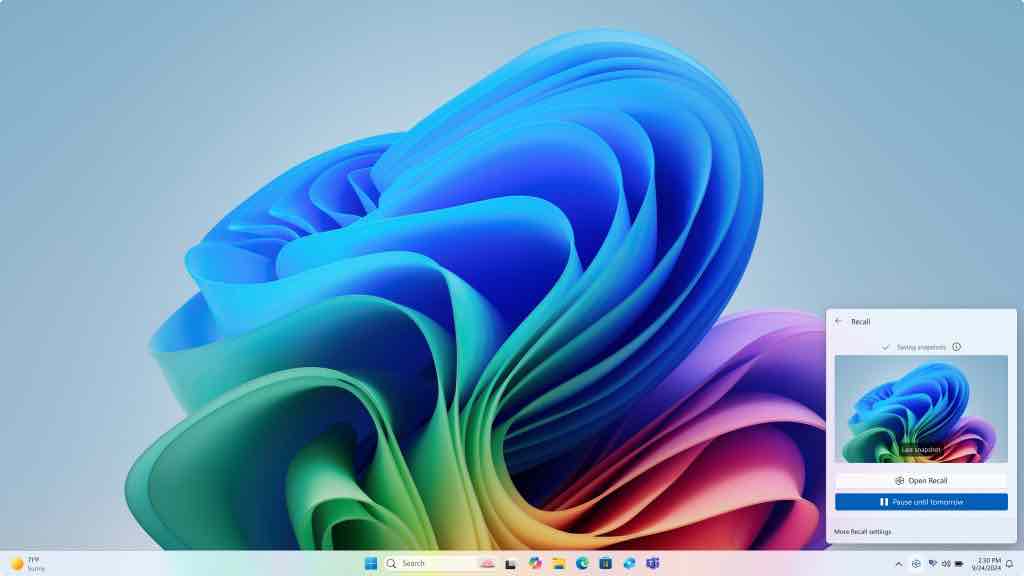

There's quite a lot going on with this update, as mentioned, and another development is that Recall is now being deployed in Europe. Previously, this AI-supercharged search (that works using regularly taken screen grabs) wasn't out in the European Economic Area (EEA), but it is now, and it comes with a new ability. This is the option to export its screenshots (called snapshots) to "trusted third-party apps and websites" (wherever you want, basically - should you wish to share this data).

Furthermore, all Recall users are getting a reset button, which deletes all data relating to the feature and restores Recall to its original settings, should you wish to start afresh (or indeed abandon the ability and turn it off).

Also, the Black Screen of Death is now official, so wave goodbye to the blue version that's been with us so long, and say hello to a more streamlined effort whenever your PC is unfortunate enough to witness Windows 11 locking up. (I'm not sure about this change, as I've discussed elsewhere at length recently).

The Gamepad layout in the virtual keyboard for Windows 11 now benefits from "enhanced controller navigation" which includes word suggestions and better handling of menus, as well as the ability to use a gamepad to sign in from the Windows lock screen (via the PIN panel).

Finally, this preview update fixes a problem with the May 2025 update for Windows 11 which caused some PCs to suffer instability issues (crashes). Microsoft says this was a "rare" bug that didn't affect many, but it sounds like a truly nasty one, so having it resolved will doubtless be a relief.

You might also like...

- Microsoft promises to crack one of the biggest problems with Windows 11: slow performance