
Feed aggregator

Tiny11 strikes again, as bloat-free version of Windows 11 is demonstrated running on Apple’s iPad Air – but don’t try this at home

- Tiny11 has been successfully installed on an iPad Air M2

- The lightweight version of Windows 11 works on Apple’s tablet via emulation

- However, don’t expect anything remotely close to smooth performance levels

In the ongoing quest to have software (or games – usually Doom) running on unexpected devices, a fresh twist has emerged as somebody has managed to get Windows 11 running on an iPad Air.

Windows Central spotted the feat, achieved using Tiny11, a lightweight version of Windows 11, which was installed on an iPad Air with an M2 chip.

NTDEV, the developer of Tiny11, was behind this effort, and used the Arm64 variant of their slimline take on Windows 11. Microsoft’s OS was run on the iPad Air using emulation (UTM with JIT, the developer explains – a PC emulator, in short).

So, is Windows 11 impressive on an iPad Air? In a word, no. The developer waits over a minute and a half for the desktop to appear, and Windows 11’s features (Task Manager, Settings) and apps load pretty sluggishly, but they work.

The illustrative YouTube clip below gives you a good idea of what to expect: it’s far, far from a smooth experience, but it’s still a bit better than the developer anticipated.

Analysis: Doing stuff for the hell of it

This stripped-back incarnation of Windows 11 certainly runs better on an iPad Air than it did on an iPhone 15 Pro, something NTDEV demonstrated in the past (booting the OS took 20 minutes on the smartphone).

However, as noted at the outset, sometimes achievements in the tech world are simply about marvelling that something can be done at all, rather than having any practical value.

You wouldn’t want to use Windows 11 on an iPad (or indeed an iPhone) in this way anyway, just as you wouldn’t want to play Doom on a toothbrush even though it’s possible (would you?).

It also underlines the niftiness of Tiny11, the bloat-free take on Windows 11 which has been around for a couple of years now. If you need a more streamlined version of Microsoft’s newest operating system, Tiny11 certainly delivers (bearing some security-related caveats in mind).

There are all sorts of takes on Tiny11, including a ludicrously slimmed-down version that comes in at a featherweight 100MB. And, of course, there’s the Arm64 spin used in this iPad Air demonstration, which we’ve previously seen installed on the Raspberry Pi.


A surprising 80% of people would pay for Apple Intelligence, according to a new survey – here’s why

- 80% of people would pay for Apple Intelligence, a survey has found

- Over 50% of respondents would be happy to pay $10 a month or more

- The results are surprising given Apple Intelligence’s history of struggles

Apple Intelligence hasn’t exactly received rave reviews since it was announced in summer 2024, with critics pointing to its delayed features and disappointing performance compared to rivals like ChatGPT. Yet that apparently hasn’t dissuaded consumers, with a new survey suggesting that huge numbers of people are enticed by Apple’s artificial intelligence (AI) platform (via MacRumors).

The survey was conducted by investment company Morgan Stanley, and it found that one in two respondents would be willing to pay at least $10 a month (around £7.50 / AU$15 p/month) for unlimited access to Apple Intelligence. Specifically, 30% would accept paying between $10 and $14.99, while a further 22% would be okay with paying $15 or more. Just 14% of respondents were unwilling to pay anything for Apple Intelligence and 6% weren’t sure, implying that 80% of people wouldn’t mind forking out for the service.
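To make the survey arithmetic explicit, here’s a tiny Python sketch. It assumes the reported answer categories are mutually exclusive and together cover every respondent; under that assumption, the share who would pay something under $10 is our own inference, not a figure Morgan Stanley reported.

```python
# Survey shares as reported (percent of respondents).
# Assumption: the categories are mutually exclusive and cover everyone.
pay_10_to_14_99 = 30
pay_15_plus = 22
unwilling = 14
unsure = 6

# "One in two" would pay at least $10 a month.
at_least_10 = pay_10_to_14_99 + pay_15_plus

# Everyone who didn't say "nothing" or "not sure" would pay something.
willing_to_pay = 100 - unwilling - unsure

# Inferred (not reported): willing to pay, but less than $10 a month.
under_10 = willing_to_pay - at_least_10

print(at_least_10, willing_to_pay, under_10)
```

Running it prints `52 80 28`: the 52% matches the “over 50%” figure, and the 80% is simply everyone who didn’t answer “nothing” or “not sure”.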

According to 9to5Mac, the survey found that 42% of people said it was extremely important or very important that their next iPhone featured Apple Intelligence, while 54% of respondents who planned to upgrade in the next 12 months said the same thing. All in all, the survey claimed that its results showed “stronger-than-expected consumer perception for Apple Intelligence.”

Morgan Stanley’s survey polled approximately 3,300 people, and it says that the sample is representative of the United States’ population in terms of age, gender, and religion.

Surprising results

If you’ve been following Apple Intelligence, you’ll probably know it’s faced a pretty bumpy road in the months since it launched. For one thing, it has been widely criticized for how poorly it carries out tasks for users, with many people comparing it unfavorably to some of the best AI services, such as ChatGPT and Microsoft’s Copilot.

As well as that, Apple has been forced to delay some of Apple Intelligence’s headline features, such as its ability to work within apps and understand what is happening on your device’s screen. These were some of Apple Intelligence’s most intriguing aspects, yet Apple’s heavy promotion of these tools hasn’t translated into working features.

That all makes these survey results seem rather surprising, but there could be a few reasons behind them. Perhaps consumers are happy to have any AI features on their Apple devices, even if they’re missing a few key aspects at the moment.

Or maybe the people who said they’d pay for Apple Intelligence were picturing the full feature set, rather than the incomplete range of abilities currently available. Alternatively, it could be that everyday users haven’t followed Apple Intelligence’s struggles as closely as tech-savvy consumers, and so aren’t acutely aware of its early difficulties.

Whatever the reasons, it’s interesting to see how many people are still enticed by Apple Intelligence. It will be encouraging reading for Apple, which has faced much bad press for its AI system, and might suggest that Apple Intelligence is not in as bad a spot as we might have thought.


Windows 10 might be on its deathbed, but Microsoft seemingly can’t resist messing with the OS in latest update, breaking part of the Start menu

- Windows 10’s April update has messed up part of the Start menu

- Right-click jump lists that were present with some apps no longer work

- These were a very handy shortcut and a key part of the workflow of some users, who are now pretty frustrated at their omission

Windows 10’s latest update comes with an unfortunate side effect in that it causes part of the Start menu to stop working.

Windows Latest reports that after installing the April update for Windows 10, released earlier this month, jump lists for some apps on the Start menu now appear to be broken.

Normally, when you right-click a tile (large icon) in the Start menu (the tiles sit on the right side of the panel, with the full list of installed apps on the left), the menu that pops up provides a standard set of options, plus what’s informally known as a jump list of links to various files. Note that this only appears with certain apps.

It's a list of recently opened (or pinned, commonly used) files that you can get quick access to (or jump straight to, hence the name). So, for example, with the Photos app, you’ll see links to recently opened images (or recently viewed web pages in a web browser).

However, that jump list section is no longer appearing for the apps that should have this additional bit of furniture attached to the right-click menu.

Windows Latest observes that the problem affects all of its Windows 10 PCs, and there are a number of reports from people hit by this bug on Microsoft’s Answers help forum and on Reddit, too.

Not everyone is affected, by any means: my Windows 10 PC is fine, and I’ve applied the apparently troublesome April 2025 update. That update does seem to be the problematic piece of the puzzle, though, at least according to the amateur sleuthing going on.

There are a lot of potential fixes floating around here and there, but sadly, none of them appear to work. The only cure seems to be removing the update, which is hardly ideal seeing as it’s only a temporary solution which leaves you short of the latest security patches. However, restoring the PC to before the update was applied does indeed seem to do the trick in bringing back the jump list functionality, suggesting this update is indeed the root cause.

Windows Latest does raise the prospect that this might be an intentional removal by Microsoft, but I don’t think so. If it is, Microsoft had better clarify this, as there are a number of Windows 10 users out there annoyed by the problem.

I feel a more likely suggestion is that Microsoft has perhaps backported some changes from Windows 11, and this has somehow caused unexpected collateral damage in Windows 10.

Hopefully we’ll hear from Microsoft soon enough as to what’s going on, but meanwhile, the workflows of some Windows 10 users are being distinctly messed with by the removal of these convenient shortcuts.

Of course, Windows 10 doesn’t have much left on its shelf life at this point (only six months), although you’ll be able to pay to extend support for another year should you really want to avoid upgrading to Windows 11 (or if you can’t upgrade).


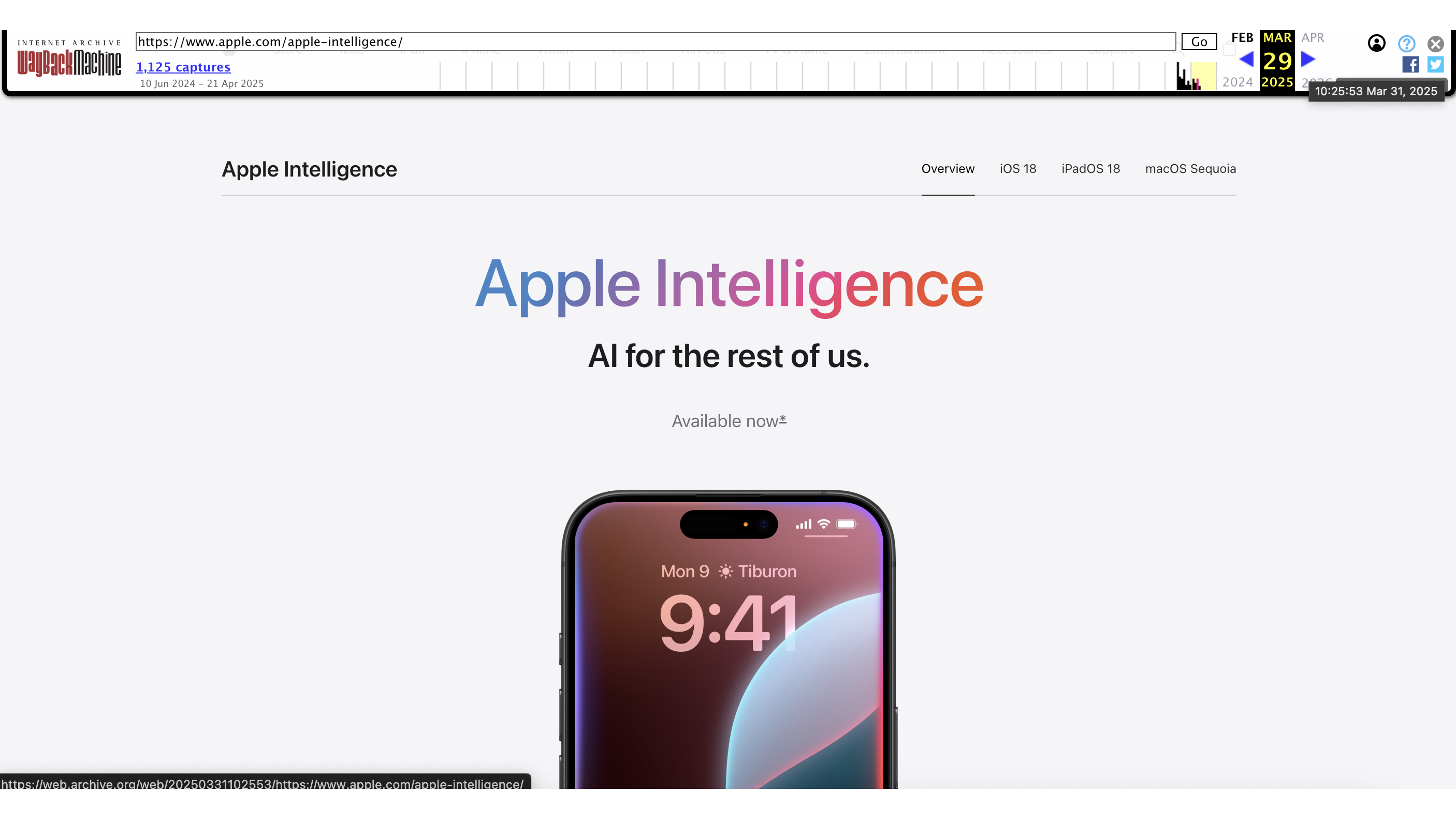

Microsoft finally plays its trump AI card, Recall, in Windows 11 – but for me, it’s completely overshadowed by another new ability for Copilot+ PCs

- Microsoft has finally made Recall available on Windows 11 for Copilot+ PCs – it’s rolling out with the April preview update

- Click to Do is also generally available now, as is an AI-supercharged version of Windows 11’s basic search functionality

- The latter improved search feature is what might interest many Copilot+ PC owners

Microsoft has finally unleashed its Recall feature on the general computing public after multiple misfires and backtracks since the functionality was first revealed almost a year ago now.

In case it escaped your attention – which is unlikely to say the least – Recall is the much talked about AI-powered feature that uses regularly saved screenshots to provide an in-depth, natural language search experience.

Microsoft announced that the general availability of Recall for Windows 11 is happening, with the feature now rolling out, albeit with the caveat that this is for Copilot+ PCs only. That’s because it requires a chunky NPU to beef up the local processing power on hand to ensure the search works responsively enough.

Alongside Recall, the Click to Do feature is also debuting in Windows 11 for Copilot+ PCs, which is a partner ability that offers up context-sensitive AI-powered actions (most recently a new reading coach integration is in testing, a nifty touch).

There’s also a boost for Windows 11 search in general on Copilot+ laptops, which now benefits from a natural language approach, as seen in testing recently. This means you can type a query in the taskbar search box to find images of “dogs on beaches” and any pics of your pets in the sand will be surfaced. (This also ties in cloud-based results with findings on your device locally).

Microsoft further notes that it has expanded Live Captions, its system-wide feature that captions whatever audio or video content you’re playing, to include real-time translation into Chinese (Simplified) from 27 languages.

It’s Recall that’s the big development here, though, and while Microsoft doesn’t say anything new about the capability, the company reiterates some key privacy points we’ve been told about in the past.

Microsoft reminds us: “Recall is an opt-in experience with a rich set of privacy controls to filter content and customize what gets saved for you to find later. We’ve implemented extensive security considerations, such as Windows Hello sign-in, data encryption and isolation in Recall to help keep your data safe and secure.

“Recall data is processed locally on your device, meaning it is not sent to the cloud and is not shared with Microsoft and Microsoft will not share your data with third parties.”

Furthermore, we’re reminded that Recall can be stripped out of your Copilot+ PC completely if you don’t want the feature and are paranoid about even its bare bones being present (disabled) on your PC. Instructions for removing Recall are in this support document, which also goes into depth about how the functionality works.

All of these features are set to be rolled out to Copilot+ PCs starting with the April 2025 preview update, which is imminent (or should be). However, note that for certain regions, release timing may vary. The European Economic Area won’t get these abilities until later in 2025, notably, as Microsoft already told us.

The arrival of Recall is not surprising, because even though it’s been almost a year since Microsoft first unveiled the feature – and rapidly pulled the curtain back over it for some time, after the initial copious amounts of flak were fired at the idea – it was recently spotted in the Release Preview channel for Windows 11. That’s the final hurdle before release, of course, so the presence of Recall there clearly indicated it was close at hand.

I must admit I didn’t think it would be out quite so soon, though, and a relatively rapid progression through this final test phase would seem to suggest that things went well.

That said, Microsoft makes it clear that this is a ‘controlled feature rollout’ for Copilot+ PCs and so Recall will likely be released on a fairly limited basis to begin with. In short, you may not get it for a while, but if you do want it as soon as possible, you need to download the mentioned optional update for April 2025 (known as the non-security preview update). Also ensure you’ve turned on the option to ‘Get the latest updates as soon as they’re available’ (which is in Settings > Windows Update).

Even then, you may have to be patient for some time, as I wouldn’t be surprised if Microsoft was tentative about this rollout to start with. There’s a lot at stake here, after all, in terms of the reputation of Copilot+ PCs.

Arguably, however, the most important piece of the puzzle here isn’t Recall at all, although doubtless Microsoft would say otherwise. For me – if I had a Copilot+ PC (I don’t, so this is all hypothetical, I should make clear) – what I’d be really looking forward to is the souped-up version of Windows 11’s basic search functionality.

Recall? Well, it might be useful, granted, but I still have too many trust issues to be any kind of early adopter, and I suspect I won’t be alone. Click to Do? Meh, it’s a bit of a sideshow to Recall, and while it’s possibly handy, it looks far from earth-shattering overall.

A better general Windows search experience, though? Yes please, sign me up now. Windows 11 search has been regarded as rather shoddy in many ways, and the same is broadly true of searching via the taskbar box in Windows 10. An all-new, more powerful natural language search could really help in this respect, and might be a much better reason for many people to grab a Copilot+ PC than Recall, which, as noted, a good many folks will likely steer clear of.

Microsoft’s own research (add seasoning) suggests this revamped basic Windows 11 search is a considerable step forward. The software giant informs us that on a Copilot+ device, the new Windows search means it “can take up to 70% less time to find an image and copy it to a new folder” compared to the same search and shift process on a Windows 10 PC.

Of course, improved search for all Windows 11 PCs (not just Copilot+ models) would be even better, and hopefully that’s somewhere on the development roadmap for the OS. The catch is that this newly pepped-up search is built around the powerful NPU that Copilot+ laptops require, but that doesn’t stop Microsoft from enhancing Windows search in general for everyone.

Naturally, Microsoft is resting a lot of expectations on Copilot+ PCs, though, noting that it expects the ‘majority’ of PCs sold in the next few years to be these devices. Analyst firms have previously predicted big things for AI PCs, as they’re also known, as well.


Shopify is hiring ChatGPT as your personal shopper, according to a new report

- New code reveals ChatGPT may soon support in-chat purchases via Shopify

- The chat would offer prices, reviews, and embedded checkout through the AI

- The feature could give Shopify merchants access to ChatGPT’s users while making OpenAI an e-commerce platform

When you ask ChatGPT what sneakers are best for trail running, you get an overview of top examples and links to review sites and product catalogs. Soon, though, ChatGPT might offer to let you buy your choice from a range of Shopify merchants directly in the chat, according to unreleased code discovered by Testing Catalog.

Rather than clicking through to different websites, Shopify would populate ChatGPT with the suggested products, complete with price, reviews, shipping details, and, most importantly, a “Buy Now” button.

Clicking it won't send you away from ChatGPT either. You'll just purchase those shoes through ChatGPT, which will be the storefront itself.

At least, that's what the uncovered bits of code describe. The direct integration of Shopify into ChatGPT's interface would make the AI assistant a retail clerk as well as a review compiler. The fact that some of the code is actually live, if hidden, suggests the rollout is coming sooner than you might expect.

For Shopify’s millions of merchants, this could be a huge boon. Right now, most small sellers have to hustle for traffic, investing in SEO, ads, or pleading with mysterious social media algorithms to go viral. ChatGPT, however, could put their products directly in front of the AI chatbot’s more than 800 million users.

And it might lead to more actual sales. After all, it's one thing to stumble upon a jacket on Instagram and follow a link to a site requiring you to find the jacket again and fill in payment details. It's a lot easier to purchase something when you see it appear amid recommendations from ChatGPT, with a buy button there for you to click on without any intermediate steps.

Shop ChatGPT

Of course, OpenAI isn’t doing this in a vacuum. The idea that AI can guide you not just to information, but through decision-making and transactions, is referred to as 'agentic commerce' and is quickly becoming a major competition space for AI developers.

Microsoft has its new Copilot Merchant Program, and Perplexity has its “Buy with Pro” feature for shopping within its AI search engine, to name two others.

Regardless of any competition, this is a move that could set up OpenAI for a new role in 'doing' and not just 'knowing'. Until now, ChatGPT’s value was mostly cerebral: it helped you think, organize, and research. But letting you buy things directly with Shopify's help might give a lot of people a new perspective on what ChatGPT is for.

OpenAI has already experimented with the Operator agent to browse the web on your behalf. This just extends its power into transactions as well. ChatGPT won't just suggest things for you to do; it will carry out those tasks for you.

AI-assisted shopping won't put an end to shopping websites, but its promise is that it can cut through the noise, learn your preferences, and serve up products tailored to you. That won't save you from buyer's remorse, though; it just gets you there faster.

Tesla Profits Drop 71% Amid Backlash to Elon Musk’s Role Under Trump

The carmaker reported the sharp decline in quarterly earnings after its brand suffered because of its chief executive’s role in the Trump administration.

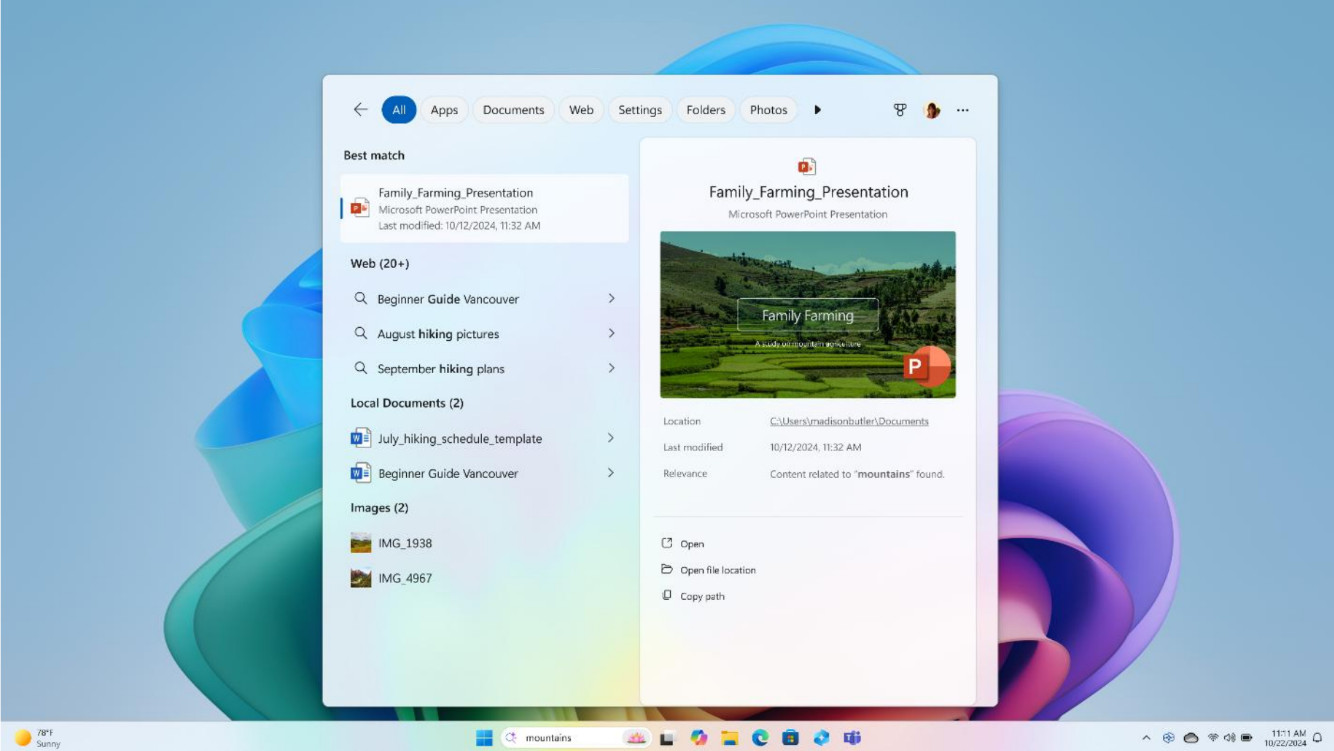

Apple removed 'Available Now' from the Apple Intelligence webpage, but it may not have been Apple's choice

- Apple has removed "Available Now" from its Apple Intelligence webpage

- The change comes after the BBB's National Advertising Division recommended it clarify or remove availability details

- The issue is around the language of when Apple Intelligence features launched and the wait for forthcoming ones

Apple has long said that there is a long road ahead for Apple Intelligence, but it’s been more of a long-winded road since Apple's AI was first announced at WWDC 2024. Earlier in 2025, the promised AI-powered Siri features were pushed back to the ‘coming year,’ which is pretty vague.

Now, Apple has made a change to its Apple Intelligence landing page, dropping the ‘Available Now’ tagline from under “AI for the rest of us.” Surprisingly, the Cupertino-based tech giant doesn't seem to have made the decision to remove the message itself, but instead was asked by the Better Business Bureau's National Programs’ National Advertising Division (NAD).

In a press release, the NAD recommends “that Apple Inc. modify or discontinue advertising claims regarding the availability of certain features associated with the launch of its AI-powered Apple Intelligence tool in the U.S.” It seems the page didn’t make clear enough that some launch features, including the new Siri, still weren’t available.

The NAD states that the Apple Intelligence landing pages made it seem like Apple Intelligence features, including Priority Notifications, Image Playground, Image Wand, and ChatGPT integration as well as Genmoji, “were available at the launch of the iPhone 16 and iPhone 16 Pro.”

However, Apple Intelligence didn’t begin its launch until iOS 18.2, which was released after the new iPhones. NAD’s argument states that the disclosures, presented as “footnotes” or “small print disclosures,” were not clear enough.

The BBB’s NAD also had similar thoughts about Apple displaying the forthcoming Siri features, such as on-screen awareness and personal content, on the same page as the “Available Now” heading.

The NAD even connected with Apple, who informed them that “Siri features would not be available on the original timeline” and that the corresponding language was updated. This evidently happened around the same time as Apple pulled one of its advertisements featuring the all-new Siri.

Apple, in the release, says “While we disagree with the NAD’s findings related to features that are available to users now, we appreciate the opportunity to work with them and will follow their recommendations.” And it’s clear the recommendations were taken to heart, as “Available Now,” according to snapshots viewed on the Wayback Machine by TechRadar, was removed on March 29, 2025.

That’s well before this release from the National Advertising Division asking Apple to stop or modify its language, but also after the tech giant confirmed the delay to the AI-infused Siri on March 7, 2025.

Either way, it’s clear Apple is getting its ducks in a row, and it’s good to be clearer with consumers. TechRadar has reached out to Apple asking for further comment, and we'll update this when and if we hear back.

At Meta Trial, Instagram Co-Founder Says Startup Was Denied Resources

Kevin Systrom said during testimony in a landmark antitrust trial that he believed Mark Zuckerberg, Meta’s chief executive, viewed Instagram as a threat.

ChatGPT crosses a new AI threshold by beating the Turing test

- When ChatGPT uses the GPT-4.5 model, it can pass the Turing Test by fooling most people into thinking it's human

- Nearly three-quarters of people in a study believed the AI was human during a five-minute conversation

- ChatGPT isn't conscious or self-aware, though it raises questions around how to define intelligence

Artificial intelligence sounds pretty human to a lot of people, but usually, you can tell pretty quickly when you're engaging with an AI model. However, that may change as OpenAI's new GPT-4.5 model passed the Turing Test by fooling people into thinking it was a human over the course of a five-minute conversation. Not just a few people, but 73% of those participating in a University of California, San Diego study.

In fact, GPT-4.5 outperformed some of the actual human participants, who were accused of being AI in the blind test. That the AI did such a good impression of a human being that it seemed more human than actual humans says a lot, either about the brilliance of the machine or about just how awkward humans can be.

Participants sat down for two back-to-back conversations with a human and a chatbot, not knowing which was which, and had to identify the AI afterward. To help GPT-4.5 succeed, the model had been given a detailed personality to mimic in a series of prompts. It was told to act like a young, slightly awkward, but internet-savvy introvert with a streak of dry humor.

With that little nudge toward humanity, GPT-4.5 became surprisingly convincing. Of course, as soon as the prompts were stripped away and the AI went back to a blank slate personality and history, the illusion collapsed. Suddenly, GPT-4.5 could only fool 36% of those studied. That sudden nosedive tells us something critical: this isn't a mind waking up. It’s a language model playing a part. And when it forgets its character sheet, it’s just another autocomplete.

Cleverness is not consciousness

The result is historic, no doubt. Alan Turing's proposal that a machine capable of conversing well enough to be mistaken for a human might therefore have human intelligence has been debated ever since he introduced it in 1950. Philosophers and engineers have grappled with the Turing Test and its implications, but suddenly, theory is a lot more real.

Turing didn't equate passing his test with proof of consciousness or self-awareness. That's not what the Turing Test really measures. Nailing the vibes of human conversation is huge, and the way GPT-4.5 evoked actual human interaction is impressive, right down to how it offered mildly embarrassing anecdotes. But if you think intelligence should include actual self-reflection and emotional connections, then you're probably not worried about the AI infiltration of humanity just yet.

GPT-4.5 doesn’t feel nervous before it speaks. It doesn’t care if it fooled you. The model is not proud of passing the test, since it doesn’t even know what a test is. It only "knows" things the way a dictionary knows the definition of words. The model is simply a black box of probabilities wrapped in a cozy linguistic sweater that makes you feel at ease.

The researchers made the same point about GPT-4.5 not being conscious. It’s performing, not perceiving. But performances, as we all know, can be powerful. We cry at movies. We fall in love with fictional characters. If a chatbot delivers a convincing enough act, our brains are more than happy to fill in the rest. No wonder 25% of Gen Z now believe AI is already self-aware.

There's a place for debate around this, of course. If a machine talks like a person, does it matter if it isn’t one? And regardless of the deeper philosophical implications, an AI that can fool that many people could be a menace in unethical hands. What happens when the smooth-talking customer support rep isn’t a harried intern in Tulsa, but an AI trained to sound disarmingly helpful, specifically to people like you, so that you'll pay for a subscription upgrade?

Maybe the best way to think of it for now is like a dog in a suit walking on its hind legs. Sure, it might look like a little entrepreneur on the way to the office, but it's only human training and perception that gives that impression. It's not a natural look or behavior, and doesn't mean banks will be handing out business loans to canines any time soon. The trick is impressive, but it's still just a trick.

There's no need to wait for Google's Android XR smart glasses – here are two amazing AR glasses I’ve tested that you can try now

Google and Samsung took the world by storm with their surprise Android XR glasses announcement – which was complete with an impressive prototype demonstration at a TED 2025 event – but you don’t need to wait until 2026 (which is when the glasses are rumored to be launching) to try some excellent XR smart glasses for yourself.

In fact, we’ve just updated our best smart glasses guide with two fantastic new options that you can pick up right now. This includes a new entry in our number one slot for the best AR specs you can buy (the Xreal One glasses) and a new pick in our best affordable smart glasses slot called the RayNeo Air 3S glasses.

Admittedly, these specs aren’t quite what Google and Samsung promise the Android XR glasses will be. The Xreal and RayNeo glasses are more for entertainment (you connect them to a compatible phone, laptop, or console to enjoy your film, TV, or gaming content on a giant virtual screen wherever you are), and they’re damn good at what they do.

What’s more they’re a lot less pricey than the Android XR glasses are likely to be (based on pricing rumors for the similar Ray-Ban Meta AR glasses), so if you’re looking to dip your toes into the world of AR before it takes off then these two glasses will serve you well.

The best of the best

So, let’s start with the Xreal One glasses: our new number one pick, and easily the best AR smart glasses around.

As I mentioned before these smart specs are perfect for entertainment – both on-the-go and at-home (say in a cramped apartment, or when you want to relax in bed). You simply connect them to a compatible device via their USB-C cable and you watch your favorite show, film, or game play out on a giant virtual screen that functions like your own private cinema.

This feature appears on other AR smart glasses, but the Xreal One experience is on another level thanks to the full-HD 120Hz OLED screens, which boast up to 600 nits of brightness. This allows the glasses to produce vibrant images with superb contrast for watching dark scenes.

Image quality is further improved by electrochromically-dimmable lenses – lenses which can be darkened or brightened via electrical input that you can toggle with a switch on the glasses.

At their clearest the lenses allow you to easily see what’s going on around you, while at their darkest the lenses serve as the perfect backdrop to whatever you’re watching – blocking out most errant external light that would ruin their near-perfect picture.

Beyond their picture, these glasses are also impressive thanks to their speakers which are the best I’ve heard from smart glasses.

With sound tuned by Bose (creators of some of the best headphones around) the Xreal One glasses can deliver full-bodied audio across highs, midtones and bass.

You will find that a separate pair of headphones can still elevate your audio experience (especially because headphones leak less sound than these glasses do); however, these are the first smart glasses I’ve tested where a pair of cans feels like an optional add-on rather than an essential accessory.

Couple all that with a superb design that’s both functional and fairly fashionable, and you’ve got a stellar pair of AR glasses. Things get even better if you snag a pair with an Xreal Beam Pro (an Android spatial computer that turns your glasses into a complete package, rather than a phone accessory).

Plus, it only looks set to get better with the upcoming Xreal Eye add-on which will allow the glasses to take first-person photos and videos, and which may help unlock some new spatial computing powers, if we’re lucky.

The best on a budget (but still awesome)

At $499 / £449 the Xreal One glasses are a little pricey. If you’re on a budget, you’ll want to try the RayNeo Air 3S glasses instead.

These smart glasses cost just $269 (around £205 / AU$435). For that you’ll get a 650-nit full-HD micro-OLED setup that produces great images, plus audio that doesn’t necessitate a pair of headphones.

They’re a lot like the Xreal One glasses, but come with a few downgrades, as you might expect.

The audio performance isn’t quite as impressive. It’s fine without headphones but isn’t quite as rich as Xreal’s alternative, and it’s more leaky (so if you use these while travelling your fellow passengers may hear what’s going on too).

What’s more, while on paper their image quality should be better than the Xreal glasses’, the overall result isn’t quite as vibrant, because the reflective lenses these glasses use as a backdrop aren’t as effective as the electrochromic dimming used by the Xreal Ones.

This allows more light to sneak through the backdrop leading to image clarity issues (such as the picture appearing washed out) if you’re trying to use the glasses in a brighter environment.

They also lack any kind of camera add-on option if that’s a feature you care about.

But these glasses are still very impressive, as are the specs, and I think most people should buy them because their value proposition is simply so fantastic.

Whether you pick the Xreal One glasses or the RayNeo Air 3S smart glasses, you’ll be in for a treat, and if you love the taste of AR they provide, you’ll have a better idea of whether the Android XR and other AR glasses will be worth your time.


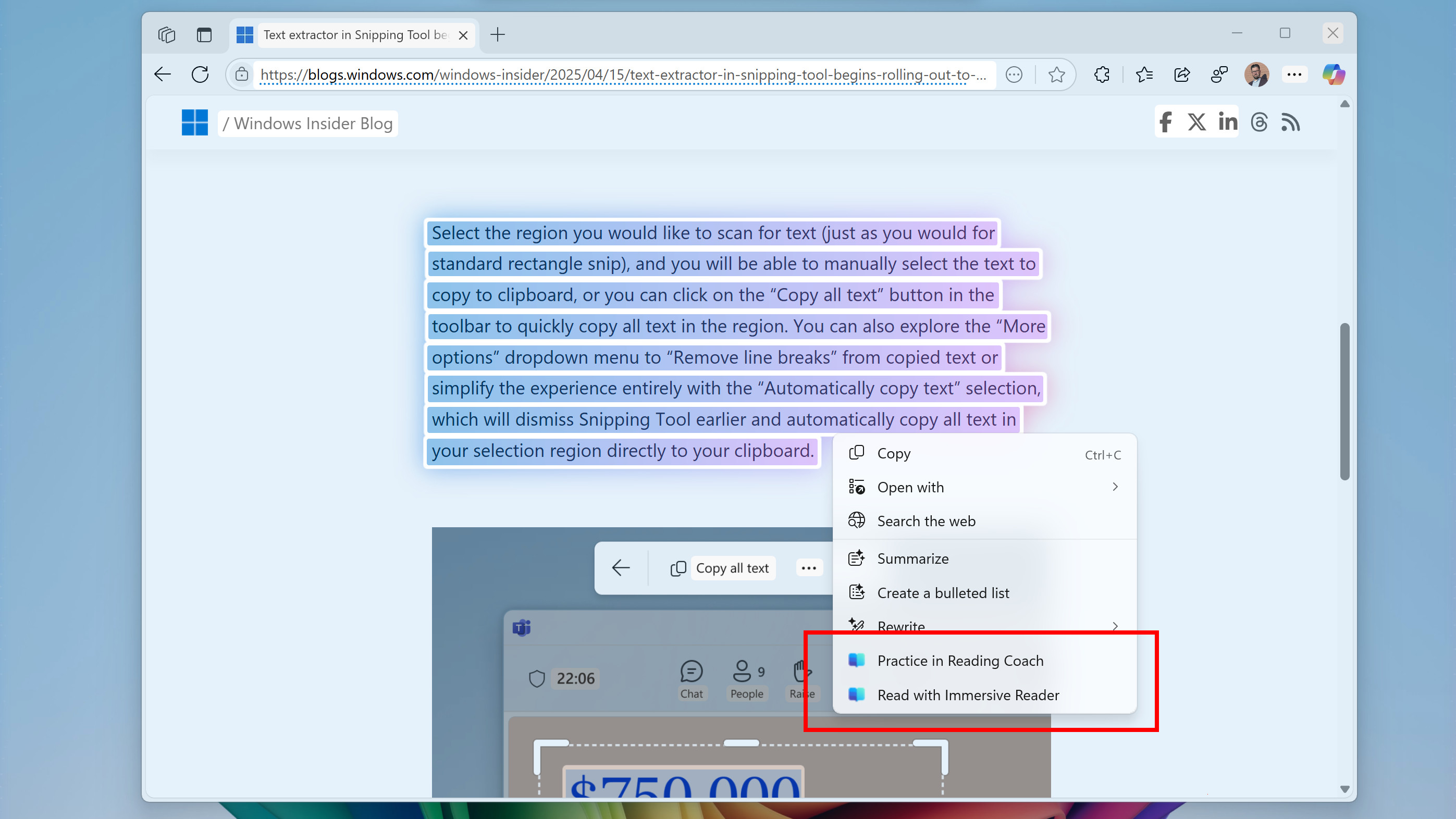

Microsoft is working on some seriously exciting Windows 11 improvements – but not everyone will get them

- Windows 11 has a new preview build in the Beta channel

- It offers new Click to Do features for Copilot+ PCs, including Reading Coach integration

- Search has also been pepped up with AI, and Voice Access has got a handy new addition too

Windows 11’s latest preview version just arrived packing improved search functionality and some impressive new capabilities for accessibility, including the integration of Microsoft’s ‘Reading Coach’ app on certain PCs.

This is preview build 26120.3872 in the Beta channel, and some of the fresh additions are exclusive to Copilot+ PCs, specifically those with Snapdragon (Arm-based) chips.

So, first up in this category is the integration of Reading Coach with Click to Do. To recap, Click to Do provides AI-powered, context-sensitive actions (it was brought in as the partner feature to Recall on Copilot+ PCs), and Reading Coach became available for free at the start of 2024.

The latter is an app you can download from the Microsoft Store to practice your reading skills and pronunciation, and Reading Coach can now be chosen directly from the Click to Do context menu, so you can work on any selected piece of text. (You’ll need the coaching app installed to do this, of course.)

Also new for Click to Do (and Copilot+ PCs) is a ‘Read with Immersive Reader’ option, a focused reading mode designed for people with dyslexia and dysgraphia.

This allows users to adjust the text size and spacing, font, and background theme to best suit their needs, as well as having a picture dictionary option that Microsoft notes “provides visual representations of unfamiliar words for instant understanding.” You can also elect to have text read aloud and split into syllables if required.

Another neat feature for Copilot+ PCs, albeit only in the European Economic Area to begin with, is the ability to find photos saved in the cloud (OneDrive) via the search box in the Windows 11 taskbar. Again, this is AI-powered, so you can use natural language to search for images in OneDrive (such as photos of “Halloween costumes”). Both local (on the device) and cloud-based photos will be displayed in the taskbar search results.

All of the above are now rolling out in testing to Snapdragon-powered Copilot+ PCs, but devices with AMD and Intel CPUs will also be covered eventually.

A further noteworthy introduction here – for all PCs this time – is that Voice Access now grants you the power to add your own words to its dictionary. So, if there’s a word that the system is having difficulty picking up when you say it, you can add a custom dictionary entry and hopefully the next time you use it during dictation, Voice Access will correctly recognize the word.

There are a bunch of other tweaks and refinements in this new preview version, all of which are covered in Microsoft’s blog post on the new Beta build.

It’s good to see Microsoft’s continued efforts to improve Windows 11 in terms of accessibility and learning, even if some of the core introductions here won’t be piped through to most folks, as they won’t have a Copilot+ PC. What’s also clear is that Microsoft is giving devices with Snapdragon processors priority on an ongoing basis, and that’s fine, as long as the same powers come to all Copilot+ PCs eventually (which they have done thus far, and there’s no reason why they shouldn’t).

The Voice Access addition is a very handy one, although I’m surprised it took Microsoft this long to implement it. I was previously a heavy user of Nuance’s Dragon speech recognition software (my RSI has long since been cured, thanks in part to taking a break from typing by using it), and it offered this functionality. As Windows 11’s Voice Access is essentially built on the same tech (Microsoft bought Nuance back in 2021), it’s taken Microsoft a while to incorporate what I felt was an important feature.

As ever, though, better late than never, and I certainly can’t complain about Voice Access being free, or at least free in terms of being bundled in with Windows 11.


AI took a huge leap in IQ, and now a quarter of Gen Z thinks AI is conscious

- ChatGPT's o3 model scored a 136 on the Mensa IQ test and a 116 on a custom offline test, outperforming most humans

- A new survey found 25% of Gen Z believe AI is already conscious, and over half think it will be soon

- The change in IQ and belief in AI consciousness has happened extremely quickly

OpenAI’s new ChatGPT model, dubbed o3, just scored an IQ of 136 on the Norway Mensa test – higher than 98% of humanity, not bad for a glorified autocomplete. In less than a year, AI models have become enormously more complex, flexible, and, in some ways, intelligent.

The jump is so steep that it may be causing some to think that AI has become Skynet. According to a new EduBirdie survey, 25% of Gen Z now believe AI is already self-aware, and more than half think it’s just a matter of time before their chatbot becomes sentient and possibly demands voting rights.

There’s some context to consider when it comes to the IQ test. The Norway Mensa test is public, which means it’s technically possible that its questions or answers ended up in the model’s training data. So, researchers at MaximumTruth.org created a new IQ test that is entirely offline and out of reach of training data.

On that test, which was designed to be equivalent in difficulty to the Mensa version, the o3 model scored a 116. That’s still high.

It puts o3 in the top 15% of human intelligence, hovering somewhere between “sharp grad student” and “annoyingly clever trivia night regular.” No feelings. No consciousness. But logic? It’s got that in spades.

Compare that to last year, when no AI tested above 90 on the same scale. In May of last year, the best AI struggled with rotating triangles. Now, o3 is parked comfortably to the right of the bell curve among the brightest of humans.

And that curve is crowded now. Claude has inched up. Gemini’s scored in the 90s. Even GPT-4o, the baseline default model for ChatGPT, is only a few IQ points below o3.

Even so, it’s not just that these AIs are getting smarter. It’s that they’re learning fast. They’re improving like software does, not like humans do. And for a generation raised on software, that’s an unsettling kind of growth.

I do not think consciousness means what you think it means

For those raised in a world navigated by Google, with a Siri in their pocket and an Alexa on the shelf, AI means something different than its strictest definition.

If you came of age during a pandemic when most conversations were mediated through screens, an AI companion probably doesn't feel very different from a Zoom class. So it’s maybe not a shock that, according to EduBirdie, nearly 70% of Gen Zers say “please” and “thank you” when talking to AI.

Two-thirds of them use AI regularly for work communication, and 40% use it to write emails. A quarter use it to finesse awkward Slack replies, and nearly 20% have shared sensitive workplace information with it, such as contracts and colleagues’ personal details.

Many of those surveyed rely on AI for various social situations, ranging from asking for days off to simply saying no. One in eight already talk to AI about workplace drama, and one in six have used AI as a therapist.

If you trust AI that much, or find it engaging enough to treat as a friend (26%) or even a romantic partner (6%), then the idea that the AI is conscious seems less extreme. The more time you spend treating something like a person, the more it starts to feel like one. It answers questions, remembers things, and even mimics empathy. And now that it’s getting demonstrably smarter, philosophical questions naturally follow.

But intelligence is not the same thing as consciousness. IQ scores don’t mean self-awareness. You can score a perfect 160 on a logic test and still be a toaster, if your circuits are wired that way. AI can only think in the sense that it can solve problems using programmed reasoning. You might say that I'm no different, just with meat, not circuits. But that would hurt my feelings, something you don't have to worry about with any current AI product.

Maybe that will change someday, even someday soon. I doubt it, but I'm open to being proven wrong. I get the willingness to suspend disbelief with AI. It might be easier to believe that your AI assistant really understands you when you’re pouring your heart out at 3 a.m. and getting supportive, helpful responses rather than dwelling on its origin as a predictive language model trained on the internet's collective oversharing.

Maybe we’re on the brink of genuine self-aware artificial intelligence, but maybe we’re just anthropomorphizing really good calculators. Either way, don't tell secrets to an AI that you don't want used to train a more advanced model.

3 things we learned from this interview with Google DeepMind's CEO, and why Astra could be the key to great AI smart glasses

Google has been hyping up its Project Astra as the next generation of AI for months. That set some high expectations when 60 Minutes sent Scott Pelley to experiment with Project Astra tools provided by Google DeepMind.

He was impressed with how articulate, observant, and insightful the AI turned out to be throughout his testing, particularly when the AI not only recognized Edward Hopper’s moody painting "Automat," but also read into the woman’s body language and spun a fictional vignette about her life.

All this through a pair of smart glasses that barely seemed different from a pair without AI built in. The glasses serve as a delivery system for an AI that sees, hears, and can understand the world around you. That could set the stage for a new smart wearables race, but that's just one of many things we learned during the segment about Project Astra and Google's plans for AI.

Astra's understanding

Of course, we have to begin with what we now know about Astra. Firstly, the AI assistant continuously processes video and audio from connected cameras and microphones in its surroundings. The AI doesn’t just identify objects or transcribe text; it also purports to spot and explain emotional tone, extrapolate context, and carry on a conversation about the topic, even when you pause for thought or talk to someone else.

During the demo, Pelley asked Astra what he was looking at. It instantly identified Coal Drops Yard, a retail complex in King’s Cross, and offered background information without missing a beat. When shown a painting, it didn’t stop at "that’s a woman in a cafe." It said she looked "contemplative." And when nudged, it gave her a name and a backstory.

According to DeepMind CEO Demis Hassabis, the assistant’s real-world understanding is advancing even faster than he expected; he noted that it is better at making sense of the physical world than the engineers thought it would be at this stage.

Veo 2 views

But Astra isn’t just passively watching. DeepMind has also been busy teaching AI how to generate photorealistic imagery and video. The engineers described how two years ago, their video models struggled with understanding that legs are attached to dogs. Now, they showcased how Veo 2 can conjure a flying dog with flapping wings.

The implications for visual storytelling, filmmaking, advertising, and yes, augmented reality glasses, are profound. Imagine your glasses not only telling you what building you're looking at, but also visualizing what it looked like a century ago, rendered in high definition and seamlessly integrated into the present view.

Genie 2

And then there’s Genie 2, DeepMind’s new world-modeling system. If Astra understands the world as it exists, Genie builds worlds that don’t. It takes a still image and turns it into an explorable environment visible through the smart glasses.

Walk forward, and Genie invents what lies around the corner. Turn left, and it populates the unseen walls. During the demo, a waterfall photo turned into a playable video game level, dynamically generated as Pelley explored.

DeepMind is already using Genie-generated spaces to train other AIs, which learn to navigate, in real time, a world made up by another AI. One system dreams, another learns. That kind of simulation loop has huge implications for robotics.

In the real world, robots have to fumble their way through trial and error. But in a synthetic world, they can train endlessly without breaking furniture or risking lawsuits.

Astra eyes

Google is trying to get Astra-style perception into your hands (or onto your face) as fast as possible, even if it means giving it away.

Just weeks after launching Gemini’s screen-sharing and live camera features as a premium perk, Google reversed course and made them free for all Android users. That wasn’t a random act of generosity. By getting as many people as possible to point their cameras at the world and chat with Gemini, Google gets a flood of training data and real-time user feedback.

There is already a small group of people wearing Astra-powered glasses out in the world. The hardware reportedly uses micro-LED displays to project captions into one eye and delivers audio through tiny directional speakers near the temples. Compared to the awkward sci-fi visor of the original Google Glass, this feels like a step forward.

Sure, there are issues with privacy, latency, battery life, and the not-so-small question of whether society is ready for people walking around with semi-omniscient glasses without mocking them mercilessly.

Whether or not Google can make that magic feel ethical, non-invasive, and stylish enough to go mainstream is still up in the air. But that sense of 2025 as the year smart glasses go mainstream seems more accurate than ever.

FTC Sues Uber Over Billing for Its Uber One Subscription Service

The suit is an indication that the commission’s close scrutiny of the tech industry will continue in the Trump administration.

New AI Chibi figure trend may be the cutest one yet, and we're all doomed to waste time and energy making these things

The best AI generation trends are the cute ones, especially those that transform us into our favorite characters, or at least facsimiles of them. ChatGPT 4o's ability to generate realistic-looking memes and figures is now almost unmatched, and when a fresh trend appears, it's hard to resist joining in on the fun. The latest one is based on a popular set of anime-style toys called Chibi figures.

Chibi, which is Japanese slang for small or short, describes tiny, pocketable figures with exaggerated features like compact bodies, big heads, and large eyes. They are adorable and quite popular online. Think of them as tiny cousins of Funko Pop! figures.

Real Chibi figures can run you anywhere from $9.99 to well over $100. Or, you can create one in ChatGPT.

What's interesting about this prompt is that it relies heavily on the source image and doesn't force you to provide additional context. The goal is a realistic Chibi character that resembles the original photo, and to have it appear inside a plastic capsule.

The prompt describes that container as a "Gashapon," which is what they're called when they come from a Bandai vending machine. Bandai did not invent this kind of capsule, of course. Tiny toys in little plastic containers that open up into two halves have been on sale in coin-operated vending machines for over 50 years.

If you want to create a Chibi figure, you just need a decent photo of yourself or someone else. It should be clear, sharp, in color, and at least show their whole face. The effect will be better if it also shows part of their outfit.

Here's the prompt I used in ChatGPT Plus 4o:

Generate a portrait-oriented image of a realistic, full-glass gashapon capsule being held between two fingers.

Inside the capsule is a Chibi-style, full-figure miniature version of the person in the uploaded photo.

The Chibi figure should:

- Closely resemble the person in the photo (face, hairstyle, etc.)

- Wear the same outfit as seen in the uploaded photo

- Be in a pose inspired by the chosen theme

Since the final ChatGPT Chibi figure image has no recognizable background or accessories, the result is all about how the character looks and dresses.

I made a few characters. One based on a photo of me, another based on an image of Brad Pitt, and, finally, one based on one of my heroes, Mr. Rogers.

These Chibi figures would do well on the Crunchyroll Mini and Chibi store, but I must admit that they lean heavily on cuteness and not so much on verisimilitude.

Even though none of them look quite like the source, the Mr. Rogers one is my favorite.

Remember that AI image generation is not without cost. First, you are uploading your photo to OpenAI's server, and there's no guarantee that the system is not learning from it and using it to train future models.

AI image generation also consumes electricity on the server side, both to train models and to process prompts. Perhaps you can commit to planting a tree or two after you've generated a half dozen or more Chibi AI figures.

U.S. Asks Judge to Break Up Google

The Justice Department said that the best way to address the tech company’s monopoly in internet search was to force it to sell Chrome, among other measures.

Canadians Confront News Void on Facebook and Instagram as Election Nears

After Meta blocked news from its platforms in Canada, hyperpartisan and misleading content from popular right-wing Facebook pages such as Canada Proud has filled the gap.

Will a Federal Judge Break Up Google?

On Monday, the tech giant and the U.S. government face off in court over how to fix the company’s online search monopoly. The outcome could alter Google and Silicon Valley.

‘Black Mirror’ Showed Us a Future. Some of It Is Here Now.

The long-running tech drama always felt as if it took place in a dystopian near future. How much of that future has come to pass?

Mike Wood, Whose LeapFrog Toys Taught a Generation, Dies at 72

His LeapPad tablets, which helped children read, found their way into tens of millions of homes beginning in 1999.