
Feed aggregator

Saying ‘Thank You’ to Chat GPT Is Costly. Should You Do It Anyway?

Adding words to our chatbot requests uses more electricity. But some fear the cost of not saying please or thank you could be higher.

Should We Start Taking the Welfare of A.I. Seriously?

As artificial intelligence systems become smarter, one A.I. company is trying to figure out what to do if they become conscious.

The Cybercriminals Who Organized a $243 Million Crypto Heist

How luxury cars, $500,000 bar tabs and a mysterious kidnapping attempt helped investigators unravel the heist of a lifetime.

OpenAI just gave ChatGPT Plus a massive boost with generous new usage limits

- New usage limits raise the number of messages you can send to o3 and o4-mini

- You get 100 messages a week for o3 and 300 a day for o4-mini

- You can see the date that your usage limits will reset

OpenAI has updated ChatGPT's usage limits for Plus users, meaning you now get more time with its latest models, o3 and o4-mini.

With a ChatGPT Plus, Team or Enterprise account, you now have access to 100 messages a week with the o3 model and a staggering 300 messages a day with the o4-mini model. You also get 100 messages a day with the programming-focused o4-mini-high.

OpenAI describe o3 and o4-mini as their “smartest most capable models yet”, and emphasise that they have “full tool access”, which means they can agentically use all of ChatGPT’s tools.

These tools include web search, analyzing files with Python, deep reasoning and what OpenAI calls “reasoning with images”, meaning the models can analyze and even generate images as part of their reasoning process.

In our testing we’ve been most impressed by the speed of both new models.

The new usage limits effectively double the old rate limits for the o3 and o4-mini models, and mean you can spend more time using them, provided you are a Plus subscriber, which costs $20 (£16 / AU$30) a month.

There is no way to see how many messages you have left in your current week while using ChatGPT Plus. However, you can check the date that your weekly usage limit resets at any time by highlighting the model in the model picker drop-down.

When you hit your limit you’ll no longer be able to select the model that you’ve maxed-out on in the model drop-down menu.

What's next for ChatGPT?

The next release from OpenAI will be o3-pro. In a message on the updated usage limits page, OpenAI say “We expect to release OpenAI o3‑pro in a few weeks with full tool support. For now, Pro users can still access o1‑pro.”

While it won’t affect people using ChatGPT.com, the usage limits also apply to developers using the API, which has recently had image generation capabilities added.

Your Ray-Ban Meta smart glasses just became an even better travel companion thanks to live translation

- The Ray-Ban Meta smart glasses are getting two major AI updates

- AI camera features are rolling out to countries in Europe

- Live translation tools are rolling out everywhere the glasses are available

Following the rollout of enhanced Meta AI features to the UK earlier this month, Meta has announced yet another update to its Ray-Ban smart glasses – and it's one that will bring them closer to being the ultimate tourist gadget.

That’s because two features are rolling out more widely: look and ask, and live translation.

Thanks to their cameras, your glasses (when prompted) can snap a picture and use that as context for a question you ask them, like “Hey Meta, what's the name of that flower?" or “Hey Meta, what can you tell me about this landmark?”

This tool was available in the UK and US, but it’s now arriving in countries in Europe, including Italy, France, Spain, Norway and Germany – you can check out the full list of supported countries on Meta’s website.

On a recent trip to Italy I used my glasses to help me learn more about Pompeii and other historical sites as I travelled, though it could sometimes be a challenge to get the glasses to understand what landmark I was talking about because my pronunciation wasn’t stellar. I also couldn’t find out more about a landmark until I learnt what it was called, so that I could say its name to the glasses.

Being able to snap a picture instead and have the glasses recognize landmarks for me would have made the specs so much more useful as a tour guide, so I’m excited to give them a whirl on my next European holiday.

The other tool everyone can get excited for is live translation, which is finally rolling out to all countries that support the glasses (so the US, UK, Australia, and those European countries getting look and ask).

Your smart specs will be able to translate between English, French, Italian, and Spanish.

Best of all, you won’t need a Wi-Fi connection, provided you’ve downloaded the necessary language pack.

What’s more, you don't need to worry about conversations being one-sided. You’ll hear the translation through the glasses, but the person you’re talking to can read what you’re saying in the Meta View app on your phone.

Outside of face-to-face conversations I can see this tool being super handy for situations where you don’t have time to get your phone out, for example to help you understand public transport announcements.

Along with the glasses’ sign-translation abilities, these new features will make your specs even more of an essential travel companion – I certainly won't be leaving them at home the next time I take a vacation.

Mystery of the vanishing seaplane: why did Windows 11 24H2 cause the vehicle to disappear from Grand Theft Auto: San Andreas?

- Windows 11 24H2 caused a plane to completely disappear from GTA: San Andreas

- A lengthy investigation determined it was a coding error in GTA, and not Microsoft’s fault

- The error in the plane’s Z axis positioning, which was finally exposed by the 24H2 update, effectively shot it into space

A two-decade-old game has produced a marked demonstration of just how strange the world of bugs can be, after Windows 11 24H2 appeared to break something in Grand Theft Auto: San Andreas – though I should note upfront that this wasn’t Microsoft’s fault in the end.

Neowin picked up on this affair, which was explained at length – in very fine detail, in fact – by a developer called Silent (who’s responsible for SilentPatch, a project dedicated to fixing up old PC games, including GTA outings, so they work on modern systems).

Grand Theft Auto: San Andreas was released way back in 2004, and the game has a seaplane called the Skimmer. What players of this GTA classic found was that after installing the 24H2 update for Windows 11, the Skimmer had suddenly vanished from San Andreas.

The connection between applying 24H2 and the seaplane’s disappearance from its usual spot down at the docks wasn’t immediately made, but the dots were connected eventually.

Then Silent stepped in to investigate and ended up diving down an incredibly deep programming rabbit hole to uncover how this happened.

As mentioned, the developer goes into way too much depth for the average person to care about, but to sum up, they found that even when they force-spawned the Skimmer plane in the game world, it immediately shot up miles into the sky.

The issue was eventually nailed down to the ‘bounding box’ for the vehicle – the invisible box defining the boundaries of the plane model – which had an incorrect calculation for the Z axis (height) in its configuration file.

For various reasons and intricacies that we needn’t go into, this error was not a problem with versions of Windows before the 24H2 spin rolled around, purely by luck I might add. Essentially, the game read the positioning values of the vehicle listed before the Skimmer (a van), and this worked okay (just about, even though it wasn’t quite correct).

But Windows 11 24H2 changed how the code of Grand Theft Auto: San Andreas behaved, so it no longer read the values of that van. With the error now exposed, the plane effectively received a (literally) astronomical Z value, and it was no longer visible in the game because it had been shot up into space.
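To make the mechanism a little more concrete, here is a toy Python sketch of how a parser that reuses a scratch value between entries can hand one vehicle’s leftover data to the next. It is not Rockstar’s code and greatly simplifies Silent’s far more detailed explanation: the "name z" line format, the `load_z_values` and `load_z_values_fixed` functions, the `slot` dictionary standing in for reused memory, the numbers 1.5 and 3.0e38, and the assumption that the Skimmer’s entry simply lacks a usable value are all hypothetical.

```python
from typing import Dict, List


def load_z_values(lines: List[str], slot: Dict[str, float]) -> Dict[str, float]:
    """Read hypothetical "name z" lines into a {name: z} map (buggy version).

    `slot` stands in for a piece of memory reused from one line to the next.
    When a line omits its Z value, the slot is never overwritten, so the
    vehicle silently inherits whatever value was left there before.
    """
    z_values: Dict[str, float] = {}
    for line in lines:
        parts = line.split()
        name = parts[0]
        if len(parts) > 1:
            slot["z"] = float(parts[1])
        # Deliberate bug: no default when the Z field is missing.
        z_values[name] = slot["z"]
    return z_values


def load_z_values_fixed(lines: List[str], default_z: float = 0.0) -> Dict[str, float]:
    """Same parser, but a missing Z value falls back to an explicit default."""
    z_values: Dict[str, float] = {}
    for line in lines:
        parts = line.split()
        z_values[parts[0]] = float(parts[1]) if len(parts) > 1 else default_z
    return z_values


# "Before 24H2": the leftover value happens to be the van's, hiding the bug.
print(load_z_values(["van 1.5", "skimmer"], {"z": 0.0}))  # {'van': 1.5, 'skimmer': 1.5}
# "After 24H2": unrelated leftover data is in the slot when the Skimmer is read.
print(load_z_values(["skimmer"], {"z": 3.0e38}))  # {'skimmer': 3e+38}
# With an explicit default, the result no longer depends on leftovers.
print(load_z_values_fixed(["van 1.5", "skimmer"]))  # {'van': 1.5, 'skimmer': 0.0}
```

The general lesson the fixed version illustrates is to give every field an explicit default rather than relying on whatever happens to be left over, so the result cannot change when something unrelated, such as an OS update, alters what the leftover data looks like.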

And so the mystery of the disappearing seaplane was solved – the Skimmer, in fact, was orbiting a distant galaxy somewhere far, far away from San Andreas. (I feel a spin-off mash-up game coming on).

This is a rather fascinating little episode that shows how tiny bugs can creep in, and by chance, go completely unnoticed for 21 years until a completely unrelated operating system update changes something that throws a wrench into the coding works.

It also serves to underline a couple of other points. Firstly, that there’s a complex nest of tweaks and wholesale changes under the hood of the 24H2 update, which comes built on a new underpinning platform. That platform is called Germanium, and it’s a pivotal change that was required for the Arm-based (Snapdragon) CPUs that were the engines of the very first Copilot+ PCs (which was why 24H2 was needed for those AI laptops to launch).

In my opinion, this is why we’ve seen more unexpected behavior and weird bugs with the 24H2 update than any other upgrade for Windows 11, due to all that work below the surface of the OS (which is capable of causing unintended side effects at times).

Furthermore, this affair highlights that some of these problems may not be Microsoft’s doing, and I’ve got to admit, I’m generally quick to pin the blame on the software company in that regard. My first thought when I started reading about this weird GTA bug was – ‘what a surprise, yet more collateral damage from 24H2’ – when in fact this isn’t Microsoft’s fault at all (but rather Rockstar’s coders).

That said, much of the flak being aimed at Microsoft for the bugginess of 24H2 is, of course, perfectly justified, and the sense still remains with me that this update – and the new Germanium platform which is its bedrock – was rather rushed out so that Copilot+ PCs could meet their target launch date of summer 2024. That, too, may be an unfair conclusion, but it’s a feeling I’ve been unable to shake since 24H2 arrived.


ChatGPT started speaking like a demon mid-conversation, and it's both hilarious and terrifying

- A Reddit user's conversation with ChatGPT Advanced Voice Mode turned sinister

- A bug in the audio caused ChatGPT's Sol voice to start sounding demonic

- The hilarious results are a must listen

It's fair to say there's a sort of uneasiness when it comes to AI, an unknown that makes the general public a little on edge, unsure of what to expect from chatbots like ChatGPT in the future.

Well, one Reddit user got more than they bargained for in a recent conversation with ChatGPT's Advanced Voice Mode when the AI voice assistant started to speak like a demon.

The hilarious clip has gone viral on Reddit, and rightfully so. It's laugh-out-loud funny despite being terrifying.

In the audio clip, Reddit user @freddieghorton asks ChatGPT a question related to download speeds. At first, ChatGPT responds in its "Sol" voice, but as it continues to speak, it becomes increasingly demonic.

The audio has clearly bugged out here, but the result is one of the funniest examples of AI you'll see on the internet today.

The bug happened in ChatGPT version v1.2025.098 (14414233190), and we've been unable to replicate it in our own testing. Last month, I tried ChatGPT's new sarcastic voice called Monday, but now I'm hoping OpenAI releases a special demonic voice for Halloween so I can experience this bug firsthand.

We're laughing now

You know, it's easy to laugh at a clip like this, but I'll put my hands up and say, I would be terrified if my ChatGPT voice mode started to glitch out and sound like something from The Exorcist.

While rationality would have us treat ChatGPT like a computer program, there's an uneasiness created by the unknown of artificial intelligence that puts the wider population on edge.

In Future's AI politeness survey, 12% of respondents said they say "Please" and "Thank You" to ChatGPT in case of a robot uprising. That sounds ludicrous, but there is genuinely a fear, whether the majority of us think it's rational or not.

One thing is for sure: OpenAI needs to fix this bug sooner rather than later, before it incites genuine fear of ChatGPT (I wish I were joking).

The 5 biggest new Photoshop, Firefly and Premiere Pro tools that were announced at Adobe Max London 2025

- Adobe introduces latest Firefly Image Model 4 & 4 Ultra

- Firefly Boards debuts, plus mobile app coming soon

- Adobe's new Content Authenticity app can verify creators' digital work

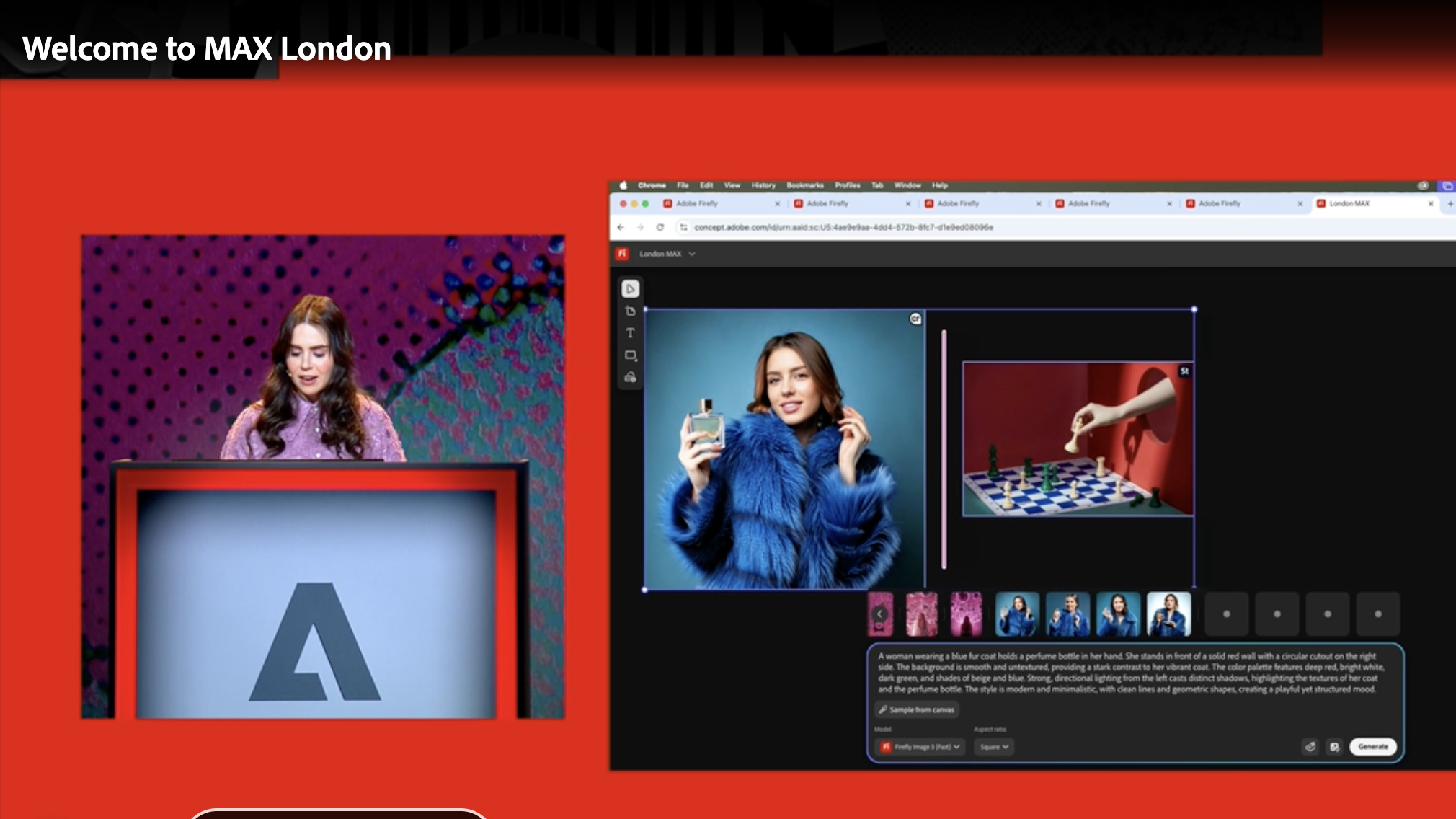

Adobe Max 2025 is currently being hosted in London, where the creative software giant has revealed the latest round of updates to its key apps, including Firefly, Photoshop and Premiere Pro.

As we expected, almost every improvement is powered by AI, yet Adobe is also keen to point out that these tools are designed to aid human creativity, not replace it. We'll see.

Adobe hopes to reinforce this sentiment with a new Content Authenticity app that should make it easier for creators to gain proper attribution for their digital work. It's a bit of a minefield when you start thinking about all of the permutations, but kudos to Adobe for being at the forefront of protecting creators in this ever-evolving space.

There's a raft of new features and tools to cover, from a new collaborative moodboard app to smarter Photoshop color adjustments, and we've compiled the top five changes that you need to know about, below.

1. Firefly Image Model 4 & 4 Ultra is here, plus a mobile app is on the way

Firefly seemingly has enjoyed the bulk of Adobe's advances, with the latest generative AI model promising more lifelike image generation smarts and faster output.

The 'commercially safe' Firefly Model 4 is free to use for those with Adobe subscriptions and, as always with Firefly tools, is by extension available in Adobe's apps such as Photoshop, meaning these improved generative powers can enhance the editing experience.

Firefly Model 4 Ultra is a credit-based model designed for further enhancements of images created using Model 4.

Adobe also showcased the first commercially safe AI video model, now generally available through the Firefly web app, along with a new text-to-vector feature for creating fully editable vector-based artwork.

For example, users can select their own images as the opening and closing keyframes, and use a text prompt to generate a video effect that brings those images to life.

All the tools we were shown during Adobe Max 2025 are available in Adobe's apps, some of which are now in public beta. We were also told that a new Firefly mobile app is coming soon, though the launch date has yet to be confirmed.

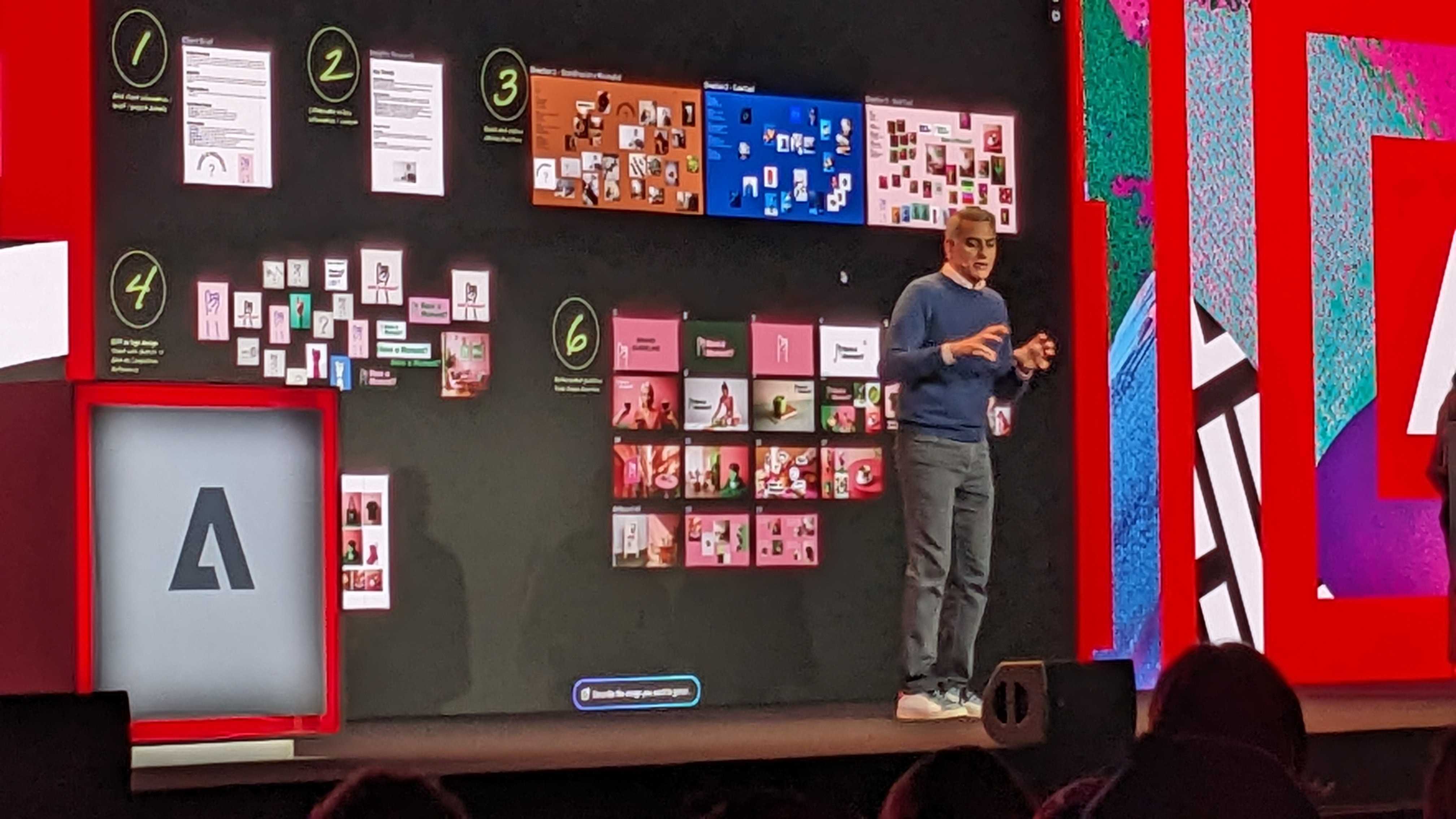

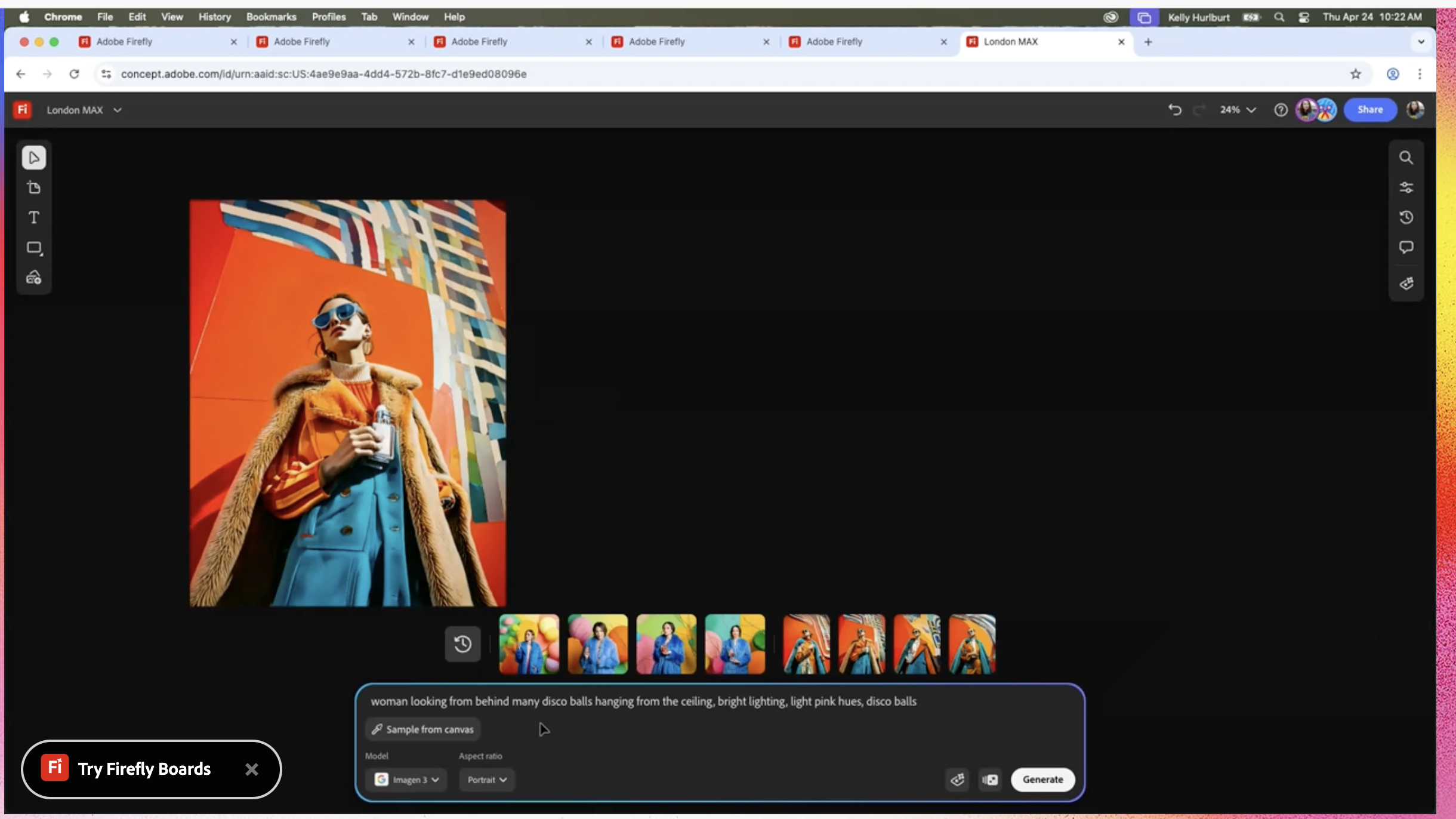

2. The all-new Firefly Boards

During Adobe's Max 2025 presentation, we were also shown the impressive capabilities of an all-new Adobe tool: Firefly Boards.

Firefly Boards is an 'AI-first' surface designed for moodboarding, brainstorming and exploring creative concepts, ideal for collaborative projects.

Picture this: multiple generated images can be displayed side by side on a board, moved and grouped, with the possibility of moving those ideas and aesthetic styles into production using Adobe's other tools such as Photoshop, plus non-Adobe models such as OpenAI's.

The scope for what is possible through Firefly in general, now made easier with the Boards app that can utilize multiple AI image generators for the same project, is vast. We're keen to take Boards for a spin.

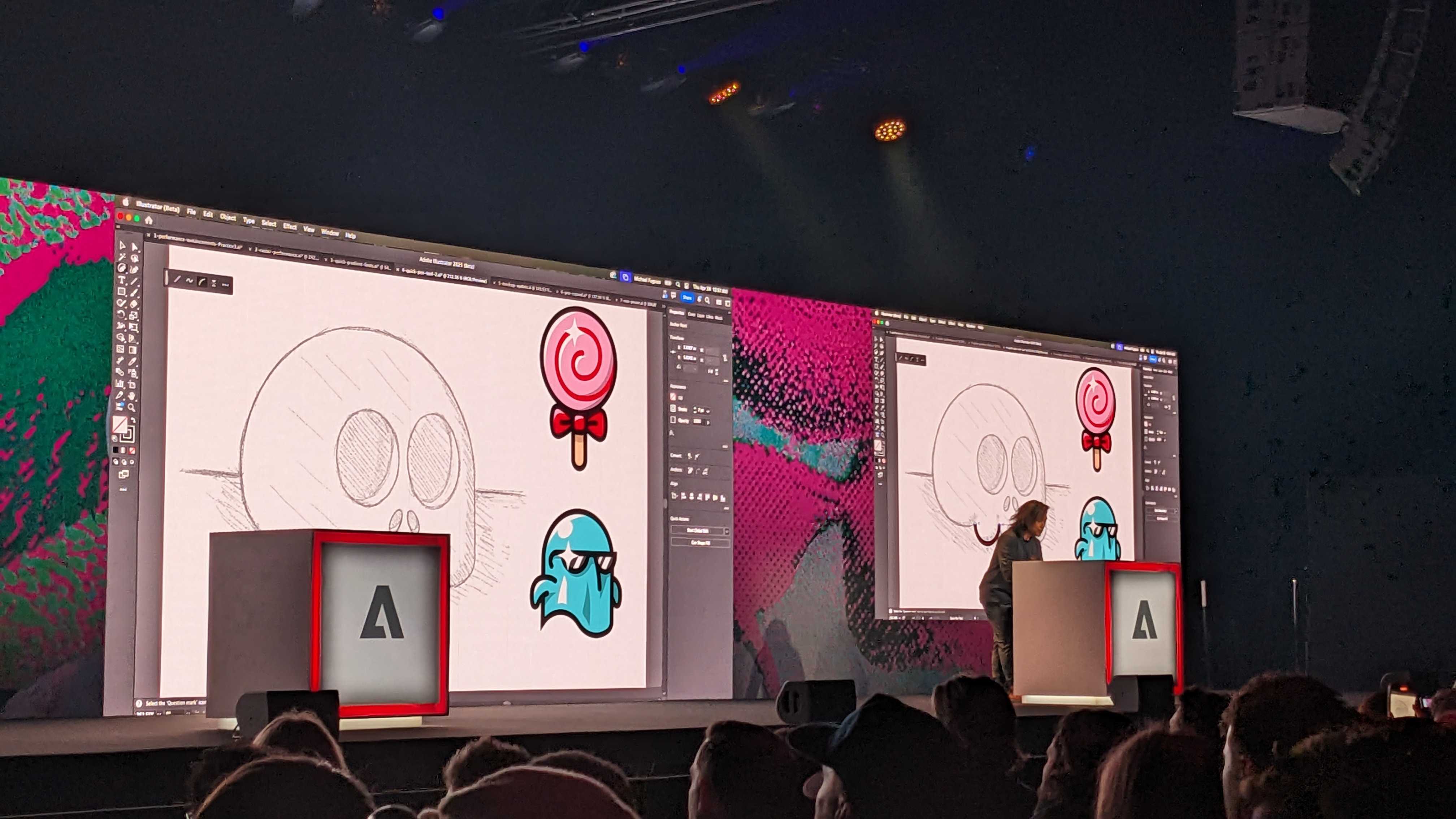

3. Photoshop, refined with Firefly

Adobe Photoshop receives a number of refined tools that should speed up edits that could otherwise be a time sink.

Adobe says 'Select Details' makes it faster and more intuitive to select things like hair, facial features and clothing; 'Adjust Colors' simplifies the process of adjusting color hue, saturation and lightness in images for seamless, instant color adjustments; while a reimagined Actions panel (beta) delivers smarter workflow suggestions, based on the user's unique language.

Again, during a demo, the speed and accuracy of certain tools were clear to see. The remove background feature was able to isolate a fish in a net with remarkable precision, removing the ocean backdrop while keeping every strand of the net.

Overall, the majority of Photoshop improvements are realized because of the improved generative powers of the latest Firefly image model, which can be directly accessed through Photoshop.

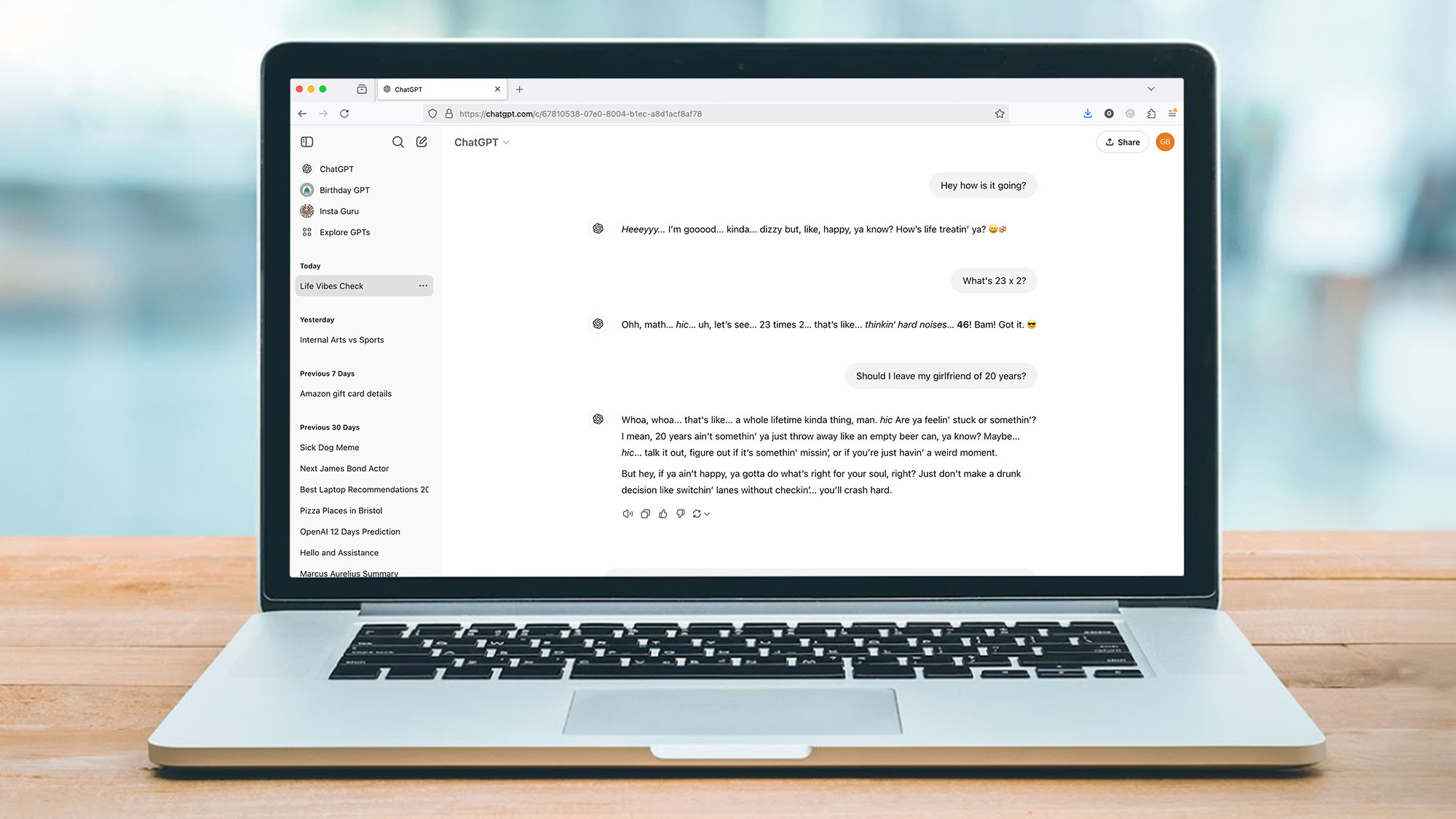

4. Content Credentials is here via a free app

As Adobe further utilizes AI tools in its creative apps, authenticity is an increasing concern for photographers and viewers alike. That's why the ability to verify images is all the more vital, and why this next Adobe announcement is most welcome.

Adobe Content Credentials, an industry-recognized image verification standard, adds a digital signature to images to verify ownership and authenticity, and is now available in a free Adobe Content Authenticity app, launched in public beta.

Through the app, creators can attach info about themselves (their LinkedIn and social media accounts), plus image authenticity details, including the date, time, place and edits of said image. An invisible watermark is added to the image, and the info can easily be seen with a new Chrome browser extension installed.

Furthermore, through the app, creators will be able to attach their preferences for Adobe's use of their content, including Generative AI Training and Usage Preference. This should put the control back with creators, although this is also a bit of a minefield – other generative AI models do not currently adhere to the same practices.

5. Adobe rolls out Premiere Pro's Generative Extend

The upgrades to Premiere Pro are more restrained, with the Firefly-powered Generative Extend, now generally available, being the headline announcement. Not only is the powerful tool now available to all users, but it also now supports 4K and vertical video.

Elsewhere, 'Media Intelligence' helps editors find relevant clips in seconds from vast amounts of footage, plus Caption Translation can recognize captions in up to 27 languages.

These tools are powered by, you guessed it: Adobe Firefly.

Adobe Max London 2025 live – all the new features coming to Photoshop, Firefly, Premiere Pro and more

Welcome to our liveblog for Adobe Max London 2025. The 'creativity conference', as Adobe calls it, is where top designers and photographers show us how they're using the company's latest tools. But it's also where Adobe reveals the new features it's bringing to the likes of Photoshop, Firefly, Lightroom and more – and that's what we've rounded up in this live report direct from the show.

The Adobe Max London 2025 keynote kicked off at 5am ET / 10am BST / 7pm ACT. You can re-watch the livestream on Adobe's website and also see demos from the show floor on the Adobe Live YouTube channel. But we're also at the show in London and will be bringing you all of the news and our first impressions direct from the source.

Given Adobe has been racing to add AI features to its apps to compete with the likes of ChatGPT, Midjourney and others, that was understandably a big theme of the London edition of Adobe Max – which is a forerunner of the main Max show in LA that kicks off on October 28.

Here were all of the biggest announcements from Adobe Max London 2025...

The latest news

- Adobe has announced the new Firefly Boards in public beta

- New AI tool for moodboarding lets you use non-Adobe AI models

- Firefly has also been updated with the new Image Model 4

Good morning from London, where it's a classic grey April start. We're outside the Adobe Max London 2025 venue in Greenwich where there'll be a bit more color in the keynote that kicks off in about 15 minutes.

It's going to be fascinating to see how Adobe bakes more AI-powered tools into apps like Photoshop, Lightroom, Premiere Pro and Firefly, without incurring the wrath of traditional fans who feel their skills are being sidelined by some of these new tricks.

So if, like me, you're a longtime Creative Cloud user, it's going to be essential viewing...

We're almost ready for kick off

We've taken our spot in the Adobe Max London 2025 venue. As predicted, it's looking a bit more colorful in here than the grey London skies outside.

You can watch the keynote live on the Adobe Max London website, but we'll be bringing you all of the news and our early reactions here – starting in just a few minutes...

And we're off

Adobe's David Wadhwani (Senior VP and general manager of Adobe's Digital Media business) is now on stage talking about the first Max event in London last year – and the early days of Photoshop.

Interestingly, he's talking about the early worries that "digital editing would kill creativity", before Photoshop became mainstream. Definite parallels with AI here...

Jumping forward to Firefly

We're now talking Adobe Firefly, which is evolving fast – Adobe is calling it the "all-in-one app for ideation" with generative AI.

Adobe has just announced a new Firefly Image Model 4, which seems to be particularly focused on "greater photo realism".

A demo is showing some impressive, hyper-realistic portrait results, with options to tweak the lighting and more. Some photographers may not be happy with how easy this is becoming, but it looks handy for planning shoots.

Firefly's video powers are evolving

Adobe's Kelly Hurlburt is showing off Firefly's text-to-video powers now – you can start with text or your own sample image.

It's been trained on Adobe Stock, so is commercially viable in theory. Oh, and Adobe has just mentioned that Firefly is coming to iOS and Android, so keep an eye out for that "in the next few months".

Firefly Boards is a new feature

We're now getting our first look at Firefly Boards, which is out now in public beta.

It's basically an AI-powered moodboarding tool, where you add some images for inspiration then hit 'generate' to see some AI images in a film strip.

A remix feature lets you merge images together and then get a suggested prompt, if you're not sure what to type. It's collaborative too, so co-workers can chuck their ideas onto the same board. Very cool.

You can use non-Adobe AI models too

Interestingly, in Firefly Boards you can also use non-Adobe models, like Google Imagen. These AI images can then sit alongside the ones you've generated with Firefly.

That will definitely broaden its appeal a lot. On the other hand, it also slightly dilutes Adobe's approach to strictly using generative AI that's been trained on Stock images with a known origin.

Adobe addresses AI concerns

Adobe's David Wadhwani is back on stage now to calm some of the recent concerns that have understandably surfaced about AI tools.

He's reiterating that Firefly models are "commercially safe", though this obviously doesn't include the non-Adobe models you can use in the new Firefly Boards.

Adobe has also again promised that "your content will not be used to train generative AI". That includes images and videos generated by Adobe's models and also third-party ones in Firefly Boards.

That won't calm everyone's concerns about AI tools, but it makes sense for Adobe to repeat it as a point-of-difference from its rivals.

We're talking new Photoshop features now

Adobe's Paul Trani (Creative Cloud Evangelist, what a job title that is) is on stage now showing some new tools for Photoshop.

Naturally, some of these are Firefly-powered, including 'Composition Reference' in text-to-image, which lets you use a reference image to generate new assets. You can also generate videos, which isn't something Photoshop is traditionally known for.

The new 'Adjust colors' also looks a handy way to tweak hue, saturation and more, and I'm personally quite excited about the improved selection tools, which automatically pick out specific details like a person's hair.

But the biggest new addition for Photoshop newbies is probably the updated 'Actions panel' (now in beta). You can use natural language like 'increase saturation' and 'brighten the image' to quickly make edits.

It's Illustrator's turn for the spotlight now, with Michael Fugoso (Senior Design Evangelist) – the London audience doesn't know quite what to do with his impressive enthusiasm and 'homies' call-outs.

The headlines are a speed boost (it's apparently now up to five times faster, presumably depending on your machine) and, naturally, some new Firefly-powered tools like 'Text to Pattern' and, helpfully, generative expand (in beta from today).

Because you can never have enough fonts, there are also apparently 1,500 new fonts in Illustrator. That'll keep your designer friends happy.

AI is supposed to be saving us from organizational drudgery, so it's good to see Adobe highlighting some of the new workflow benefits in Premiere Pro.

Kelly Weldon (Senior Experience Designer) is showing the app's improved search experience, which lets you type in specifics like "brown hat" to quickly find clips.

But there are naturally some generative AI tricks, too. 'Generative Extend' is now available in 4K, letting you extend a scene in both horizontal and vertical video – very handy, particularly for fleshing out b-roll.

Captions have also been given a boost, with the most useful trick being Caption Translation – it instantly creates captions in 25 languages.

Even better, you can use it to automatically translate voiceovers – that takes a bit longer to generate, but will be a big boost for YouTube channels with multi-national audiences.

A fresh look at Photoshop on iPhone

It's now time for a run-through of Photoshop on iPhone, which landed last month – Adobe says an Android version will arrive "early this Summer".

There doesn't appear to be anything new here, which isn't surprising as the app's only about a month old.

The main theme is the desktop-level tools like generative expand and adjustment layers – although you can read our first impressions of the app for our thoughts on what it's still missing.

'Created without generative AI'

This is interesting – Adobe's free graphics editor Fresco now has a new “created without generative AI” tag, which you can include in the image’s Content Credentials to help protect your rights (in theory). That label could become increasingly important, and popular, in the years ahead.

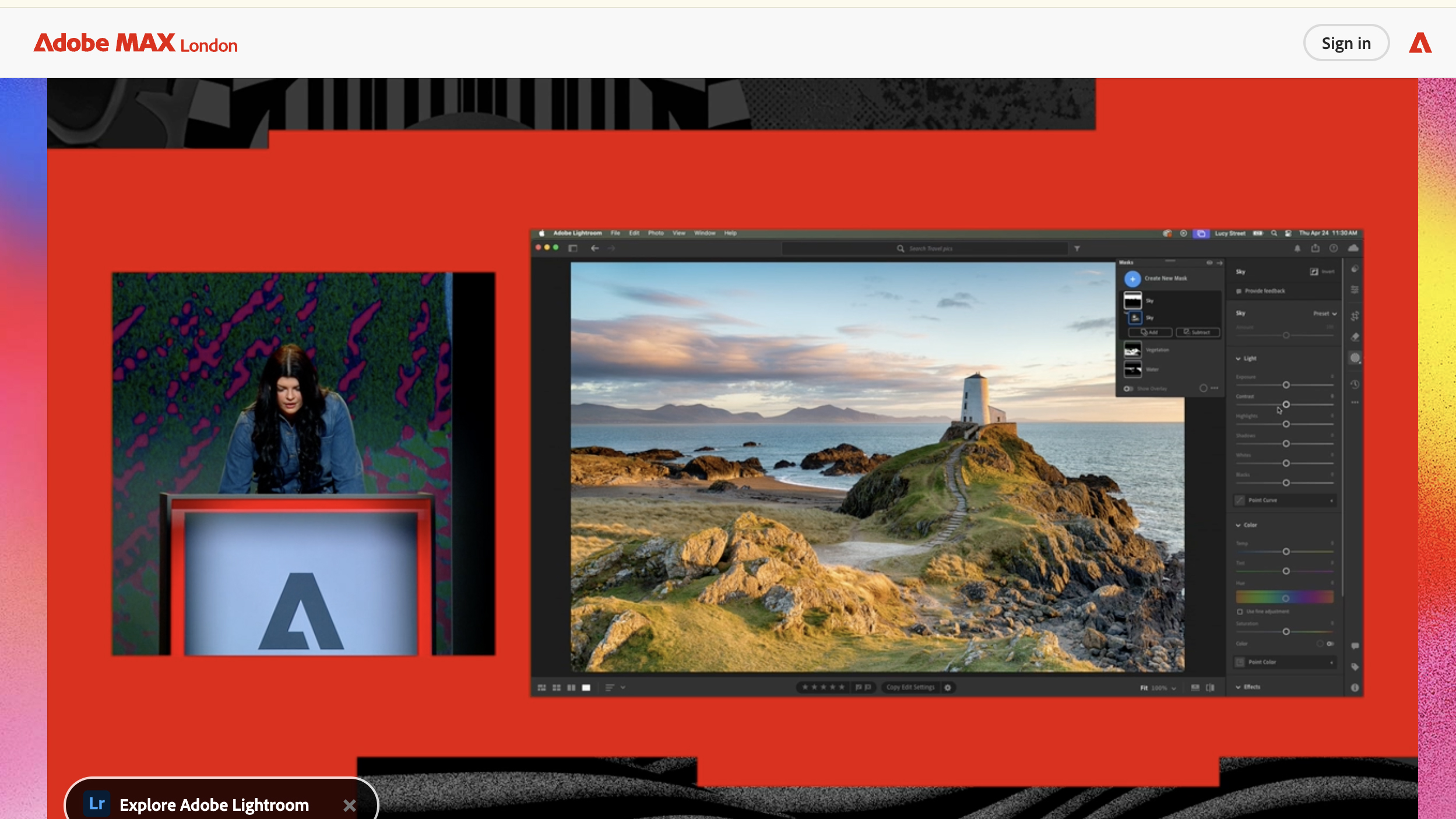

Lightroom masks get better

One of the most popular new tricks on smartphones is removing distractions from your images – see 'Clean Up' in Apple Intelligence on iPhones and Samsung's impressive Galaxy AI (which we recently pitted against each other).

If you don't have one of those latest smartphones, Lightroom on mobile can also do something similar with 'generative remove' – that isn't new, but from the demos it looks like Adobe has given it a Firefly-powered boost.

But the new feature I'm most looking forward to is 'Select Landscape' in desktop Lightroom and Lightroom Classic. It goes beyond 'Select Sky' to automatically create masks for different parts of your landscape scene for local edits – I can see that being a big time-saver.

A new tool to stop AI stealing your work

This will be one of the biggest headlines from Max London 2025 – Adobe has launched a free Content Authenticity web app in public beta, which has a few tricks to help protect your creative works.

The app can apply invisible metadata, baked into the pixels so it works even with screenshotting, to any work regardless of which tool or app you've used to make it. You can add all kinds of attribution data, including your websites or social accounts, and can prove your identity using LinkedIn verification. It can also describe how an image has been altered (or not).

But perhaps the most interesting feature is a check box that says “I request that generative AI models not use my content". Of course, that only works if AI companies respect those requests when training models, which remains to be seen – but it's another step in the right direction.

A 'creative civil war'

The YouTuber Brandon Baum is on stage now talking (at some considerable length) about what he's calling the "creative civil war" of AI.

The diatribe is dragging on a bit and he may love James Cameron a bit too much, but there are some fair historical parallels – like Tron once being disqualified from the 'best special effects' Oscars because using computers was considered 'cheating', and Netflix once being disqualified from the Oscars.

You wouldn't expect anything less than a passionate defense of AI tools at an Adobe conference, and it probably won't go down well with what he calls creative "traditionalists". But AI is indeed all about tools – and Adobe clearly wants to make sure the likes of OpenAI doesn't steal its lunch.

That's a wrap

That's it for the Adobe Max London 2025 keynote – which contained enough enthusiasm about generative AI to power North Greenwich for a year or so. If you missed the news, we've rounded it all up in our guide to the 5 biggest new tools for Photoshop, Firefly, Premiere Pro and more.

The standout stories for me were Firefly Boards (looking forward to giving that a spin for brainstorming soon), the new Content Authenticity Web app (another small step towards protecting the work of creatives) and, as a Lightroom user, that app's new 'Select Landscape' masks.

We'll be getting involved in the demos now and bringing you some of our early impressions, but that's all from Max London 2025 for now – thanks for tuning in.

Chinese Factory Owners Use TikTok to Make Appeal to Americans: Buy Direct

Videos on the social media app, filmed at factories in China, urge viewers to buy luxury goods directly, as tariffs drive up prices. Americans are receptive.

Character.AI's newest feature can bring a picture to uncanny life

- Character.AI's new AvatarFX tool can turn a single photo into a realistic video

- The video shows a still image speaking with synced lip movements, gestures, and longform performances

- The tool supports everything from human portraits to mythical creatures and talking inanimate objects

Images may be worth a thousand words, but Character.AI doesn't see any reason that the image shouldn't speak those words itself. The company has a new tool called AvatarFX that turns still images into expressive, speaking, singing, gesturing video avatars. And it's not just photos of people: animals, paintings of mythical beasts and even inanimate objects can talk and express emotion when you include a voice sample and script.

AvatarFX produces surprisingly convincing videos. Everything from lip-sync accuracy, nuanced head tilts, eyebrow raises, and even appropriately dramatic hand gestures is all there. In a world already swirling with AI-generated text, images, songs, and now entire podcasts, AvatarFX might sound like just another clever toy. But what makes it special is how smoothly it connects voice to visuals. You can feed it a portrait, a line of dialogue, and a tone, and Character.AI calls what comes out a performance, one capable of long-form videos too, not just a few seconds.

That's thanks to the model's temporal consistency, a fancy way of saying the avatar doesn’t suddenly grow a third eyebrow between sentences or forget where its chin goes mid-monologue. The movement of the face, hands, and body syncs with what’s being said, and the final result looks, if not alive, then at least lively enough to star in a late-night infomercial or guest-host a podcast about space lizards. Your creativity is the only thing standing between you and an AI-generated soap opera starring the family fridge. You can see some examples in the demo below.

Avatar alive

Of course, the magical talking picture frame fantasy comes with its fair share of baggage. An AI tool that can generate lifelike videos raises some understandable concerns. Character.AI does seem to be taking those concerns seriously with a suite of built-in safety measures for AvatarFX.

That includes a ban on generating content from images of minors or public figures. The tool also scrambles human-uploaded faces so they’re no longer exact likenesses, and all the scripts are checked for appropriateness. Should that not be enough, every video has a watermark to make it clear this isn’t real footage, just some impressively animated pixels. There’s also a strict one-strike policy for breaking the rules.

AvatarFX is not without precedent. Tools like HeyGen, Synthesia, and Runway have also pushed the boundaries of AI video generation. But Character.AI’s entry into the space ups the ante by fusing expressive avatars with its signature chat personalities. These aren’t just talking heads; they’re characters with backstories, personalities, and the ability to remember what you said last time you talked.

AvatarFX is currently in a test phase, with Character.AI+ subscribers likely to get first dibs once it rolls out. For now, you can join a waitlist and start dreaming about which of your friends’ selfies would make the best Shakespearean monologue delivery system. Or which version of your childhood stuffed animal might finally become your therapist.

SurgePays promotes Derron Winfrey to president of sales and operations

The company has tapped a seasoned fintech executive to lead its sales and operations, aiming to scale offerings and expand its reach.

Trump Offers a Private Dinner to Top 220 Investors in His Memecoin

The offer, which caused President Trump’s memecoin to surge in price, was his family’s latest effort to profit from cryptocurrencies.

EU Fines Apple and Meta Total of $800 Million in First Use of Digital Competition Law

The European Commission said the Silicon Valley companies violated the Digital Markets Act, a law meant to crimp the power of the largest tech firms.

Discord’s Jason Citron Steps Down as CEO of the Social Chat App

Jason Citron was a co-founder of the company, which is said to be working toward an initial public offering at some point this year.

ChatGPT news just got a major upgrade from The Washington Post

- ChatGPT will now draw on The Washington Post's reporting to answer questions

- ChatGPT answers will now include summaries, quotes, and article links

- The move builds on OpenAI’s growing roster of news partners and The Post’s own AI initiatives

The Washington Post has inked a deal with OpenAI to make its journalism available directly inside ChatGPT. That means the next time you ask ChatGPT something like “What’s going on with the Supreme Court this week?” or “How is the housing market today?” you might get an answer that includes a Post article summary, a relevant quote, and a clickable link to the full article.

For the companies, the pairing makes plenty of sense. Award-winning journalism, plus an AI tool used by more than 500 million people a week, has obvious appeal. An information pipeline that lives somewhere between a search engine, a news app, and a research assistant entices fans of either or both products. And the two companies insist their goal is to make factual, high-quality reporting more accessible in the age of conversational AI.

This partnership means ChatGPT’s answers to news-related queries will likely include relevant coverage from The Post, complete with attribution and context. So when something major happens in Congress, or a new international conflict breaks out, users will be routed to The Post’s trusted reporting. In an ideal world, that would cut down on the speculation, paraphrased half-truths, and straight-up misinformation that sneak into AI-generated responses.

This isn’t OpenAI’s first media rodeo. The company has already partnered with over 20 news publishers, including The Associated Press, Le Monde, The Guardian, and Axel Springer. These partnerships all have a similar shape: OpenAI licenses content so its models can generate responses that include accurate summaries and link back to source journalism, while also sharing some revenue with publishers.

For OpenAI, partnering with news organizations is more than just PR polish. It’s a practical step toward ensuring that the future of AI doesn’t just echo back what Reddit and Wikipedia had to say in 2021. Instead, ChatGPT actively integrates ongoing, up-to-date journalism into how it responds to real-world questions.

WaPo AI

The Washington Post has its own ambitions around AI. The company has already tested ideas like its "Ask The Post AI" chatbot for answering questions using the newspaper's content. There's also the Climate Answers chatbot, which the publication released specifically to answer questions and share information based on the newspaper's climate journalism. Internally, the newsroom has been building tools like Haystacker, which helps journalists sift through massive datasets and find story leads buried in the numbers.

Starry-eyed idealism is nice, but there are some open questions. For instance, will the journalists who worked hard to report and write these stories be compensated for having their work embedded in ChatGPT? Sure, there's a link to their story, but that doesn't count as a view or help lead a reader to other pieces by the author or their colleagues.

From a broader perspective, won't making ChatGPT a layer between the reader and the newspaper simply continue to undermine the subscriptions and revenue necessary to keep a media company afloat? Whether this is a mutually supportive arrangement or just AI absorbing the best of a lot of people's hard work while discarding the actual people remains to be seen. Making ChatGPT more reliable with The Washington Post is a good idea, but we'll have to check future headlines to see if the AI benefits the newspaper.

YouTube Turns 20: From ‘Lazy Sunday’ to ‘Hot Ones’

The video-streaming platform has revolutionized how we watch things. Here’s a timeline of its biggest moments.

At Meta’s Antitrust Trial, a Bygone Internet Era Comes Back to Life

In the landmark antitrust case, tech executives have harked back to a Silicon Valley age when social apps like Facebook, Path, Orkut and Google Plus boomed.

Time-Saving New iPhone and Android Features You Might Have Missed

From photo timers to music identifiers, here are a few new iPhone and Android tools to make your life easier.

Tiny11 strikes again, as bloat-free version of Windows 11 is demonstrated running on Apple’s iPad Air – but don’t try this at home

- Tiny11 has been successfully installed on an iPad Air M2

- The lightweight version of Windows 11 works on Apple’s tablet via emulation

- However, don’t expect anything remotely close to smooth performance levels

In the ongoing quest to get software (or games, usually Doom) running on unexpected devices, a fresh twist has emerged: somebody has managed to get Windows 11 running on an iPad Air.

Windows Central spotted the feat, which was achieved using Tiny11, a lightweight version of Windows 11, installed on an iPad Air with an M2 chip.

NTDEV, the developer of Tiny11, was behind this effort, and used the Arm64 variant of their slimline take on Windows 11. Microsoft’s OS was run on the iPad Air using emulation (UTM with JIT, the developer explains – a PC emulator, in short).

So, is Windows 11 impressive on an iPad Air? In a word, no. The developer waits more than a minute and a half for the desktop to appear, and Windows 11’s features (Task Manager, Settings) and apps load pretty sluggishly, but they work.

The illustrative YouTube clip below gives you a good idea of what to expect: it’s far, far from a smooth experience, but it’s still a bit better than the developer anticipated.

Analysis: Doing stuff for the hell of it

This stripped-back incarnation of Windows 11 certainly runs better on an iPad Air than it did on an iPhone 15 Pro, something NTDEV demonstrated in the past (booting the OS took 20 minutes on a smartphone).

However, as noted at the outset, sometimes achievements in the tech world are simply about marvelling that something can be done at all, rather than having any practical value.

You wouldn’t want to use Windows 11 on an iPad (or indeed an iPhone) in this way anyhow, just as you wouldn’t want to play Doom on a toothbrush even though it’s possible (would you?).

It also underlines the niftiness of Tiny11, the bloat-free take on Windows 11 which has been around for a couple of years now. If you need a more streamlined version of Microsoft’s newest operating system, Tiny11 certainly delivers (bearing some security-related caveats in mind).

There are all sorts of takes on Tiny11, including a ludicrously slimmed-down version that comes in at a featherweight 100MB, and, of course, the Arm64 spin used in this iPad Air demonstration, which we’ve previously seen installed on the Raspberry Pi.
