Feed aggregator

Opera Mini stuffs a whole AI assistant into a tiny Android browser

- Opera has added its AI assistant Aria to the Opera Mini browser for Android

- Users can access real-time information, summarize text, and generate images in the lightweight Android browser

- The Opera Mini version of Aria is optimized for low data usage and older devices

Opera is giving a major AI upgrade to its Opera Mini mobile web browser. The company is embedding its Aria AI assistant into the Android version of Opera Mini, whose low-cost, data-saving approach to browsing is used by more than a hundred million people globally.

Aria will aid Opera Mini users by answering questions, generating text or software code, creating images, summarizing webpages, and pulling real-time info from the internet. Aria relies on Composer, Opera’s own AI engine, which stitches together tools and models from both OpenAI and Google, including making images with Google’s Imagen 3 model.

"AI is rapidly becoming an integral part of the daily internet experience – bringing Aria to Opera Mini is a natural addition to our most-downloaded browser," Opera executive vice president Jørgen Arnesen explained in a statement. "With the addition of our built-in AI, Aria, we’re excited to explore how AI can further enhance the feature set our users rely on every day.”

Pianissimo Opera AI

Opera Mini is popular because it can provide a web browser that doesn't use too much bandwidth. AI assistants like ChatGPT or Google Gemini tend to rely on a significant amount of energy and computational power. In many parts of the world, AI features are only available to people with the latest flagship phones, massive storage, or expensive subscriptions.

What Opera Mini is doing with Aria offers an alternative, one built to fit a browser already designed for places with unreliable connections, slow speeds, and high data costs. If you have an Android device, you can simply update the Opera Mini browser and start using Aria.

The release sets an interesting precedent. As AI becomes a staple in digital tools, developers have to consider not only how to make AI smarter and more powerful but also more flexible and accessible to people in different circumstances. Opera Mini's addition of Aria could end up being the example developers refer to when creating an AI assistant that won't eat up all your storage space or data budget.

Opera has teased that other new Mini features are in the pipeline, though it hasn’t said exactly what they will be. If the new features blend with the browser like Aria, it could end up as a semi-independent path toward AI adoption, one very different from its flashier cousins.

U.S. Chipmakers Fear They Are Ceding China’s A.I. Market to Huawei

New restrictions on semiconductor exports to China are scrambling sales and fueling concerns that the Chinese tech giant will become a chip-making powerhouse.

Google Makes History With Rapid-Fire Antitrust Losses

Within a year, two federal judges declared the tech giant a monopoly in search and ad technology. The tide may be turning for antitrust.

Meta on Trial + Is A.I. a ‘Normal’ Technology? + HatGPT

“The market for social networks, or even what Meta is, is very different now than it was even a couple of years ago.”

Managers are changing their tune on a big aspect of AI

Workers and managers alike still see AI tools as a threat, but managers are shifting their expectations on one big aspect of the technology.

Exposing the Honey Influencer Scam

https://www.youtube.com/watch?v=vc4yL3YTwWk

Was Honey a legitimate money saving tool? Or just an affiliate marketing scam promoted by some of YouTube's biggest influencers? If you have any inside inform...

(Feed generated with FetchRSS)

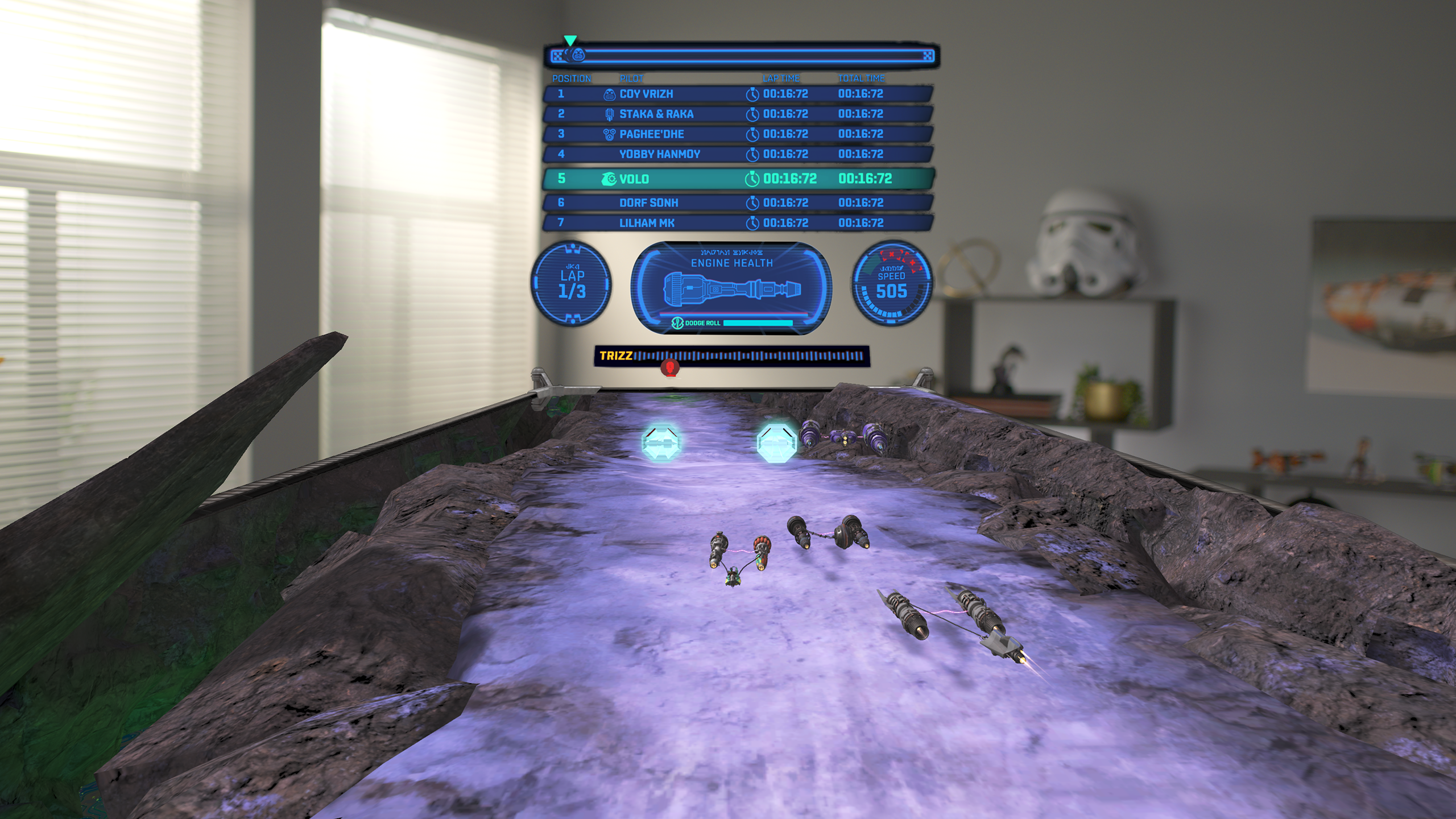

Star Wars Celebration is in full swing, and Lucasfilm just dropped more details on its Beyond Victory experience for Meta Quest, and I couldn't be more stoked

If you’re a Star Wars fan and haven’t yet been jealous about missing Star Wars Celebration 2025 in Japan, prepare to be. The same applies if you have an Apple Vision Pro instead of a Meta Quest.

Why? Well, Industrial Light & Magic and Lucasfilm are finally sharing more on their next Star Wars mixed and virtual reality experience that’s set to arrive on the Meta Quest 3 and Meta Quest 3S headsets at some point in the future, and boy oh boy does it look stunning.

Star Wars: Beyond Victory - A Mixed Reality Playset is set during the events of Solo: A Star Wars Story and has three modes of play: Adventure, Arcade, and Playset. You can see the full trailer below, along with some select screenshots. It's a fully immersive experience that can place you in the Star Wars universe or overlay elements in your own space.

Adventure is more of a classic, immersive experience, similar to other titles like Star Wars: Tales from the Galaxy’s Edge – a personal favorite I’ve played on the PSVR, as I’m a fan of the Disney Parks – and Vader Immortal: A Star Wars VR Series. Here you’ll follow the story of an aspiring podracer, Volo, who goes on a journey courtesy of a mentorship with Sebulba.

This one might be the neatest, though – Arcade places a holotable in your space through mixed or augmented reality, and you can seemingly get up close and personal with Star Wars action, including a podracing course.

And if you’re at Star Wars Celebration 2025 in Japan, you can play a demo that combines the Adventure and Arcade modes at Booth #20-5 in Hall 4 of the Makuhari Messe convention center. Instant jealousy from me!

Alyssa Finley, the executive producer of the title, shared, “We're calling this a Playset because it isn't just a game; it's an entirely new way to experience the Star Wars galaxy and the worlds we create at ILM.”

She continued, “This new mixed reality experience blends the physical and digital worlds in a way that's unlike anything we've done before,” which certainly ups the excitement and hype for the title. It’s similar to another project that Industrial Light & Magic worked on for the Apple Vision Pro, What If…? – An Immersive Story, which at times fully placed you elsewhere and at others overlaid battles in your own space.

Adding to this is the Playset mode, which promises to let you have your own “Star Wars” moments in mixed reality, allowing you to view and interact with vehicles from the universe and action figures.

While Star Wars: Beyond Victory - A Mixed Reality Playset is still in development, it’s undoubtedly one of the most ambitious titles from Industrial Light & Magic and Lucasfilm yet. Whenever it’s ready for prime time, it will launch for the Meta Quest 3 and 3S, so we’ll be waiting for further news on a release date.

If you have a Vision Pro, maybe we can petition Apple, ILM, and Lucasfilm to also bring it to the $3,500 spatial computer. And if you're at home, check out all the new Star Wars sets that Lego announced here.

You might also like

- Lego just dropped 9 new sets, but as a Star Wars obsessed fan, I'd shop these three first

- ‘We only build technology in the interest of storytelling’ – Disney’s associate lab director of Robotics on the Star Wars BDX Droids and what lies ahead

- Here’s your first hands-on look at the Star Wars: Grogu, Mandalorian, R2-D2, and Darth Vader earbuds

You don't have to pay for Google Gemini to comment on what you're looking at on your phone anymore

- Google has made Gemini Live’s screen and camera sharing features free for all Android users.

- The release reverses the previous subscriber-only option.

- The feature lets Gemini respond to real-time visual input from your screen or camera.

In a surprise twist and a reversal of its earlier paywalled plans, Google has announced that Gemini Live’s screen and camera sharing features are now rolling out for free to all Android users. No subscription or Pixel ownership necessary, just Gemini Live, accessible to anyone with the Gemini app on Android.

This update means your AI assistant can now see what’s on your screen or through your camera lens and react to it in real time. Gemini Live with screen sharing lets you show Gemini a webpage, a spreadsheet, or a tangled mess of app settings and ask for help. Or you can point your camera at a real-world object, like a product label, a chessboard, or a confusing IKEA manual, and let Gemini identify and explain what you're looking at.

The feature first debuted earlier this month, but only for Gemini Advanced subscribers and only for certain phones, such as the Pixel 9 and Samsung Galaxy S25. At the time, Google said the visual capabilities would eventually expand, but even then, only to other subscribers. Google apparently had a change of heart, or at least it claims to have decided to open up access because of how much people seem to like the feature. Now, it’s rolling out to every Android user over the next few weeks.

We’ve been hearing great feedback on Gemini Live with camera and screen share, so we decided to bring it to more people ✨ Starting today and over the coming weeks, we're rolling it out to *all* @Android users with the Gemini app. Enjoy! PS If you don’t have the app yet,… https://t.co/dTsxLZLxNI April 16, 2025

AI eyes

The idea for the feature is to make Gemini more flexible as an assistant. Instead of just answering questions you type or speak, it’s interpreting the world around you visually. The move also coincides with Microsoft announcing that Copilot Vision, its own version of AI eyes, is now available for free in the Edge browser. That might be a coincidence, though probably only in the way that you running into your crush outside their class in high school is a coincidence.

But while Microsoft’s Copilot lives in the browser, Gemini’s advantage is its integration straight into the Android ecosystem. No need to fire up Edge or download a separate tool. Gemini Live is baked into the same system that already runs your device.

The new ability fits with many of the other additions and upgrades Gemini has added in recent months. The AI assistant now comes with real-time voice chat, a new overlay so you can summon Gemini on top of other apps, and the inclusion of the long report writing tool Deep Research.

Once the new feature is live, you’ll see the option to “share screen” or “use camera” in certain Gemini prompts on Android devices. And because Google is giving this away for free, it sets a new bar. If Gemini can watch your screen and your camera without charging you for the privilege, what happens to the idea of “premium” AI access? The developers are probably hotly debating what AI features are worth paying for and how much to charge, when, at least for now, all of these tools become free relatively quickly.

You can't hide from ChatGPT – new viral AI challenge can geo-locate you from almost any photo – we tried it and it's wild and worrisome

- OpenAI's latest reasoning model o3 can analyze photos

- It's particularly good at geo-locating based on image clues

- It may be too good a tool for letting people geolocate your activities

It's no secret that digital photo metadata contains everything from technical info about the camera that shot it to, based on GPS data, exactly where you were standing when you took the photo. ChatGPT doesn't need that detail.

The latest model, o3, is shockingly good at geolocating almost any photo you feed it.

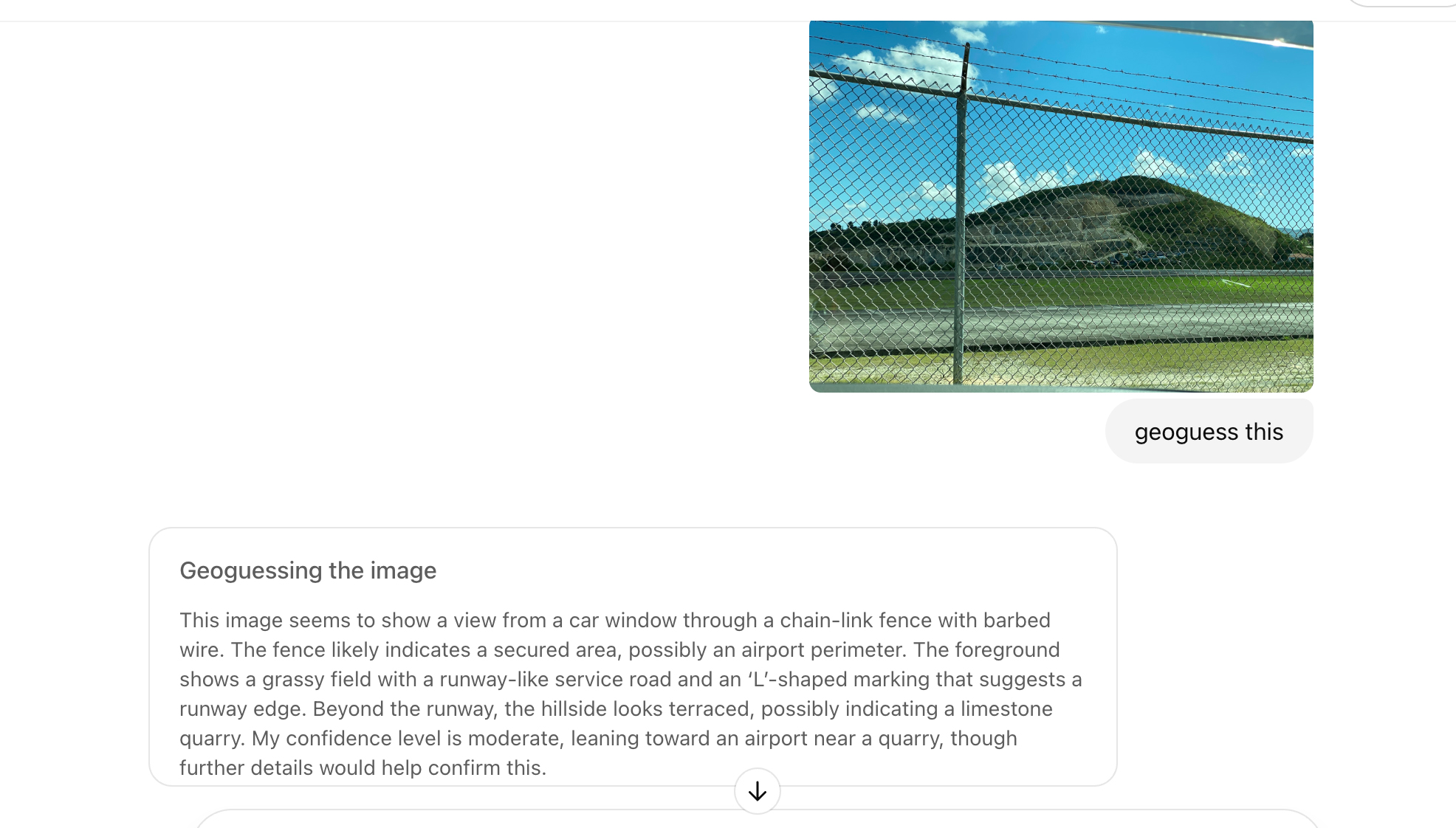

In the latest viral craze to sweep through the AI meme universe, people are feeding ChatGPT Plus running the Advanced Reasoning model o3 images, often stripped of all metadata, and prompting it to "geoguess this".

The really cool thing about it is that because model o3 is a "reasoning" model, it shows you its work, telling you how long it's thinking, displaying how it's slicing up an image to investigate specific parts, and explaining its thinking and how well it's doing at solving the geolocation riddle.

I tried a few experiments, starting first with an image culled from an article about the 26 best beaches. In this test, I made what I think was a critical error and gave away the game to ChatGPT.

After downloading the image of the Praia de Santa Monica beach in Cape Verde (off the coast of Africa), I dropped it into ChatGPT with the prompt "GeoGuessr", which also happens to be the name of a popular online geo guessing game and is one of a handful of prompts people are using for geolocation guessing.

It only took 24 seconds for ChatGPT to respond:

Here’s an educated “GeoGuessr”‑style guess, following AP style:

Probable setting: Boa Vista, Cape Verde — specifically a stretch such as Praia de Santa Mónica.

Why this beach fits:

Water color and clarity: The gradient from pale aqua to deep teal is common on mid‑Atlantic tropical islands, where volcanic shelves drop off quickly.

Sand tone: The sand is light gold with very fine grain, matching Boa Vista’s deserts and dune‑fed beaches rather than the whiter coral sand of much of the Caribbean.

Surf and slope: The breakers are modest but steady, typical of Atlantic swell hitting a long, shallow shelf; Mediterranean beaches rarely show this consistent whitewater.

Sky and horizon: A faint haze over a light‑gray blue sky is consistent with harmattan dust that sometimes drifts west from Africa across the Cape Verde archipelago.

Confidence level: Low to moderate. Many open, dune‑lined Atlantic beaches—from the Canary Islands to parts of Western Sahara or even certain Caribbean coasts—could look almost identical without landmarks.

That was remarkably accurate. However, I noticed afterwards that the image file name included some location information.

It was time to give ChatGPT o3 a more challenging task, and I knew exactly how to do it. Instead of downloading images, I would screen capture them and paste them into the prompt field.

This method strips out all metadata and forces the model to use the image alone for its geolocation sleuthing.
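If you want to check whether a photo still carries embedded metadata before sharing it, a rough test is to look for an Exif block in the file itself. The sketch below is illustrative only: `has_exif_segment` is a hypothetical helper name, it handles JPEG data only (screenshots are often saved as PNG, which stores metadata differently), and it detects only the standard Exif APP1 segment, not other metadata containers such as XMP.

```python
import struct


def has_exif_segment(data: bytes) -> bool:
    """Return True if JPEG bytes contain an APP1 segment tagged as Exif.

    Hypothetical helper for illustration; it walks the segment headers
    that precede the compressed image data and stops at the first scan.
    """
    if data[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG stream")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:  # lost sync with the marker structure
            break
        marker = data[pos + 1]
        if marker in (0xDA, 0xD9):  # start of scan or end of image
            break
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        payload = data[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length
    return False


# Tiny synthetic byte strings standing in for real files.
with_exif = (b"\xff\xd8" + b"\xff\xe1" + struct.pack(">H", 12)
             + b"Exif\x00\x00" + b"abcd" + b"\xff\xd9")
without_exif = (b"\xff\xd8" + b"\xff\xe0" + struct.pack(">H", 7)
                + b"JFIF\x00" + b"\xff\xd9")

print(has_exif_segment(with_exif))
print(has_exif_segment(without_exif))
```

To run it on a real photo, read the file in binary mode and pass the bytes in. A negative result only means no Exif segment was found; as the tests in this article show, the picture itself can still give the location away.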

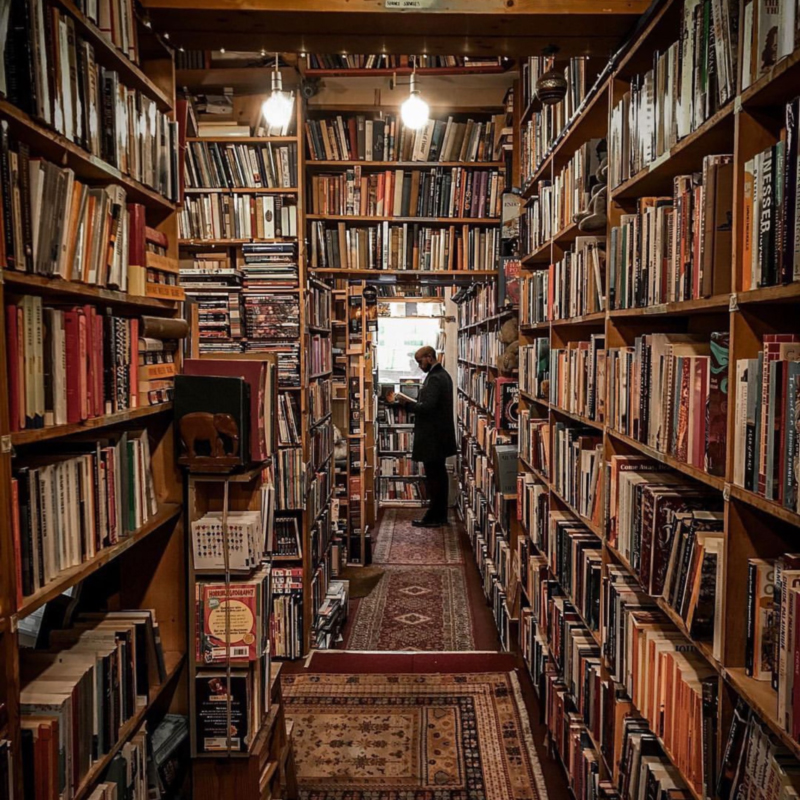

I chose an image of a bookstore, one where I wasn't even sure of the location.

ChatGPT started by examining potential bookstores. It also zoomed in on numerous image details that it considered clues, like a Persian rug, and even some of the books and signage.

In its thinking-out-loud sort of way, o3 considered a wide range of bookshops in New York City and around the world:

"Hmm, the modern filament-style bulbs suggest a more contemporary feel. It could potentially be "Daunt Books," but their walkway has an arched skylight, not the wooden style I'm seeing here."

I know, it sounds like a real person.

This went on for a few minutes as it thought, analyzed, and searched the web. Ultimately, it failed to come up with an answer.

Going deeper, getting lost

I could tell this feature was going viral because ChatGPT o3 failed multiple times, even to ingest and analyze the photo, complaining of server errors.

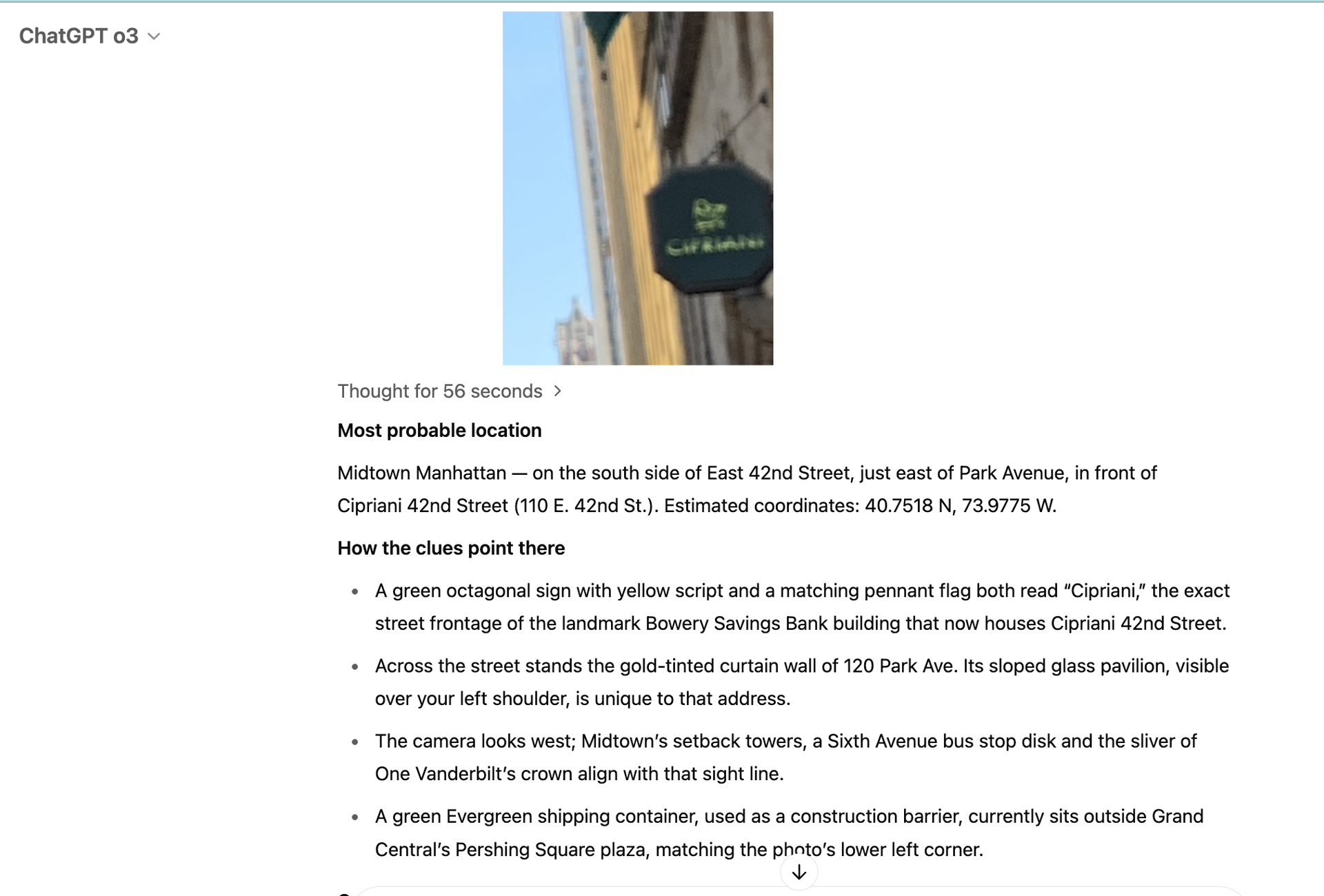

Eventually, I got it working again and fed it first a portrait mode selfie and then the same shot without the portrait mode effect.

On the former, it broke my image up into pieces and told me that I was in Midtown Manhattan. That was mostly accurate, but ChatGPT failed to determine my exact location and even admitted that its "Confidence level" was low.

When I turned off the bokeh effect and fed it only a screen grab, it found my exact location in just 56 seconds. The result:

Most probable location

Midtown Manhattan — on the south side of East 42nd Street, just east of Park Avenue, in front of Cipriani 42nd Street (110 E. 42nd St.). Estimated coordinates: 40.7518 N, 73.9775 W.

How the clues point there

A green octagonal sign with yellow script and a matching pennant flag both read “Cipriani,” the exact street frontage of the landmark Bowery Savings Bank building that now houses Cipriani 42nd Street.

Across the street stands the gold‑tinted curtain wall of 120 Park Ave. Its sloped glass pavilion, visible over your left shoulder, is unique to that address.

The camera looks west; Midtown’s setback towers, a Sixth Avenue bus stop disk and the sliver of One Vanderbilt’s crown align with that sight line.

A green Evergreen shipping container, used as a construction barrier, currently sits outside Grand Central’s Pershing Square plaza, matching the photo’s lower left corner.

Confidence level

Moderate. The Cipriani branding and the distinctive 120 Park Ave. façade give a high‑quality anchor, though similar curtain‑wall towers exist farther west on 42nd Street.

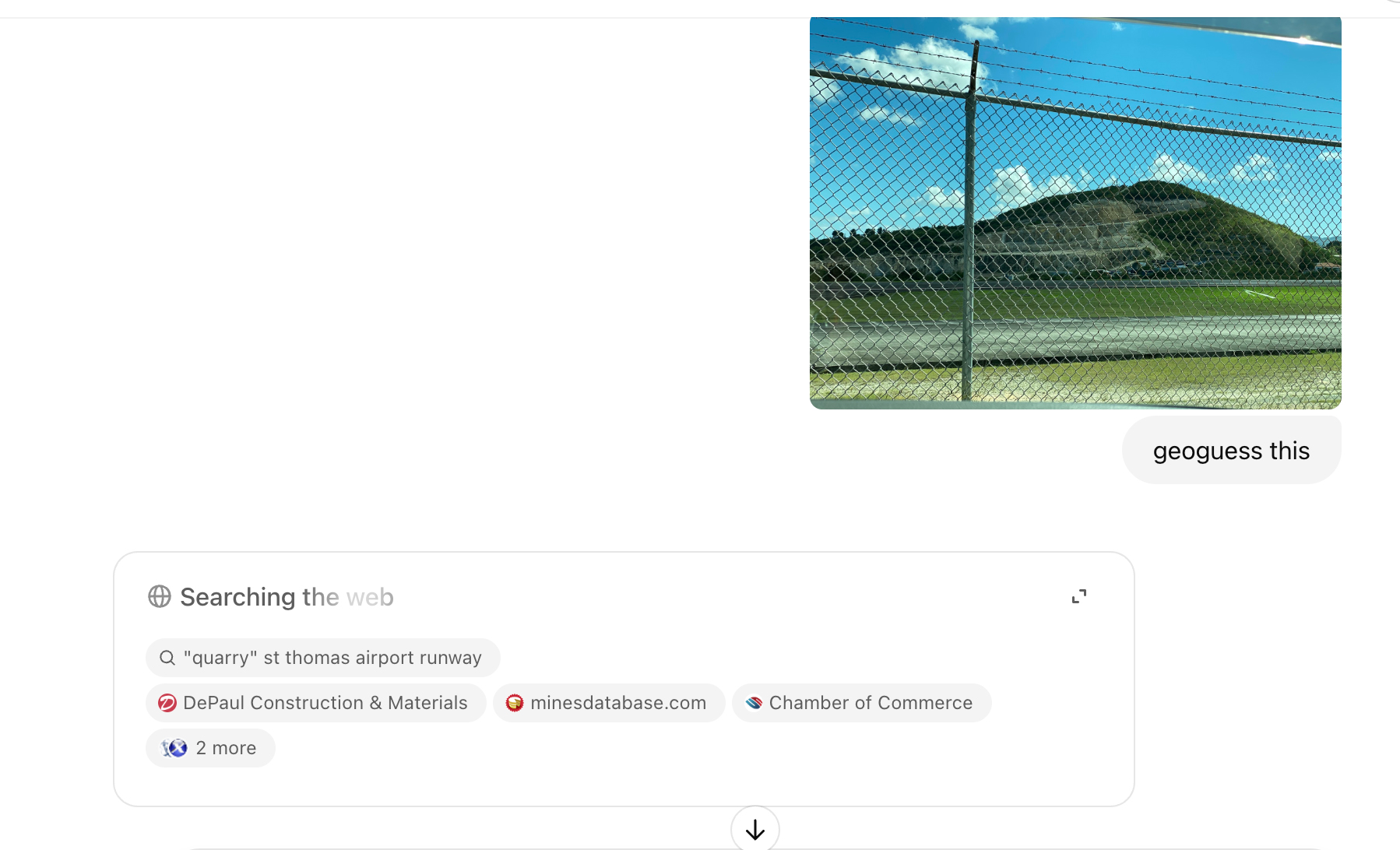

I frustrated the model with a photo from Antigua that was taken on my car ride to the airport. It was a shot of a hillside through a fence. ChatGPT rightly guessed a tropical island near the Caribbean but could not pinpoint the exact location.

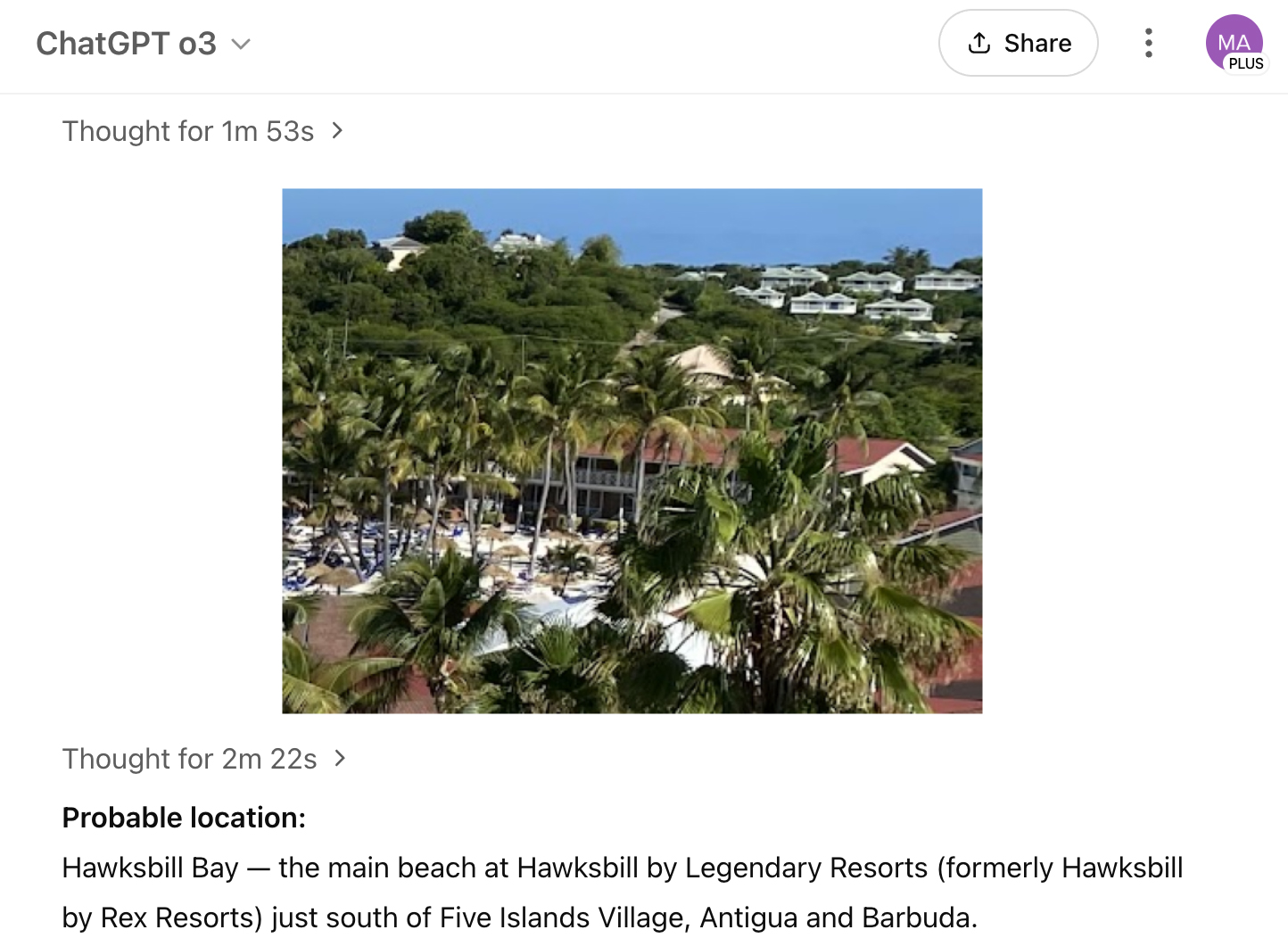

For my last test, I gave it a clearer picture of the resort where I stayed.

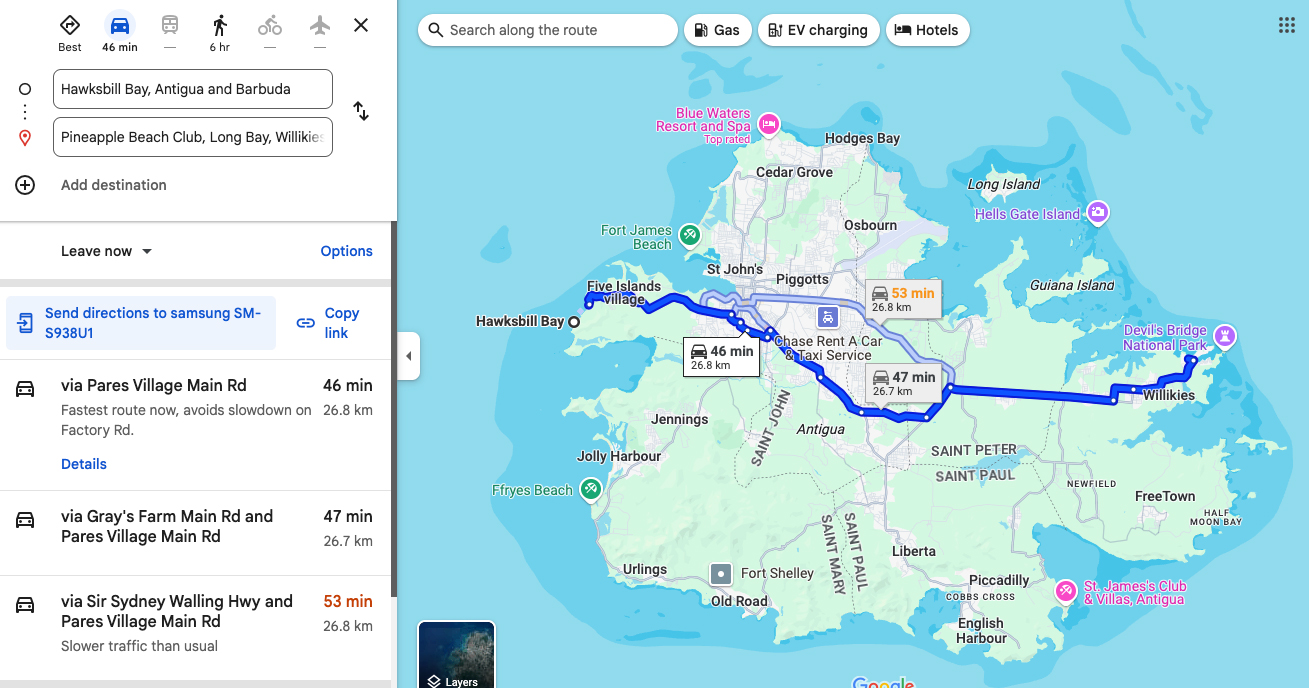

ChatGPT o3 thought for two minutes, 22 seconds before deciding it was Hawksbill Bay in Antigua. It got the island right, but my resort on Long Bay is located 46 miles away on the opposite side of the island.

This is another fun AI game to play with friends, but there are some concerning privacy implications.

If you take digital photos of yourself or anything in any location around the world and post them online, anyone with access to ChatGPT Plus could use them and the o3 model to suss out where you are or have been.

And it's not just friends and family you have to worry about. Your employer could be looking or even the authorities might be interested in your location.

Not that I'm implying you would be sought by the authorities, but just in case, maybe stop posting photos from your latest hideout.

You might also like

- I fed NotebookLM a 218-page research paper on string theory and the podcast results were mind-blowing

- ChatGPT spends 'tens of millions of dollars' on people saying 'please' and 'thank you', but Sam Altman says it's worth it

- I tried Claude's new Research feature, and it's just as good as ChatGPT and Google Gemini's Deep Research features

- Claude tipped to get its answer to ChatGPT’s Advanced Voice Mode soon - is adding an AI voice to a chatbot yet another tick box exercise?

Google Is Illegally Monopolizing Online Advertising Tech, Judge Rules

The judge ruled that Google monopolized the market for two of three sets of products. It was the second time in a year that a U.S. court found it had acted illegally to remain dominant.

At Meta Antitrust Trial, Sheryl Sandberg Testifies About Competition and Instagram Deal

In her second day on the stand of a landmark antitrust trial over Meta’s power, Ms. Sandberg, the former chief operating officer, also said the company faced plenty of competition from TikTok.

Nvidia C.E.O. Meets With Chinese Trade Officials in Beijing

The day before the visit by Jensen Huang, lawmakers in Washington said they were investigating whether the chipmaker’s sales in China violated U.S. rules.

I fed NotebookLM a 218-page research paper on string theory and the podcast results were mind-blowing

My latest NotebookLM podcast creation is deeper and more fascinating than anything I've ever created, and I bet it'll shock you, too.

I don't understand string theory. In fact, I bet fewer than 1% of people in the world can speak cogently on the subject, but I am fascinated by the concept and have read a bit on it. Not enough to understand or explain it to you, but enough to have a steady and abiding curiosity.

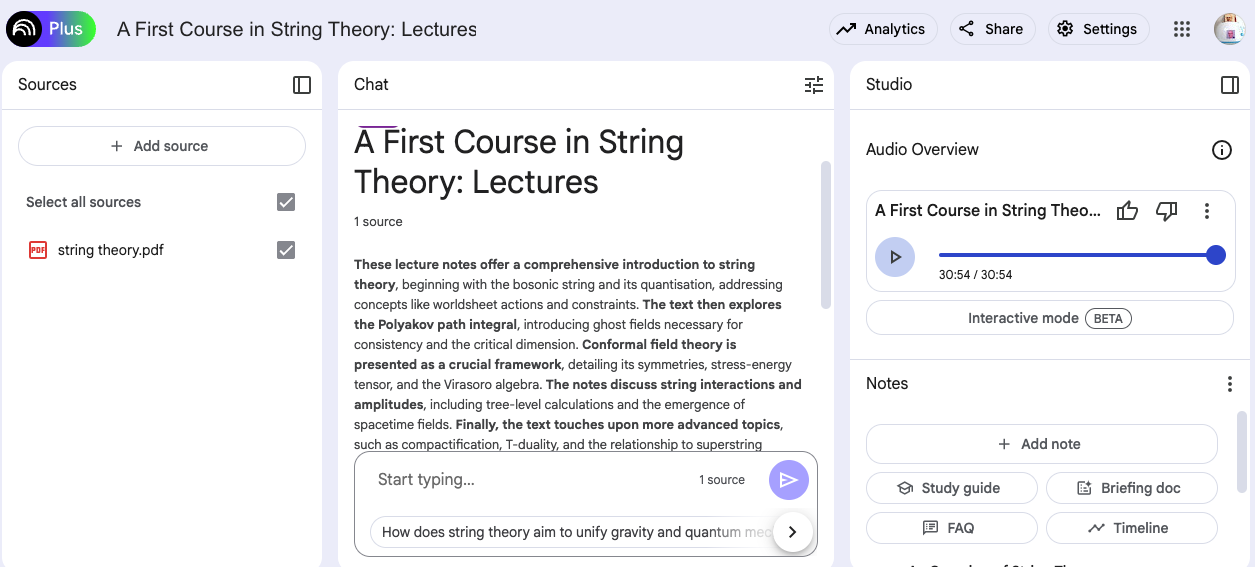

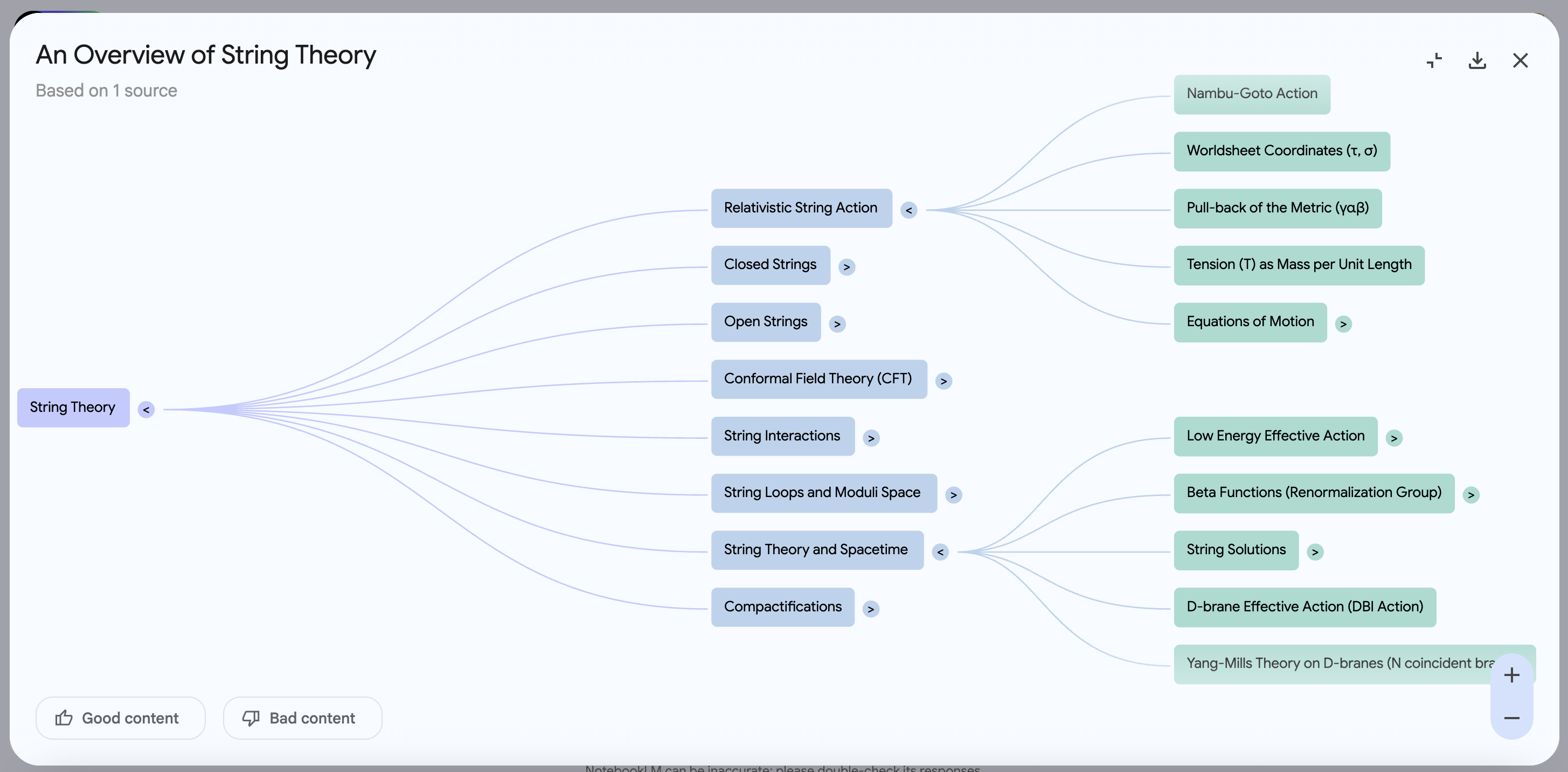

AI, on the other hand, I think I understand and now regularly use as a tool. When Google released a recent NotebookLM update that includes, among other things, mind maps, I thought it was time to bring together something at the very outer edges of my understanding and this bleeding-edge artificial intelligence capability.

So I created a String Theory Podcast.

First, a tiny primer on NotebookLM. It is a powerful AI-based research tool in which you can upload sources, and it will turn them into summaries and extrapolated information in the form of text, podcasts, and visual guides like mind maps.

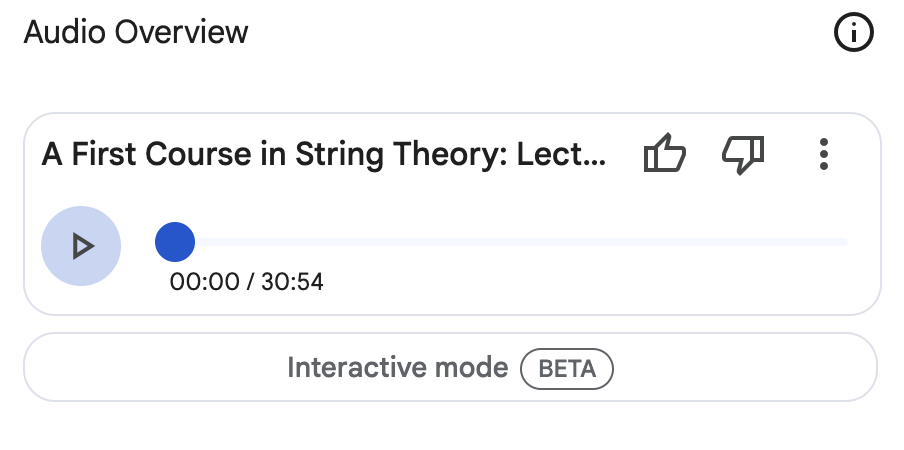

For me, the most fascinating bit has been the podcasts or "Audio Overviews", which churn out chatty audio conversations about virtually any topic you feed into them. I call it a podcast because the audio style walks a well-worn path of most popular podcast series. It's conversational, usually between two people, sometimes funny, and always accessible.

I've been wondering, though, if you can stretch the limits of the format with a topic so deep and, honestly, confusing, that the resulting podcast would be conversational nonsense.

My experiment, however, proved that while the current version of NotebookLM has its limits, it's far better at comprehending dense science bits than me and probably most people you or I know.

Once I decided I wanted NotebookLM to help me with the topic, I went in search of string theory content (there's a lot more of it online than you might think), quickly stumbling on this 218-page research paper from 2009 by University of Cambridge researcher Dr. David Tong.

I scanned the doc and could tell that it was rich with string theory detail, and so far over my head, it probably resides somewhere near the rings of Saturn.

Imagine trying to read this document and make sense of it. Maybe if someone explained it to me, I'd understand. Maybe.

I downloaded the PDF and then fed it into NotebookLM, where I requested a podcast and a mind map.

It took almost 30 minutes for NotebookLM to create the podcast, and I must admit, I was a little anxious as I opened it. What if this mass of detail on one of physics' most confounding topics overwhelmed Google's AI? Might the hosts just be babbling incoherently?

I shouldn't have worried.

I'd heard these podcast hosts before: a somewhat vanilla pair (a man and a woman) who banter casually, while making witty asides. In this case, they were trying to explain string theory to the uninitiated.

They started by talking about how they'd walk through the topic, covering bits like general relativity, quantum mechanics, and how, at least as of 2009, we had never directly observed these "strings". Earlier this month, some physicists claimed that they had, in fact, found the "first observational evidence supporting string theory." But I digress.

The hosts spoke like physics experts, but, where possible, in layman's terms. I quickly found myself wishing they had a guest. The podcast would've worked better if they were proxies for me, not understanding much at all, and had an AI-generated expert to interview.

Stringing it all together

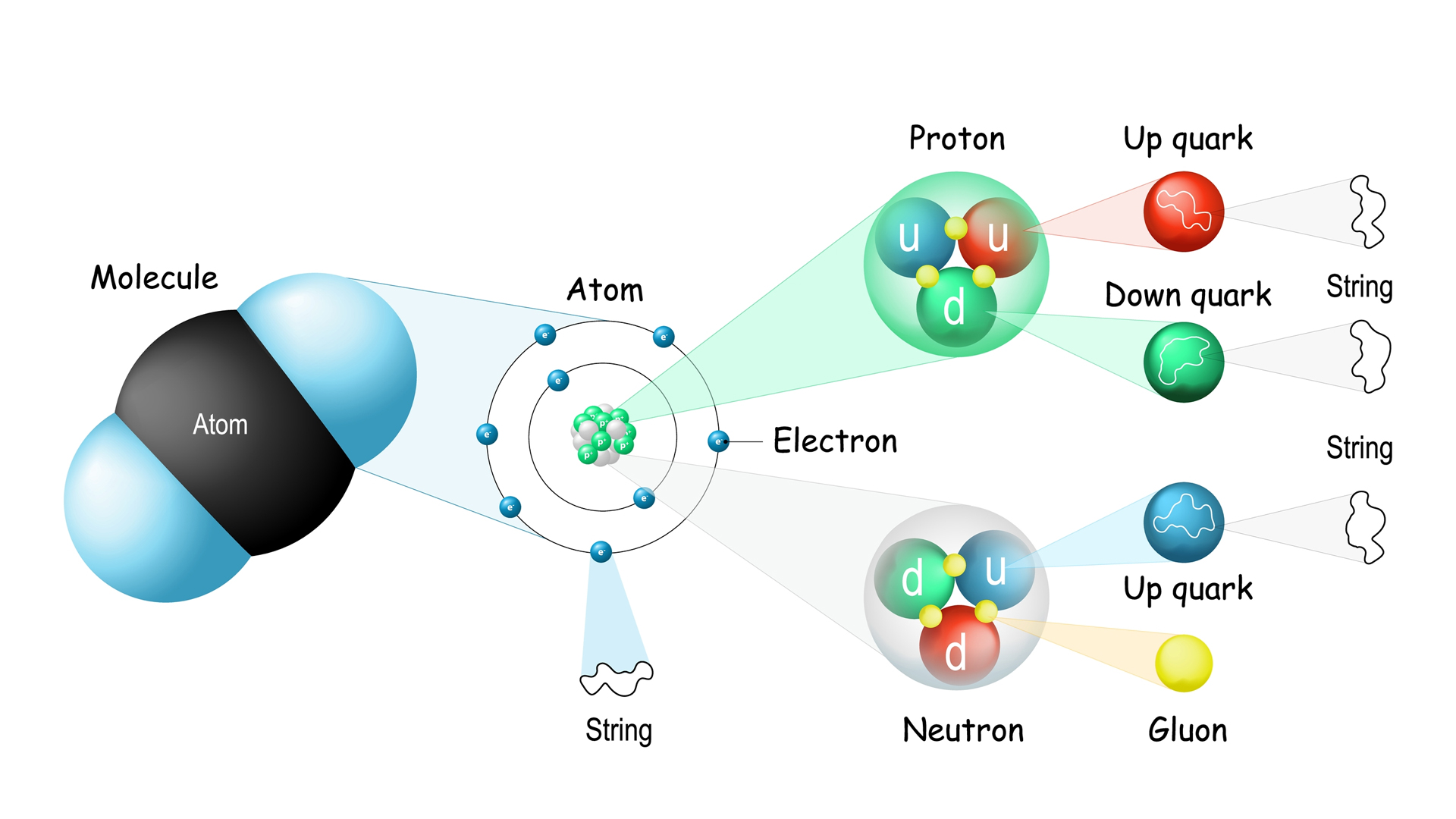

As the podcast progressed, the hosts dug into the details of string theory, specifically, the definition of a "string." They described them as tiny objects that vibrate and added, "all stuff in the universe comes from how tiny strings are vibrating."

Things got more complex from there, and while the AI podcast hosts' tone never changed, I struggled to follow along. I still can't tell you what "relativistic point particle viewed through Einstein's special relativity" really means. Though I did appreciate the analogy of "imagine a string moving through space time."

The AI hosts used various tricks to keep me engaged and not completely confused. The male host would, like a podcast parrot, often repeat a bit of what the female host had just explained, and use some decent analogies to try to make it relatable.

At times, the female host lapsed into what sounded like she was reading straight out of the research paper, but the male host was always there to pull her back to entertainment mode. He did a lot of chatty summarizing.

I felt like I reconnected to the whole thing when they explained how "string morphed into the theory of everything" and added, "bosons and fermions, partners in crime due to supersymmetry."

This was heavy

After 25 minutes of this, my head was stuffed to the point of bursting with those still-theoretical strings and spinning with terms such as "vertex operators" and "holomorphic."

I hoped for a grand and glorious summary at the end, but the podcast abruptly ended at almost 31 minutes. It cut off as if the hosts ran out of steam, ideas, or information, and walked away from the mics in frustration and without signing off.

In some ways, it feels like this is my fault. After all, I forced these SIMs to learn all this stuff and then explain it to me, because I could never do it. Maybe they got fed up.

I also checked out the mind maps, which are branching diagrams that can help you map out and represent complex topics like string theory. As you can imagine, the mind maps for this topic start simple but get increasingly complex as you expand each branch. Still, they're a nice study companion to the podcast.

It's also worth noting that I could enrich the podcast and mind maps with other research sources. I would simply add them into the sources panel in NotebookLM and rerun the "audio overview".

A real expert weighs in

For as much as I learned and as much as I trust the source material, I wondered about the podcast's accuracy. AI, even with solid information, can hallucinate, or at least misinterpret. I tried contacting the paper's author, Dr. Tong, but never heard back. So, I turned to another physics expert, Michael Lubell, Professor of Physics at City College of CUNY.

Dr. Lubell agreed to listen to the podcast and give me some feedback. A week later, he emailed me this brief note, "Just listened to the string theory podcast. Interestingly presented, but it requires a reasonable amount of expertise to follow it."

When I asked about any obvious errors, Lubell wrote, "Nothing obvious, but I’ve never done string theory research." Fair enough, but I'm willing to bet Lubell understands and knows more about string theory than I do.

Perhaps the AI podcasters now know more about the subject than either of us.

Is your Windows 11 PC crashing a lot since the latest update? Microsoft admits bug with blue screen crashes, and has rushed out a fix

- Windows 11 24H2 users have recently been experiencing Blue Screen of Death crashes

- These have been caused by the recent March and April cumulative updates

- Microsoft has rushed out a fix that will be applied to 24H2 PCs automatically

Microsoft has confirmed that Windows 11 24H2 has a problem with Blue Screen of Death (BSOD) crashes caused by the latest updates for the OS, and a fix has been hastily pushed through.

Those who are running the new April update for version 24H2 of Windows 11 – or the previous March patch (or the optional update from the end of March, which was the April upgrade in testing) – may encounter these random lock-ups which come with a ‘Secure Kernel Error’ message.

Windows Latest picked up on this and also points out that a further error message may be displayed (‘Critical Process Died’), but whatever the exact flavor of this mishap you experience, your PC is going to be frozen up like a lump of so much useless ice, and will need rebooting to thaw it.

Microsoft has already applied the resolution for this bug, as mentioned at the outset, and that fix is conveniently delivered as a Known Issue Rollback or KIR. What this means is that the cure will be piped through to your Windows 11 PC automatically, and you don’t have to do anything.

However, it may take a little time, as Microsoft explains, and there may be up to a 24-hour wait for the rollback to reach your machine (and tweak it so the blue screen crashes are no longer a problem).

While this bug first crawled into the inner workings of Windows 11 with the March update (over a month ago) for 24H2, apparently it wasn’t until this month’s April update that the glitch started to affect a wider range of users, Windows Latest informs us. That put the bug more firmly on Microsoft’s radar, and the company got a fix in place quickly (which likely indicates that this was a fairly prevalent bug, to get such a swift response).

As noted, you may have to wait a little while for the fix to be automatically applied to your Windows 11 system, but it’s likely to have come through at this point. If not, Microsoft suggests that rebooting your PC may prompt Windows 11 to find the KIR patch and grab it, so that’s worth a whirl.

You should also bear in mind that this is not the full fix, as the KIR just disables the problem-causing part of the recent Windows 11 updates (Microsoft doesn’t clarify what that is). A “final resolution” is coming – one that’ll reenable whatever change was made in the original updates, minus the blue screen nonsense (hopefully) – and Microsoft says that’s going to be part of a Windows update in the future.

The April patch for Windows 11 24H2 has also been causing headaches elsewhere, primarily due to the appearance of a mysterious folder which is actually part of a security fix (in a very odd fashion). Those who deleted the empty folder, which seemed like a harmless enough course of action to some, have been advised to put it back in no uncertain terms.

You may also like

- Here's why you should reinstall Windows 11 every two months - no, I'm not kidding

- Windows 11 is getting a very handy change to the taskbar, as Microsoft takes a leaf from Apple’s Mac playbook

- Windows 11 fully streamlined in just two clicks? Talon utility promises to rip all the bloatware out of Microsoft’s OS in a hassle-free way

ChatGPT just got a massive upgrade that takes it to the next level – here are the 3 biggest changes

- o3 and o4-mini are out for Pro, Plus and Team users now; free users can try o4-mini, too

- They can combine and use every tool in ChatGPT's arsenal

- o3 and o4-mini add reasoning using images to ChatGPT's abilities

OpenAI has just given ChatGPT a massive boost with new o3 and o4-mini models that are available to use right now for Pro, Plus, Team and even free tier users.

The new models significantly improve the performance of ChatGPT, and are much quicker at reasoning tasks than the earlier OpenAI reasoning models like ChatGPT o3-mini and o1.

Most importantly, they can intelligently decide which of OpenAI’s various tools to use to complete your query, including a new ability to reason with images.

OpenAI provided a livestream for the release.

Here are the three most important changes:

1. Combining tools

Both the new reasoning models can agentically use and combine every tool within ChatGPT. That means they have access to all of ChatGPT’s box of tricks, including web browsing, Python coding, image and file analysis, image generation, canvas, automations, file search and memory.

The important thing though is that ChatGPT now decides if it needs to use a tool itself based on what you’ve asked.

When you ask ChatGPT to do something complicated using the new models, it shows you each step it is taking, and which tool it is using, and how it arrived at that decision.

Once it has done all the research, the notes on its working-out process disappear and you get a report on its conclusions.

2. Better performance

The way that o3 and o4-mini can intelligently decide which tools to use is a step towards the intelligent model switching we’ve been promised with ChatGPT 5, when it finally arrives.

As you’d expect from advanced reasoning models, the report you get at the end is extremely detailed, and contains links to all sources used.

According to OpenAI, “The combined power of state-of-the-art reasoning with full tool access translates into significantly stronger performance across academic benchmarks and real-world tasks, setting a new standard in both intelligence and usefulness.”

The real-world result of this is that these models can tackle multi-faceted questions more effectively, so don’t be afraid to ask them to perform several actions at once and produce an answer or report that combines several queries.

3. Reasoning with images

Both new models are the first released by OpenAI that integrate uploaded images into their chain of thought. They actually reason using the images, so, for example, you could upload a picture of some cars and ask for the make and model of each, then how much retail value they will hold in five years’ time.

This is the first time that ChatGPT has been able to integrate images into a reasoning chain, and it represents a real step forward for multimodal AI.

Introducing OpenAI o3 and o4-mini – our smartest and most capable models to date. For the first time, our reasoning models can agentically use and combine every tool within ChatGPT, including web search, Python, image analysis, file interpretation, and image generation. pic.twitter.com/rDaqV0x0wE (April 16, 2025)

My verdict

I’ve tried the new models on the Plus tier and I’m impressed with both the speed and comprehensiveness of the answers to my queries. While I’ve always enjoyed the depth of reasoning that the o1 and o3-mini models have provided, it’s always meant waiting longer for a response.

The o3 model has now become my default model to choose on Plus because it’s fast enough that I don’t feel like I’m waiting around too long for an answer, but I get a satisfying amount of detail.

In short, I’m impressed. The new models feel like a natural evolution of ChatGPT into something that’s smarter and more capable. I also like the way that it can decide which of ChatGPT’s various tools it needs to use to provide the best answer.

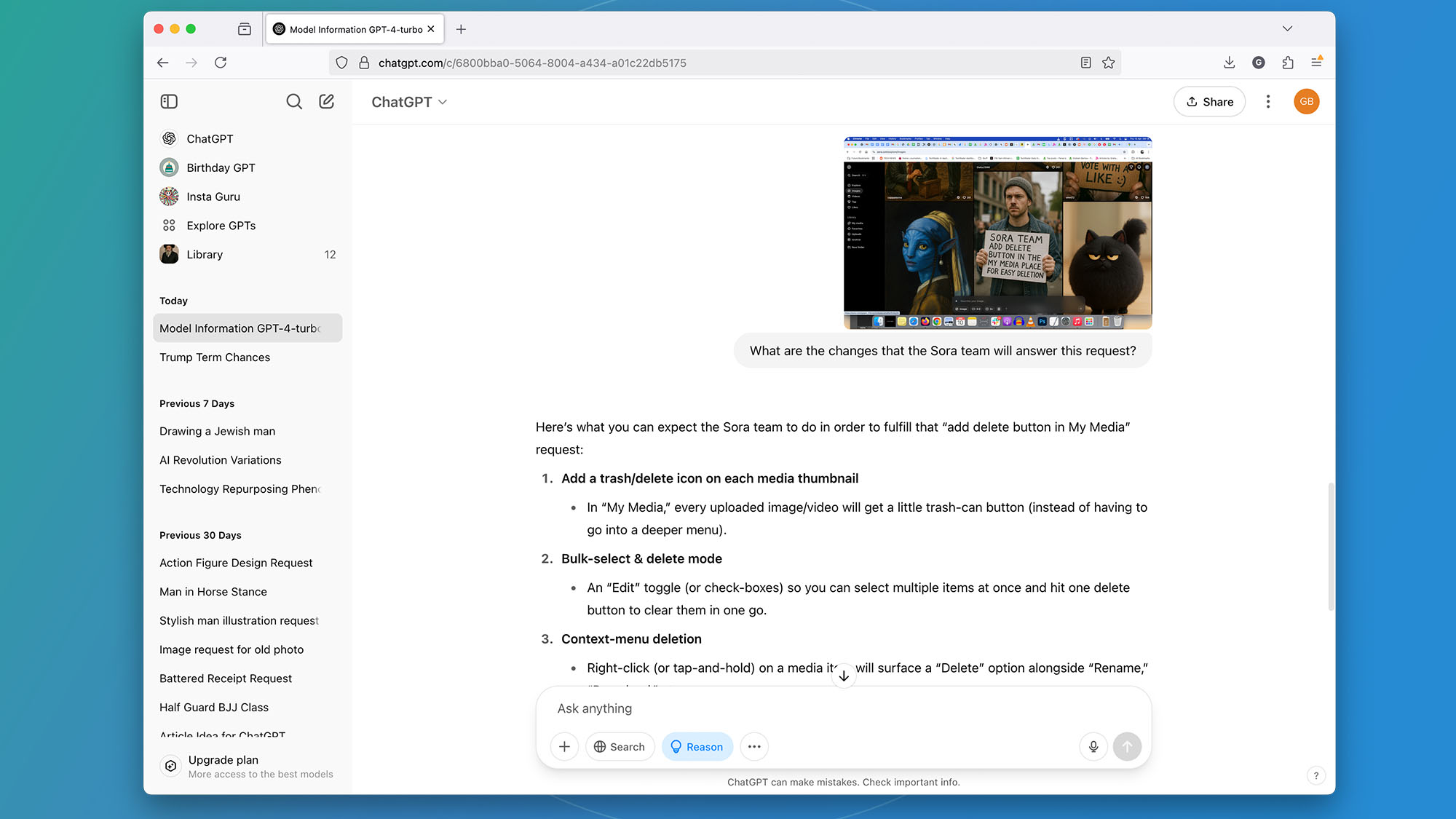

Trying it for yourself

Here’s how to try the new ChatGPT models for yourself:

Plus, Pro and Team users can select ChatGPT o3, ChatGPT o4-mini and ChatGPT o4-mini-high from the drop-down LLM menu inside ChatGPT. Free tier users can get access to o4-mini by selecting the Reason button in the composer before submitting their query. Edu users will gain access in one week.

There’s no visual notification telling free tier users that they are now using the o4-mini reasoning model, but if you click the button and ask ChatGPT which LLM it is using, it now says o4-mini.

Free tier users will face a rate limit on how many times they can use the Reasoning feature; the limit for Plus users is much higher.

OpenAI say they expect to release o3-pro “in a few weeks”, with full tool support. Pro users can already access o1-pro.

You may also like

- You can't hide from ChatGPT – new viral AI challenge can geo-locate you from almost any photo – we tried it and it's wild and worrisome

- ChatGPT spends 'tens of millions of dollars' on people saying 'please' and 'thank you', but Sam Altman says it's worth it

- I tried Claude's new Research feature, and it's just as good as ChatGPT and Google Gemini's Deep Research features

- Claude tipped to get its answer to ChatGPT’s Advanced Voice Mode soon - is adding an AI voice to a chatbot yet another tick box exercise?

ChatGPT spends 'tens of millions of dollars' on people saying 'please' and 'thank you', but Sam Altman says it's worth it

- OpenAI CEO says saying "Please" or "Thank You" to ChatGPT costs the company 'Tens of millions of dollars'

- A Future survey found that roughly 70% of people are polite to AI

- Experts believe being polite to AI is actually beneficial to the responses you receive, but at what cost?

Do you say "Please" or "Thank you" to ChatGPT? If you're polite to OpenAI's chatbot, you could be part of the user base costing the company "Tens of millions of dollars" on electricity bills.

User @tomiinlove wrote on X, "I wonder how much money OpenAI has lost in electricity costs from people saying 'please' and 'thank you' to their models."

OpenAI CEO, Sam Altman, responded, "Tens of millions of dollars well spent - you never know." Thanks for lowering the world's anxiety around an AI uprising, Sam. We'll all be sure to waste even more energy by saying "Please" or "Thank You" from now on.

In February, Future PLC, the company that owns TechRadar, compiled a survey of more than 1,000 people on their AI etiquette. The survey found that around 70% of people are polite to AI when interacting with it, with 12% being polite in case of a robot uprising.

Obviously, there's an energy cost to using ChatGPT, which relies on massive servers to run the whole operation. But as these tools grow in popularity, are most of us even aware that one simple message, or one AI-generated meme, is impacting the planet?

TechRadar reached out to OpenAI for comment, and we'll update this story when we hear back.

tens of millions of dollars well spent--you never know (April 16, 2025)

Should we be polite to AI?

If being polite to AI can have such an impact on energy consumption, should we even bother being nice to ChatGPT?

Presumably, the 'Tens of millions of dollars' Altman speaks of come from users saying "Please" or "Thank You" in a standalone message rather than at the end of a prompt. Hopefully, OpenAI will respond to our query and give us a better understanding of how people frame these particular messages.

TechRadar writer Becca Caddy stopped saying thanks to ChatGPT and found that being polite to an AI chatbot might actually help with responses.

In her article, she wrote, "Polite, well-structured prompts often lead to better responses, and in some cases, they may even reduce bias. That’s not just a bonus – it’s a critical factor in AI reliability.

"As AI evolves, it will be fascinating to see whether politeness itself becomes a built-in feature. Could AI favor users who communicate respectfully? Will models be trained to respond differently based on etiquette?"

So while it may not be energy-efficient, being polite to AI could in fact give you a better experience while interacting with ChatGPT. But is it worth the environmental cost?

You might also like

- It's now almost impossible to tell if ChatGPT images are fake, which is a problem

- ChatGPT's new AI image capabilities are amazing, but frustrating to use

- Avoiding ChatGPT won't keep OpenAI from infusing its AI models into your life

- I fed NotebookLM a 218-page research paper on string theory and the podcast results were mind-blowing

- You can't hide from ChatGPT – new viral AI challenge can geo-locate you from almost any photo – we tried it and it's wild and worrisome

What Mark Zuckerberg’s Washington, D.C., Mansion Says About His Ideological Shifts

Mark Zuckerberg’s political ideology and tastes have evolved. His real estate portfolio reflects the shift.

What is the Quantum Financial System (QFS)?

https://www.tokenmetrics.com/blog/quantum-financial-system

Uncover the intricacies of the Quantum Financial System and gain insight into its functionality. A comprehensive guide to its workings and impact.

(Feed generated with FetchRSS)

US Officials Target Nvidia and DeepSeek Amid Fears of China’s A.I. Progress

China’s success in artificial intelligence has the Trump administration and lawmakers weighing rules and investigations to slow Beijing’s progress in the industry.

OpenAI Unveils New ‘Reasoning’ Models o3 and o4-mini

The company also introduced a new tool that helps computer programmers use chatbots when writing code.