TechRadar

Starship blooper: Windows 10 update gets weirdest bug yet

- Windows 10 recently got a patch tinkering with the Recovery Environment

- Some users are seeing an error message saying the patch failed to install

- In fact, the update installed just fine, and the error message is the error

Some Windows 10 users are encountering an error message after applying a fresh patch for the operating system, informing them that the update failed – when in fact it didn’t.

Neowin spotted the update in question (known as KB5057589) which was released last week (separately from the main cumulative update for April) and tinkers with the Windows Recovery Environment (WinRE) on some Windows 10 PCs (versions 21H2 and 22H2).

Far from all Windows 10 users will get this, then, but those who do might be confronted by an error message after it has installed (which is visible in the Windows Update settings page).

It reads: “0x80070643 – ERROR_INSTALL_FAILURE.”

That looks alarming, of course, and seeing this, you’re going to make the fair assumption that the update has failed. However, as mentioned, the error isn’t with the update, but the actual error message itself.
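The code in that message has a standard structure. 0x80070643 follows the usual Windows HRESULT layout: it wraps the generic Win32 error 1603, which is ERROR_INSTALL_FAILURE. The short Python sketch below decodes it. The helper names are illustrative and not part of any Windows API, and decoding the code tells you nothing about whether your particular update actually installed.

```python
# Illustrative helpers (hypothetical names) that pick apart a Windows HRESULT.
# The bit layout mirrors the HRESULT_SEVERITY / HRESULT_FACILITY / HRESULT_CODE
# macros from winerror.h.
FACILITY_WIN32 = 7
ERROR_INSTALL_FAILURE = 1603  # Win32 error code behind the on-screen message


def decode_hresult(hr: int) -> tuple[int, int, int]:
    """Return (severity, facility, code) for a 32-bit HRESULT."""
    severity = (hr >> 31) & 0x1   # 1 means failure
    facility = (hr >> 16) & 0x1FFF
    code = hr & 0xFFFF            # low 16 bits hold the Win32 error
    return severity, facility, code


def hresult_from_win32(err: int) -> int:
    """Python equivalent of the HRESULT_FROM_WIN32 macro for err > 0."""
    return (err & 0xFFFF) | (FACILITY_WIN32 << 16) | 0x80000000


if __name__ == "__main__":
    print(decode_hresult(0x80070643))                      # (1, 7, 1603)
    print(hex(hresult_from_win32(ERROR_INSTALL_FAILURE)))  # 0x80070643
```

In other words, the message is a generic "installer reported failure" code, which is why it looks so alarming even when, as Microsoft says here, the WinRE update was applied.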

Microsoft explains: “This error message is not accurate and does not impact the update or device functionality. Although the error message suggests the update did not complete, the WinRE update is typically applied successfully after the device restarts.”

Microsoft further notes that the update may continue to display as ‘failed’ (when it hasn’t) until the next check for updates, after which the error message should be cleared from your system.

There’s nothing wrong here, in short, except the error itself, but that’s going to confuse folks, and maybe send them down some unnecessary – and potentially lengthy – rabbit holes in order to find further information, or a solution to a problem that doesn’t exist.

What compounds the trouble is that the whole WinRE debacle has been a long-running affair. The most recent previous patch for this arrived in January 2025, and there were others before that, with some folks seeing this WinRE fix install repeatedly, which is confusing in itself.

That’s why those rabbit holes that you might get lost down could end up seeming so deep if you don’t manage to catch Microsoft’s clarification on this matter.

Microsoft says it’s working to resolve this errant error and will let us know when that happens. At least you’re now armed with the knowledge that the update should be fine despite what the error – in block capitals plastered across your screen – tells you (and it should be cleared from your PC in a short time).

James Cameron thinks VR is the future of cinema, but Meta needs to solve a major content problem first

- James Cameron sat down with Meta's CTO Andrew Bosworth on Bosworth's podcast to talk VR cinema

- Cameron says VR allows him to finally show his films the way they should be seen

- He teased the Quest 4, but Bosworth stopped Cameron revealing too much

James Cameron, the Hollywood director behind Titanic, The Terminator and Avatar, believes VR headsets are the future of cinema, based on his experience with next-gen Meta Quest headsets that he isn’t allowed to talk about.

And, Meta Quest 4 teasers aside, I think he’s got a point – but Meta needs to make some big changes before Cameron's vision can become a reality (and I’m not talking about its hardware).

In a sit-down interview with Meta CTO Andrew Bosworth on Bosworth’s Boz to the Future podcast, Cameron was keen to serve as VR cinema’s hype-man.

Cameron explained that VR headsets allow you to get all the benefits of a movie theatre – the curated, 3D, immersive experience – without the downsides – such as a “dim and dull” picture – resulting in an end product that much more closely matches the creator’s vision for the film.

“It was like the heavens parted, light shone down,” Cameron told Bosworth. “There was an angel choir singing. It's like, 'Ah'! This is how people can see the movie the way I created it to be seen!”

It seems that Cameron isn’t simply using a Meta Quest 3 or Quest 3S to enjoy his 3D movies either; instead he’s using some Quest prototypes that Andrew Bosworth wasn’t keen for him to talk more about.

While he couldn’t reveal much beyond the prototypes’ existence – which isn’t much considering that Meta very openly develops VR headset prototypes to inspire future designs (and even lets people try them from time to time at tech events) – we do know that the experience is apparently “at least as good as Dolby Laser Vision Cinema” according to Cameron.

It’s the “ne plus ultra” (read: ultimate) theater option according to the director, suggesting that Meta is focusing on visual performance with its prototypes, and therefore possibly making that the main upgrade for the Meta Quest 4 or Meta Quest Pro 2.

As with all leaks and rumors, we can’t read too much into Cameron’s comments. Even with these prototypes, Meta could focus on other upgrades instead of the display, or it could be designing for the Quest 5 or Quest Pro 3, but given that previous leaks have teased that upcoming Meta headsets will pack an OLED screen, it feels safe to assume that visual upgrades are inbound.

That will certainly be no bad thing – in fact it would be a fantastic improvement to Meta’s headsets – but if Meta wants to capture the home cinema experience it shouldn’t just focus on its screens, it needs to focus on content too.

VR's 3D film problem

I’ve previously discussed how it’s an open secret that the simplest (and really the only) way to watch blockbuster 3D movies on a Quest headset involves some level of digital piracy.

3D movie files are difficult to acquire, and 3D movie rental services from the likes of Bigscreen aren’t currently available. And I’ve also complained about how absurd this is because, as James Cameron points out, using your VR headset for cinema is superb: it immerses you in your own portable, private theater.

So while the prospect of the Meta Quest 4 boasting high-end displays for visual excellence is enticing, I’m more concerned about how Meta will tackle its digital content library issue.

The simplest solution would be to form streaming deals like Apple did with Disney Plus. Disney’s service on the Vision Pro allows users to watch Disney’s 3D content library at no additional charge – though it frustratingly appears to be some kind of exclusivity deal, based on the fact the same benefits are yet to roll out to other headsets or the best AR smart glasses for entertainment.

Another option – which Cameron points to – is for Meta to make exclusive deals with creatives directly, so they create new 3D films just for Quest, although worthwhile films take time (and a lot of money) to produce, meaning that Meta’s 3D catalog can’t rely on fresh exclusives alone.

Hopefully this podcast is a sign that Meta is looking to tackle the 3D movies in VR problem from all sides – both hardware and software – as VR entertainment can be superb.

While it is more isolated than the usual home theater experience, the immersive quality of VR, combined with its ability to display your show or film of choice on a giant virtual screen, is a blast.

At the moment the big drawback is the lack of content – but here’s hoping that’s about to change.

Grok may start remembering everything you ask it to do, according to new reports

- xAI’s Grok 3 chatbot has added a voice mode with multiple personalities

- One personality is called “unhinged” and will scream and insult you

- Grok also has personalities for NSFW roleplay, crazy conspiracies, and an “Unlicensed Therapist” mode

xAI’s Grok may be about to start remembering your conversations as part of a broader slate of updates rolling out, all of which seek to match ChatGPT, Google Gemini, and other rivals. Elon Musk’s company tends to pitch Grok as a plucky upstart in a world of staid AI tools; it also seems to be aiming for parity on features like memory, voice, and image editing.

As spotted by one user on X, it appears that Grok will get a new "Personalise with Memories" switch in settings. This would be a big deal if it works and mark a shift from momentary utility to long-term reliability. Grok's reported memory system, which is still in development but already appearing in the web app, will allow Grok to reference previous chats.

This means if you’ve been working with it on something like planning a vacation, writing a screenplay, or just keeping track of the name of that documentary you wanted to watch, Grok could say, “Hey, didn’t we already talk about this?”

Grok’s memory is expected to be user-controlled as well, which means you’ll be able to manage what the AI remembers and delete specific memories or everything Grok has remembered all at once. That’s increasingly the standard among AI competitors, and it’ll likely be essential for trust, especially as more people start using these tools for work, personal planning, and remembering which child prefers which bedtime story.

This should put Grok more or less on par with what OpenAI has done with ChatGPT’s memory rollout, albeit on a much shorter timeline. The breakneck pace is part of the pitch for Grok, even when it doesn't quite work yet. Some users have reported already seeing the memory feature available, but it's not available to everyone yet, and the exact rollout schedule is unclear.

Remember Grok

Of course, giving memory to a chatbot is a bit like giving a goldfish a planner, meaning it’s only useful if it knows what to do with it. Even so, xAI seems to be layering memory into Grok Web in tandem with a handful of other upgrades that lean toward making it feel more like an actual assistant and less like a snarky trivia machine.

This memory update is starting to appear as a range of other Grok upgrades loom on the horizon. Grok 3.5 is expected any day now, with Grok 4 slotted for the end of the year.

There’s also a new vision feature in development for Grok’s voice mode, allowing users to point their phones at things and hear a description and analysis of what's around them.

It's another feature that ChatGPT and Gemini users will find familiar, and Grok’s vision tool is still being tested. Upgrades are also coming to the recently released image editing feature that lets users upload a picture, select a style, and ask Grok to modify it.

It’s part of the ongoing competition among AI chatbots to make AI models artistically versatile. Combine that with the upcoming Google Drive integration, and Grok starts to look a little more serious as a competitor.

Also on the horizon is Grok Workspaces, a kind of digital whiteboard for collaborating with Grok on a more significant project. These updates suggest that xAI is pivoting to make Grok seem less like a novelty and more like a necessity. xAI clearly sees Grok’s future as being more useful than just a set of sarcastic and mean voice responses.

Still, even as Grok gains these long-awaited features, questions remain about whether it can match the depth and polish of its more established counterparts. It’s one thing to bolt a memory system onto a chatbot. It’s another thing entirely to make that memory meaningful.

Whether Grok becomes your go-to assistant or stays a curious toy used only when some aspect goes viral depends on how well xAI can connect all these new capabilities into something cohesive, intuitive, and a little less chaotic. But for now, at least, it finally remembers your name.

Apple has a plan for improving Apple Intelligence, but it needs your help – and your data

Apple Intelligence has not had the best year so far, but if you think Apple is giving up, you're wrong. It has big plans and is moving forward with new model training strategies that could vastly improve its AI performance. However, the changes do involve a closer look at your data – if you opt in.

In a new technical paper from Apple's Machine Learning Research, "Understanding Aggregate Trends for Apple Intelligence Using Differential Privacy," Apple outlined new plans for combining data analytics with user data and synthetic data generation to better train the models behind many Apple Intelligence features.

Some real data

Up to now, Apple's been training its models on purely synthetic data, which tries to mimic what real data might be like, but there are limitations. With Genmoji, for instance, Apple's synthetic data doesn't always reflect how real users engage with the system. From the paper:

"For example, understanding how our models perform when a user requests Genmoji that contain multiple entities (like “dinosaur in a cowboy hat”) helps us improve the responses to those kinds of requests."

Essentially, if users opt in, the system can poll the device to see if it has seen a data segment. However, your phone doesn't respond with the data; instead, it sends back a noisy and anonymized signal, which is apparently enough for Apple's model to learn from.
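The article doesn't detail Apple's exact mechanism, so purely as an illustration, here is randomized response, a textbook local differential privacy technique that captures the idea of a "noisy signal". Each device answers "have you seen this fragment?" truthfully only with a certain probability, and the server corrects for the known noise when it adds up the answers. The epsilon value, the 30% figure and the function names are assumptions for the sketch, not Apple's implementation.

```python
import math
import random


def randomized_response(seen: bool, epsilon: float, rng: random.Random) -> bool:
    """Device side: report the true bit with prob e^eps / (e^eps + 1), else flip it."""
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1)
    return seen if rng.random() < p_truth else not seen


def estimate_fraction(reports: list[bool], epsilon: float) -> float:
    """Server side: unbiased estimate of the true 'yes' fraction from noisy reports."""
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1)
    observed = sum(reports) / len(reports)
    # observed = f * p + (1 - f) * (1 - p)  =>  solve for f
    return (observed - (1 - p_truth)) / (2 * p_truth - 1)


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical population: roughly 30% of devices have seen the fragment.
    truth = [rng.random() < 0.3 for _ in range(100_000)]
    reports = [randomized_response(t, epsilon=1.0, rng=rng) for t in truth]
    true_rate = sum(truth) / len(truth)
    print(f"true: {true_rate:.3f}, estimated: {estimate_fraction(reports, 1.0):.3f}")
```

No single report can be trusted, since any given answer may have been flipped, yet across many devices the aggregate trend comes through. A smaller epsilon means more flipping, stronger privacy and a noisier estimate.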

The process is somewhat different for models that work with longer texts, like Writing Tools and summarizations. In this case, Apple generates synthetic data, and then sends a representation of that synthetic data to users who have opted into data analytics.

On the device, the system then compares these representations against samples of recent emails.

"These most-frequently selected synthetic embeddings can then be used to generate training or testing data, or we can run additional curation steps to further refine the dataset."
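Roughly, the selection step can be pictured like this. It's a deliberately simplified sketch under several assumptions: tiny hand-made vectors stand in for real embeddings, cosine similarity is assumed as the comparison, the function names are hypothetical, and the differential privacy noise applied before anything leaves the device is left out to keep the example short.

```python
import math
from collections import Counter

Vector = list[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def closest_synthetic(local_sample: Vector, synthetic: list[Vector]) -> int:
    """Hypothetical on-device step: index of the synthetic embedding nearest a local sample."""
    return max(range(len(synthetic)), key=lambda i: cosine(local_sample, synthetic[i]))


def most_selected(votes: list[int], top_k: int = 1) -> list[int]:
    """Hypothetical server step: synthetic embeddings chosen most often across devices."""
    return [idx for idx, _ in Counter(votes).most_common(top_k)]


if __name__ == "__main__":
    synthetic = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]  # toy "synthetic" embeddings
    device_samples = [[0.9, 0.1], [0.8, 0.3], [0.1, 0.9], [0.95, 0.05]]  # toy local samples
    votes = [closest_synthetic(s, synthetic) for s in device_samples]
    print(votes, most_selected(votes))
```

The point of the design is that only a choice among Apple's own synthetic examples is reported, never the email that prompted it.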

A better result

It's complicated stuff. The key, though, is that Apple applies differential privacy to all the user data, a technique that adds carefully calibrated noise so that the results can't be reliably traced back to any individual user.

Still, none of this works if you don't opt into Apple's Data Analytics, which usually happens when you first set up your iPhone, iPad, or MacBook.

Doing so does not put your data or privacy at risk, and the resulting training should lead to better models and, hopefully, a better Apple Intelligence experience on your iPhone and other Apple devices.

It might also mean smarter and more sensible rewrites and summaries.

5 reasons why Apple making iPadOS 19 more like macOS is a great idea – and 3 reasons why it could be a disaster

Notorious Apple leaker Mark Gurman has reported that Apple is planning a major overhaul of iPadOS (the operating system iPads use) to make it work a lot like macOS – and I think this could be a great move, though one that also comes with plenty of danger.

Gurman is very well respected when it comes to Apple leaks, so while we probably won’t get any official idea of how iPadOS 19 is shaping up until Apple’s WWDC event in June, this could still be a big hint at the direction Apple is planning to take its tablet operating system.

In his weekly Power On newsletter for Bloomberg, Gurman claims that “this year’s upgrade will focus on productivity, multitasking and app window management — with an eye on the device operating more like a Mac,” and that Apple is keen to make its operating systems (macOS, iPadOS, iOS and visionOS primarily) more consistent.

As someone who uses the M4-powered iPad Pro, this is music to my ears. Ever since I reviewed it last year, I’ve been confused by the iPad Pro. It was Apple’s first product to come with the M4 chip, a powerful bit of hardware that is now more commonly found in Macs and MacBooks (earlier M-series chips debuted in Macs before making their way to iPads).

However, despite offering the kind of performance you’d expect from a MacBook, I found the power of the M4 chip largely went to waste in the iPad Pro, because the tablet still runs iPadOS and is confined to simplified iPad apps rather than full desktop applications.

Even if this move still means you can’t run macOS apps on the iPad Pro, it could still make a massive difference, especially when it comes to multitasking (running multiple apps at the same time and switching between them). If Apple nails this, it would go a long way to making the iPad Pro a true MacBook alternative.

But, making iPadOS more like macOS could bring downsides as well, so I’ve listed five reasons why this could be a great move – and three reasons why it could all go wrong.

5 reasons why making iPadOS more like macOS is a great idea

1. It means the iPad Pro makes more sense

The biggest win when it comes to making iPadOS more like macOS is with the powerful iPad Pro. Hardware-wise, the iPad Pro is hard to fault, with a stunning screen, thin and light design, and powerful components.

However, despite its cutting-edge hardware, it can only run iPad apps. These are generally simple and straightforward apps that have been designed to be used with a touchscreen. These apps also need to run on less powerful iPads.

This means advanced features are often left for the desktop version of the app, and any performance improvement owners of the iPad Pro get over people using, say, the iPad mini will be modest. Certainly, when I use the iPad Pro, it feels like a lot of its power and potential is limited by this – so a lot of the expensive hardware is going to waste.

Making iPadOS more like macOS could – in an ideal world – lead to the ability to run Mac applications on the iPad Pro. At the very least, it could mean some app designers make their iPad apps come with a Mac-like option.

If it means multitasking is easier, then that will be welcome as well. One of the things I struggled with when I tried using the iPad Pro for work instead of my MacBook was having multiple apps open at once and quickly moving between them. Cutting and pasting content between apps was particularly cumbersome, not helped by the web browser I was using (Chrome) being the mobile version that doesn’t support extensions.

It made tasks that would take seconds on a MacBook a lot more hassle – a critical problem that meant I swiftly moved back to my MacBook Pro for work.

2. It could be just in time for M5-powered iPad Pros

If, as rumored, this major change to iPadOS will be announced at Apple’s WWDC 2025 event, then it could nicely coincide with the rumored reveal of a new iPad Pro powered by the M5 chip.

While I’m not 100% convinced about an M5 iPad Pro, seeing as Apple is still releasing M4 devices, the timing would make sense. If Apple does indeed announce an even more powerful iPad Pro, then iPadOS, in its current form, would feel even more limiting.

However, if Apple announces both a new M5 iPad Pro and an overhaul of iPadOS to make use of this power, then that could be very exciting indeed. And, with WWDC being an event primarily aimed at developers, it could be a great opportunity for Apple to show off the new-look iPadOS and encourage those developers to start making apps that take full advantage of the new and improved operating system.

3. It makes it easier for Mac owners to get into the iPad ecosystem

Gurman’s mention of Apple wanting to make its operating systems more consistent is very interesting. One of Apple’s great strengths is in its ecosystem. If you have an iPhone, it’s more likely that you’ll get an Apple Watch over a different smartwatch, and it means you might also have an Apple Music subscription and AirPods as well.

Making iPadOS more like macOS (and iOS and other Apple operating systems) can benefit both Apple and its customers.

If a MacBook owner decides to buy an iPad (Apple’s dream scenario) and the software looks and works in a similar way, then they’ll likely be very happy as it means their new device is familiar and easy to use. And that could mean they buy even more products, which will again be just what Apple wants.

4. It would give iPadOS more of an identity

I don’t know about you, but I tend to think of iPadOS as just iOS (the operating system for iPhones) with larger icons. Maybe that’s unfair, but when the iPad first launched, it was running iOS, and even since the launch of iPadOS in 2019, there are only a handful of features and apps that don’t work on both operating systems.

By making iPadOS a combination of iOS and macOS, it would ironically mean that iPadOS would feel like a more unique operating system, and it could finally step out of the shadow cast by iOS while still benefitting from being able to run almost all apps found in the iPhone’s massive app library.

5. It could mean macOS becomes a bit more like iPadOS

iPadOS getting macOS features could work both ways – so could we get some iPad-like features on a Mac or MacBook? There are things that iPadOS does better, such as being more user-friendly for beginners and turning an iPad into a second display for a nearby MacBook. All this would be great to see in macOS.

Having the choice of a larger interface that works well with touchscreens could even pave the way for one of the devices people most request from Apple: a touchscreen MacBook.

3 reasons why making iPadOS more like macOS is a bad idea

1. It could overcomplicate things

One of iPadOS’ best features is its simplicity, and while I feel that simplicity holds back a device like the iPad Pro, for more casual users on their iPad, iPad mini, or iPad Air, that ease-of-use is a huge bonus.

If iPadOS were to become more like macOS, that could delight iPad Pro owners, but let’s not lose sight of the fact that the iPad Pro is a niche device that’s too expensive for most people. macOS-like features on an iPad mini, for example, just don’t make sense, and Apple would be silly to make a major change that annoys the majority of its customers to please just a few.

2. It could cause a divergence with iOS – and lead to fewer apps

The iPad initially launching with iOS was an excellent decision by Apple, as it meant that people who had bought the new product had instant access to thousands of iPhone apps.

While it wasn’t perfect at first (some apps didn’t work well with the iPad’s larger screen), it was likely much easier than if the iPad had launched with a completely new operating system that then needed developers to create bespoke applications for it.

Think of it this way: if you were an app developer with limited resources (both time and money), would you make an app for a system that already had millions of users or risk making an app for a new product with a tiny user base? The answer is simple – you’d go for the large user base (almost) every time, so if it hadn’t launched with iOS and access to the App Store, then the original iPad could have been a flop. Just look at Microsoft’s attempts with the Windows Phone – it needed developers to create a third version of their apps, alongside iOS and Android versions. Very few developers wanted to do that, which meant Windows Phone devices launched with far fewer apps than Android and iPhone rivals.

If iPadOS moves closer to macOS, could we see fewer apps make it to iPad? While iPads are incredibly popular, they are still nowhere near as popular as iPhones, so if devs have to choose between which audience to make an app for, you can bet it’ll be for the iPhone.

However, if future iPadOS apps will remain essentially iOS apps but with an optional macOS-like interface, that could still mean the new look is dead on arrival, as developers will prefer to concentrate on the interface that can be used by the widest audience rather than just iPad Pro users.

3. You’ll probably need expensive peripherals to make the most of it

iPadOS works so well because it’s been designed from the ground up to be used on a touchscreen device. You can buy a new iPad, and all you need to do is jab the screen to get going.

However, macOS is designed for keyboard and mouse/trackpad, so if you want to make the most out of a future version of iPadOS that works like macOS, you’re going to need to invest in peripherals – and some of them can be very expensive.

The Magic Keyboard for iPad Pro is a brilliant bit of kit that quickly attaches to the iPad and turns it into a laptop-like device with a physical keyboard and touchpad, but it also costs $299 / £299 / AU$499 – a hefty additional expense, and I can almost guarantee that to use any macOS-like features in iPadOS, you’ll really need some sort of peripherals. This will either make things too expensive for a lot of people, or if you choose a cheaper alternative such as a Bluetooth keyboard and mouse, it then takes away from the simplicity of using an iPad.

This could mean fewer people actually use the macOS-like elements, which in turn would mean there’s less incentive for app developers to implement features and designs that only a small proportion of iPad users will use.

So, I’m all for more macOS features for my iPad Pro – but I am also very aware that I am in the minority when it comes to iPad owners, and Apple needs to be careful not to lose what made the iPad so successful in the first place just to placate people (like me) who moan about iPads being too much like iPads. Maybe it would just be better if I stuck with my MacBook instead.

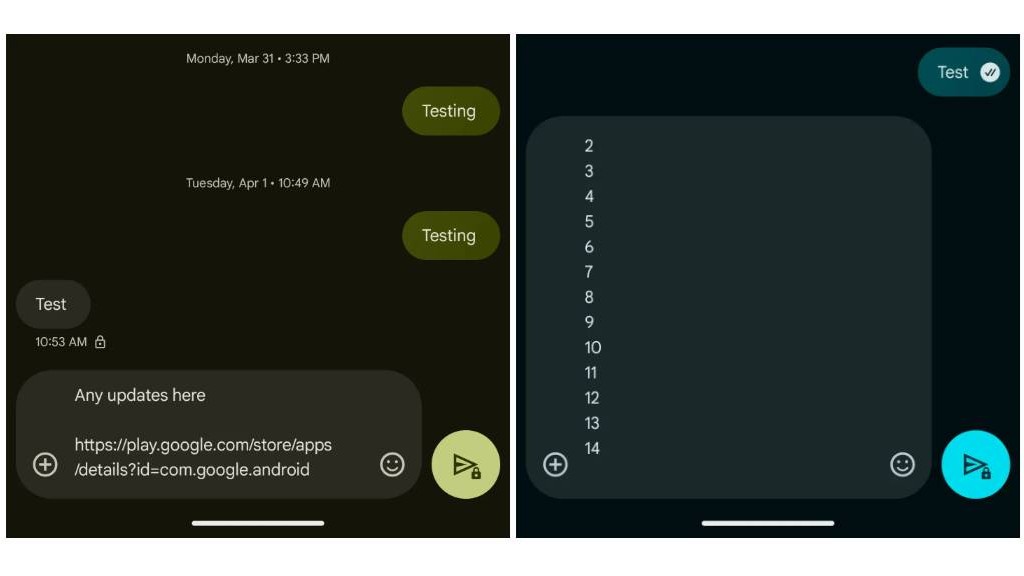

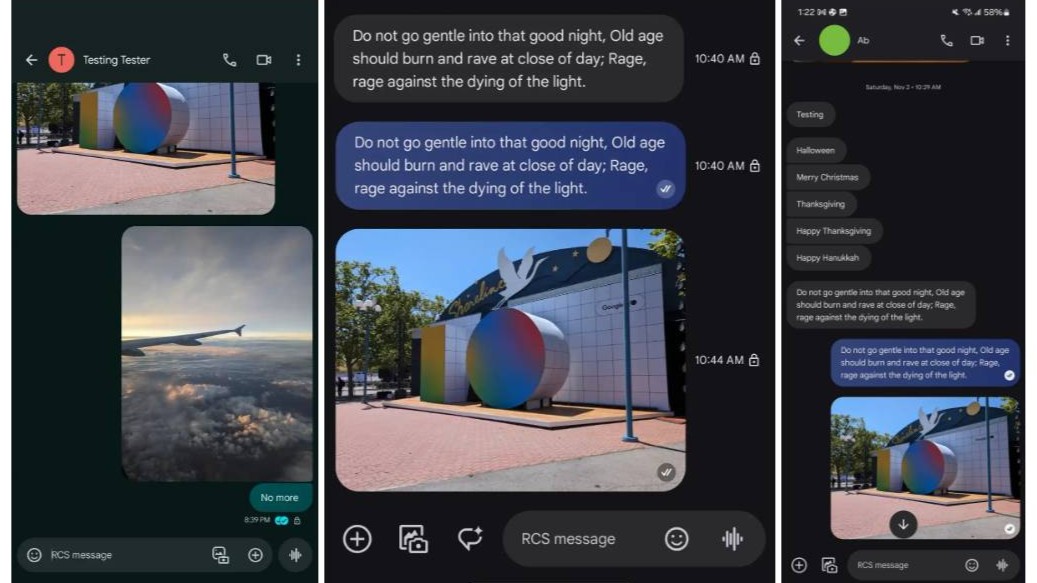

Google Messages is testing some useful upgrades – here are 5 features that could be coming

- Google is testing even more new features in its Messages beta app

- These include an expanded 14-line message view and new RCS message labels

- While these are still in beta testing, they could start rolling out to users this month

Over the past couple of months, Google has been doubling down on eradicating all traces of Google Assistant to make Gemini its flagship voice assistant, but amidst the organized Gemini chaos, Google has been paying a lot of attention to improving its Messages app, giving it some much-needed TLC.

It’s safe to say that the new revisions to the Google Messages app have significantly improved its UI. Its new snooze function for group chats is one welcome example, but Google is still in its beta testing era. For a while, Google was experimenting with an easier way to join group chats, following in WhatsApp’s footsteps. Now, it’s testing five more features that could make up the next wave of Google Messages upgrades this month.

Although these features are in beta, there’s been no comment on whether they’ll be officially rolling out to users. With that said, we’ll be keeping an eye out for any further updates.

Google expands its 4-line text field limit

Just a few weeks ago, we reported on a new upgrade found in Google Messages beta indicating that Google would get better at handling lengthy text messages.

For a while, Google Messages users have been restricted to a four-line view limit when sending texts, meaning that you would need to scroll to review your entire message before sending. This is particularly frustrating when sending long URL links.

But that could soon be a thing of the past, as 9to5Google has picked up new beta code that reveals an expanded message composition field on the Pixel 9a that now reaches up to 14 lines.

New RCS labels

Recently, Google has been testing new in-app labels that could distinguish whether you’re sending an SMS or RCS message.

Thanks to an APK teardown from Android Authority, the labels found in beta suggest that soon you’ll be able to see which of your contacts are using RCS in Messages, adding a new RCS label to the right side of a contact’s name or number.

Unsubscribe from automated texts

This is a feature we’re quite excited to see, and we’re hoping for a wider rollout this month. A few weeks ago, an unsubscribe button was spotted at the bottom of some messages, which could give users an easier way of unsubscribing from automated texts and even the option to report spam.

When you tap this, a list of options will appear asking you for your reasons for unsubscribing, which include ‘not signed up’, ‘too many messages’, and ‘no longer interested’ as well as an option for ‘spam’. If you select one of the first three, a message reading ‘STOP’ will be sent automatically, and you’ll be successfully unsubscribed.

Read receipts get a new look

Google could introduce another revamp of how you can view read receipts in the Messages app. In November 2024, Google tested a redesign of its read receipts that moved the checkmark symbols, which used to appear underneath sent messages, inside the message bubbles.

In January, Google tested another small redesign introducing a new white background, which could roll out soon, and while this isn’t a major redesign, it’s effective enough to make read receipts stand out more.

Camera and gallery redesign, and sending ‘original quality’ media

We first noticed that Google Messages was prepping a new photo and video quality upgrade. In March, more users started to notice a wider availability, but it’s still not yet fully rolled out, meaning it could be one of the next new updates in the coming weeks.

Essentially, Google could be rolling out a new option that allows you to send media, such as photos and videos, in their original quality. This will give you the choice of the following two options:

‘Optimize for chat’ - sends photos and videos at a faster speed, compromising quality.

‘Original quality’ - sends photos and videos as they appear in your phone’s built-in storage.

OpenAI promises new ChatGPT features this week – all the latest as Sam Altman says ‘we've got a lot of good stuff for you’

Another week, another OpenAI announcement. Just last week the company announced ChatGPT would get a major memory upgrade, and now CEO Sam Altman is hinting at more upgrades coming this week.

On X (formerly Twitter), Altman wrote last night, "We've got a lot of good stuff for you this coming week! Kicking it off tomorrow."

Well, tomorrow has arrived, and we're very excited to see what the world's leading AI company has up its sleeve.

We're not sure when to expect the first announcement, but we'll be live blogging throughout the next week as OpenAI showcases what it's been working on. Could we finally see the next major ChatGPT AI model?

Good afternoon everyone, TechRadar's Senior AI Writer, John-Anthony Disotto, here to take you through the next few hours in the lead up to OpenAI's first announcement of the week.

Will we see something exciting today? Time will tell.

Let's get started by looking at what Sam Altman said on X yesterday. The OpenAI CEO hinted at a big week for the company, and it's all "kicking off" today!

"we've got a lot of good stuff for you this coming week! kicking it off tomorrow." (Sam Altman on X, April 13, 2025)

One of the announcements I expect to see this week is GPT-4.1, the rumored successor to GPT-4o. Just last week, a report from The Verge said the new AI model was imminent, and considering Altman's tweet, it could well arrive today.

Whatever it ends up being called, the new model could set a new standard for general-use AI models as OpenAI's competitors, like Google Gemini and DeepSeek, continue to catch up with, and sometimes surpass, ChatGPT.

ChatGPT was the most downloaded app in the world for March, surpassing Instagram and TikTok to take the crown.

That's an impressive feat for OpenAI's chatbot, which has become the go-to AI offering for most people. The recently released native 4o image generation has definitely helped increase the user count, as I've seen more and more of my friends and family jump on the latest trends.

Whether that's creating a Studio Ghibli-esque image, an action figure of yourself, or turning your pet into a human, ChatGPT is thriving thanks to its image generation tools.

Speaking of the pet-to-human trend, I tried it earlier, and I was horrified by the results.

If you've not been on social media over the weekend, you may have missed thousands of people sharing images of what their dogs or cats would look like as humans.

This morning I decided to give it a go, and then I went even further and converted an image of myself into a dog. Let's just say this is one of my least favorite AI trends of 2025 so far, and I don't want to think about my French Bulldog as a human ever again!

There's no information on when to expect OpenAI's announcement today, but based on previous announcements, we should get something around 6 PM BST / 1 PM ET / 10 AM PT.

Your guess is as good as mine on whether we'll get daily announcements this week, like the 12 Days of OpenAI announcements in December.

We'll be running this live blog over the next few days, so as soon as Altman and co. make an announcement, you'll get it here. Stay tuned!

One hour to go?

Around an hour to go until the expected OpenAI announcement. What will it be?

Could we see GPT-4.1? Or will we see some new agentic AI capabilities that take OpenAI's offerings to a whole new level?

Last week's memory upgrade was a huge deal. Will today's announcement top that, or are we getting excited over a fairly minimal update? Stay tuned to find out!

Hello, Jacob Krol – TechRadar's US Managing Editor News – stepping in here as we await whatever OpenAI has in store for us today.

As my colleague John-Anthony has explained thus far, CEO Sam Altman teased, "We've got a lot of good stuff for you" this week, and it's a waiting game now.

OpenAI drops GPT-4.1 in the API, and it's purpose-built for developers

This one is for developers, that is, unless OpenAI has something else up its sleeve for later today. The AI giant has just unveiled GPT-4.1 in the API, a model purpose-built for developers. It's a family consisting of three models: GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano.

While Sam Altman is not on the live stream for this unveiling, the OpenAI team – Michelle Pokrass, Ishaan Singal, and Kevin Weil – is walking through the news. GPT-4.1 is specifically designed for coding, following instructions, and long-context understanding.

It seems that with instruction following, the focus is on getting the models to understand the prompt as intended. I've experienced this with the standard GPT-4o mini in ChatGPT Plus, but based on the evaluation metrics, GPT-4.1 in the API is much better at following instructions, since it's trained for it.

Ideally, the result will be a much more straightforward experience, where you won't need to converse further to get the results you were after.

On the live stream, the OpenAI team walks through several demos that show how GPT-4.1 focuses on its three specialties – coding, following instructions, and long-context understanding – and that it's less degenerate and less verbose.

OpenAI says these are the fastest and cheapest models it has ever built, and all three are available in the API right now for anyone with access.

Sam Altman, OpenAI's CEO, wasn't on the livestream today but still took to X (formerly Twitter) to discuss the updates. He later retweeted a few others, including one user who said GPT-4.1 has already helped with workflows.

Again, it's not available to the general consumer, but it is out in the API already with major enhancements promised. So, while you might not encounter it, some websites or services you use might be employing it.

“GPT-4.1 (and -mini and -nano) are now available in the API! these models are great at coding, instruction following, and long context (1 million tokens). benchmarks are strong, but we focused on real-world utility, and developers seem very happy. GPT-4.1 family is API-only.” – Sam Altman on X, April 14, 2025

Yesterday's announcements

Just in case you missed yesterday's major announcements: OpenAI unveiled GPT-4.1 in the API, a model family purpose-built for developers consisting of GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano.

These models are expected to become available later this year, replacing GPT-4o. While consumers won't see any immediate benefits, there's plenty to be excited about going forward.

It's unclear whether OpenAI will announce more updates today, but there's a chance, especially given Sam Altman’s suggestion that this could be a full week of announcements.

Either way, stay tuned to the live blog – we’ll update it as soon as we hear anything new in the world of ChatGPT.

Can’t securely log in to your PC using facial recognition anymore? New Windows 11 update patch is likely to blame – if you can install the update at all

- Windows 11 24H2’s April patch is causing some odd bugs

- There are multiple problems with Windows Hello logins

- The update is also completely failing to install for some people

Windows 11 24H2’s latest patch is causing some trouble: it’s failing to install for some users (once again), and it’s also breaking Windows Hello in some cases.

Let’s start with the bug in Windows Hello, which is the feature that allows for secure logins to your PC (via facial recognition using a webcam, or other methods besides).

Windows Latest reports that the cumulative update for April 2025 (known as KB5055523) is affecting some users who rely on Windows Hello facial recognition with a privacy shutter over the webcam.

How does a facial login even work with a privacy shutter obscuring the camera? Well, it works just fine because the tech leverages an infrared sensor on the camera, which can function through a physical shutter (a plastic slider that more privacy-conscious folks use to rule out any danger of being spied on through the webcam).

The problem is that the April update appears to mess with the infrared sensor so login no longer works unless you open that privacy shutter – which is hardly ideal.

Windows Latest found the issue present on an HP Spectre laptop, also highlighting a report from a Windows 11 user who has been affected by this bug and tells us: “I’ve reproduced the issue several times, with several clean installs. The webcam we’re using is the Logitech Brio 4k, with the latest firmware and drivers, which is compatible with Windows Hello.”

There are other reports on Reddit, too, such as this one: “Just wanted to see if anyone else is experiencing issues with Windows Hello face recognition since the latest update (KB5055523). Before, I had the camera (Brio 4K) covered and it would work fine. Now, I have to remove the privacy cover for it to recognize me. It doesn’t seem to be able to unlock with the infrared camera alone now.”

There are a number of replies from folks echoing that they have the same bug affecting their logins. The only solution is seemingly to uninstall the April update (which strongly suggests this is a problem caused by this latest patch from Microsoft).

That said, Windows Latest does offer a possible workaround, which involves heading into Device Manager (just type that into the Windows search box and click on it). In there, click on the little arrow next to ‘Cameras’, and you might see two cameras listed: the IR (infrared) camera and the RGB/color (normal) camera. Right-click on the latter and disable it, but leave the infrared camera on. Now, if you set up Windows Hello again, it may work correctly.

Windows Latest doesn’t guarantee this, though, and says it will only do the trick for some Windows 11 users. If you’re desperate for a fix, you can give this a whirl, as you could be waiting a while for an official solution from Microsoft. Just don’t forget that you will, of course, have to re-enable the main (RGB) camera after any fix is applied.

Interestingly, in the official patch notes for the April update, Microsoft does flag a problem with Windows Hello – but not this one. Rather, this is a separate issue, albeit one that shouldn’t affect those running Windows 11 Home.

Microsoft explains: “We’re aware of an edge case of Windows Hello issue affecting devices with specific security features enabled … Users might observe a Windows Hello Message saying ‘Something happened and your PIN isn't available. Click to set up your PIN again’ or ‘Sorry something went wrong with face setup.’”

Microsoft provides instructions on what to do if you encounter this particular problem via its April patch notes (under known issues).

However, this bug only affects those using System Guard Secure Launch (or Dynamic Root of Trust for Measurement), which is only supported on Windows 11 Pro (or enterprise editions). So as noted, if you’re on Windows 11 Home, you shouldn’t run into this hiccup.

Windows Latest also picked up a second major problem, namely installation failures (which are nothing new). These seem to be happening again with the April 2025 update, with the usual meaningless error messages accompanying an unsuccessful attempt (hexadecimal error codes such as ‘0x80070306’).

The tech site notes that it has verified reports of the update failing to install in this way, or its progress getting stuck at either 20% or 70% in some cases, never actually completing. Windows Latest informs us that Microsoft is seemingly investigating these installation failures.

There are other reports of this kind of problem on Microsoft’s Answers help forum (along with some possible solutions from a customer service rep, some of which have reportedly worked for some, but not for others). In the case of the original poster of this thread, the update repeatedly failed to install and showed as ‘pending restart’, which is an odd twist in the tale.

Overall, then, there’s some degree of weirdness going on here, as the Windows Hello failures are rather odd, as are some of these installation glitches. Am I surprised at that, though? No, because Windows 11 24H2 has produced some very off-the-wall bugs (and general bizarreness besides) since it first came into being late last year.

If you’re in the mood for some highlights of the more extreme oddities that have been inflicted on us by version 24H2, read on…

1. Language swap goes completely off the rails

Some of the most peculiar bugs I’ve ever seen have surfaced in Windows 11, and particularly in the 24H2 update.

One of my favorites – if that’s the right word (it probably isn’t if you were affected by this problem) – is the spanner in the works somewhere deep in Windows 11 that caused the operating system to be displayed as a mix of two different languages. This happened when some users changed the language in Windows 11 from one choice to another, after which a good deal of the operating system’s menus and text remained in the original language. Confusing? No doubt. How did it even happen? I haven’t got a clue.

2. Baffling deletion of Copilot

Last month, Microsoft managed a real doozy by allowing a bug through that actually ditched Copilot. Yes, at a time when the software giant is trying desperately to promote its AI assistant and rally support, last month’s patch uninstalled the Copilot app for some Windows 11 users. That was highly embarrassing for Microsoft, especially as some folks felt it was the first Windows bug they were pleased to be hit with.

3. See more – or less – of File Explorer

A really memorable one for me was late last year when Windows 11 24H2 was beset by a problem whereby a menu in File Explorer (the folders on your desktop) flew off the top of the screen. Yes, the ‘See more’ menu offering more options to interact with files went past the border of the screen, so most of it wasn’t visible – you saw less of it, ironically. And that meant you couldn’t use those non-visible options.

Again, how did Microsoft break a major part of the Windows 11 interface in such a fundamentally crude way? Your guess is as good as mine, but I suspect the transition to a new underlying platform for Windows 11 24H2 had something to do with it. (This bug has only just been fixed, by the way, and that happened with this most recent April update).


Google’s surprise Android XR glasses tipped to land in 2026 – and my only complaint is they aren’t launching sooner

- Google revealed prototype AR glasses at TED

- Those glasses are apparently being made by Samsung under Android XR

- The AR glasses are reportedly set to release in 2026

The Ray-Ban Meta smart glasses have been a wild success for Meta, and it appears that Samsung and Apple have taken notice, as the two companies plan to launch their own Android XR and Apple glasses, respectively.

We got our first peek at the Android XR glasses last week during a TED talk hosted by Google's head of augmented reality and extended reality, Shahram Izadi.

The specs are a significantly smaller package than the Project Moohan Android XR headset Google and Samsung are collaborating on (via Axios) – and you can see the headset behind him on a shelf to get some sense of the size difference.

In the surprise demo, Izadi used the glasses to perform a few tasks including live translation from Farsi to English, scanning a book using its in-built cameras, and helping them find their keys using a feature called ‘Memory.’ They also pack in a display so the wearer can receive information visually, not just via audio cues.

Now The Korea Economic Daily is claiming this prototype is Samsung-made under the duo’s existing Android XR partnership – and it’s slated to launch next year.

While leaks should be taken with a pinch of salt, we’ve heard plenty of reports that Meta plans to launch its Ray-Ban Meta smart glasses with a display later this year, so it’s not out of the question that an Android XR device could follow soon after.

What’s more, given how impressive the prototype seems to be already – being able to perform a slew of tasks in a sleek package – it again seems likely that Google and Samsung aren’t far from having a consumer-ready product. I just hope they aren’t as pricey as Meta’s glasses are rumored to be.

What's Apple up to?

Apple is also keen to create lightweight AR specs, according to Bloomberg's Mark Gurman (behind a paywall). People familiar with Apple's AR plans say the project has CEO Tim Cook's full attention: “It’s the only thing he’s really spending his time on from a product development standpoint.”

Though according to Gurman, Apple’s glasses might still be a few years away.

Because true AR glasses aren't yet achievable in Apple's mind (according to people in the know) it apparently first wants to focus on equipping its Apple Watch and AirPods with AI cameras to achieve some of the functionality of the Ray-Ban Meta AI glasses we have today.

Given Apple’s struggles with AI on the iPhone 16, it makes sense that it wouldn’t currently want to focus on AI smart glasses, though it does feel it could be late to the glasses party considering how soon Samsung, Google and Meta are expected to be launching specs.

As with all leaks and speculation we’ll have to wait and see what Apple has up its sleeve, but it could benefit from the same advantage Google will – one that Meta lacks.

The smartphone synergy strategy

The most striking part of Google’s prototype is that the XR device looks an awful lot slimmer than other prototype AR glasses we’ve seen, such as the Meta Orion AR specs and Snap’s 5th-gen Spectacles developer kit. That’s because, according to Izadi, it leverages Google’s biggest advantage: an Android phone.

He explained, "These glasses work with your phone, streaming back and forth, allowing the glasses to be very lightweight and access all of your phone apps."

Because they lack their own phone brands to rely on, Meta and Snap would ideally want users to rely solely on their own standalone platform – one which they have complete control over to introduce the apps and experiences they most want to build. Control they don’t have when merely piggybacking off Android via phone apps.

Google, seeing as it runs the Android ecosystem, likely isn’t keen for people to abandon its phones quite yet.

So its glasses are instead designed to leverage the processors in your phone rather than a sophisticated chipset built into the glasses themselves (though we suspect they still have a little onboard processing power for simple display and camera operations).

This allows Google to remove some of the bulk a beefy chipset requires – such as sophisticated cooling and a bigger battery – to create a slimmer end product without sacrificing overall performance (at least in theory).

Apple could leverage a similar smartphone-to-glasses relationship with its own AR specs, though Meta and Snap’s third-party approach has one advantage in that they are system agnostic. You can use whichever smartphone you want, or you might not even need a smartphone at all.

Hopefully Google’s symbiotic relationship between its glasses and phones results in smart specs that aren’t as pricey as its competitors, as they don’t need to pack in as many components. For example, Meta’s rumored upcoming XR glasses with a screen are expected to cost as much as $1,400 (£1,100 / AU$2,200).

For now, we'll need to wait and see, but Google and Samsung's smart specs look promising, and if they really are set to launch next year, 2026 can't come soon enough.

Apple Vision Pro 2 tipped to fix two of its biggest flaws – here's why I might actually buy one

- A new report claims Apple is developing two new Vision Pro headset models

- One version will apparently be lighter and more affordable

- The other will connect to a Mac for a lower-latency experience

Despite its technical brilliance, Apple’s Vision Pro headset has struggled to sweep consumers off their feet, with serious questions being raised about the future of the virtual reality (VR) headset. In spite of this potential crisis, though, Apple apparently has a plan to right the ship and get the Vision Pro back on track.

At least, that’s according to Bloomberg journalist Mark Gurman’s latest Power On newsletter. There, Gurman says that Apple is working on two new Vision Pro models that could shake up the device and potentially give it a new foothold in the industry.

Neither of these models will be a simple refresh. While Gurman says that Apple had previously considered a few minor tweaks – like upgrading the headset’s chip from the M2 to the M5 – Apple is apparently now mulling more ambitious plans.

Instead, Apple is “now looking to go further,” Gurman says, with one device that is both cheaper and more lightweight than the current Vision Pro, which should make it both more accessible to consumers and reduce the neck strain that can sometimes occur after long periods of use.

The second Vision Pro in development is “more intriguing,” Gurman believes. Supposedly, this model will connect directly to a Mac, which will allow for greatly reduced latency. Some Vision Pro headsets are being used in surgery and in flight simulators, Gurman says, which are two areas where reduced latency will be incredibly important.

Addressing two key problems

Interestingly, Gurman likens the second Vision Pro to a product Apple reportedly canceled in January: a set of lightweight glasses outfitted with augmented reality (AR) capabilities that would be tethered to a Mac. The ultimate goal for Apple is to create an entirely wireless pair of AR glasses that a user can wear all day, but it will take many years before that device is ready for prime time.

Still, even if the next Vision Pro isn’t as lightweight as a pair of glasses, anything that reduces its weight will be a welcome change. I don’t own a Vision Pro myself, and one of the main reasons is that I’ve heard the stories of the pain caused by wearing it for too long. If I’m going to make a significant investment in a product like this, I want to be able to use it without risking soreness after just a few hours’ usage.

The other reason I’ve steered clear is the eye-watering price, with $3,500 feeling incredibly steep when the Vision Pro is still in its early stages. I know I’m not alone here, with multiple reports suggesting that sales of the device have been very slow.

If Apple can address both of those problems, I might finally be tempted to fork out for a Vision Pro, particularly for its Ultrawide Mac Virtual Display mode. We've previously described that as a "lightbulb moment" for the device, since it gives you a massive 32:9 aspect ratio – the equivalent of two displays side-by-side. That would definitely be more enticing on a headset that's more comfortable, and also potentially not too much more expensive than buying an extra monitor.

I’m not entirely convinced yet, but at least Apple seems to be heading in the right direction.

Microsoft warns that anyone who deleted mysterious folder that appeared after latest Windows 11 update must take action to put it back

- The April update for Windows 11 24H2 created a mysterious empty folder

- Some advised this could be deleted, but this isn’t actually the case

- If you delete the ‘inetpub’ folder, a security fix applied by the April patch won’t work, so you need to reinstate it if you removed the folder

Windows 11 24H2 users who were confused by a mysterious empty folder appearing on their system drive after applying the latest update for the OS should be aware that this is not a bug, but an intentional move – and that said folder shouldn’t be deleted.

In case you missed it, last week Windows 11 24H2 received its cumulative update for April 2025, and it created an ‘inetpub’ folder that was the source of some bewilderment or annoyance for those who noticed it.

You may also recall that some folks advised that it was fine to just delete the folder, not an unreasonable conclusion to reach seeing as it was empty, didn’t appear to do anything, and was related to Microsoft’s Internet Information Services (IIS) web server software for developers (and was appearing for those who didn’t have IIS installed).

Still, at the time, I advised that you remove it at your own risk and that it might be best left alone, seeing as it was empty and appeared harmless (and also just because you never quite know what’s going on with Windows). It seems I was right, as Microsoft has now warned against removing the folder, as noted at the outset.

Microsoft told Windows Latest that the folder is created as part of a security fix for a vulnerability that “can let local attackers trick the system into accessing or modifying unintended files or folders.”

In its advisory for this security patch, Microsoft notes: “After installing the updates listed in the Security Updates table for your operating system, a new [inetpub folder] will be created on your [system drive]. This folder should not be deleted, regardless of whether Internet Information Services (IIS) is active on the target device. This behavior is part of changes that increase protection and does not require any action from IT admins and end users.”

In short, it doesn’t matter whether you use IIS or not; you need to leave this folder alone. Without the folder, the security hole will remain open in Windows 11, offering attackers a potential opportunity to compromise your PC (at least if they are local to the device, meaning they already have some level of access to it).

This is an odd affair, and I assumed this was a bug when I wrote about it last week, since it seemed like a weird way of implementing a security fix. Of course, what Microsoft should have done is make it very clear in the release notes for this patch that it creates the folder and that users should leave it alone (while noting that it’s empty and harmless).

As I already observed, though, it’s best to proceed with caution when tinkering with Windows. What’s particularly strange about this affair is that there have been reports in the past of this same folder being created, so maybe this was part of the implementation of security patches in those cases too – who knows.

What about if you’ve already deleted the folder? Well, in that case, you need to reinstate it, and Windows Latest is on hand with the solution (as advised by Microsoft). You need to open the Control Panel, then go into Programs > Programs and Features. On the left, you’ll see an option to ‘Turn Windows features on or off’ – click on this. Scroll down the alphabetical list of features and find ‘Internet Information Services’ and tick the box next to this, then click on the OK button.

The folder will now be recreated. If you did delete it last week, be sure to complete these steps.


Some of Siri's delayed Apple Intelligence features are tipped to arrive with iOS 19

- Siri is reportedly getting more AI later this year

- It's not clear if all the delayed features will be added

- Deeper app integrations are tipped to appear

Apple has got itself into quite the tangle with Siri and Apple Intelligence, with promised AI upgrades now officially delayed and the company taking plenty of criticism for it – but at least some of these delayed features could make an appearance later this year.

In a New York Times article recapping some of Apple's recent AI woes, including internal friction over future plans, there's a mention that Siri would get certain upgrades "in the fall" in the US – so probably September time, with the iPhone 17 and iOS 19.

"Apple hasn’t canceled its revamped Siri," the article states. "The company plans to release a virtual assistant in the fall capable of doing things like editing and sending a photo to a friend on request, three people with knowledge of its plans said."

That's not a whole lot to go off – are we just getting some extra photo editing capabilities, or the full suite of delayed features? Ultimately, Apple wants to get Siri on a par with ChatGPT or Gemini, but that may not happen in 2025.

Delayed features

The photo editing feature mentioned in the NYT article is one of the Apple Intelligence upgrades promised back in 2024: the ability for Siri to dig deeper into iPhone apps and take actions on behalf of users via voice commands (known as App Intents).

That's just part of the picture though. Apple has also said Siri will get much smarter in terms of context, understanding more about what's happening on your iPhone and more about you (by tapping into some of your personal data, in a private and secure way).

Once Siri gets those features, it'll be better able to compete with its AI chatbot rivals. Just this week, for example, ChatGPT added a memory upgrade that means it can tap into your full conversation history for answers, if needed.

Apple Intelligence features already added to Siri include support for more natural language in conversations, and improved tech help support for your devices. As for iOS 19, expect to hear more about the software update at the start of June.

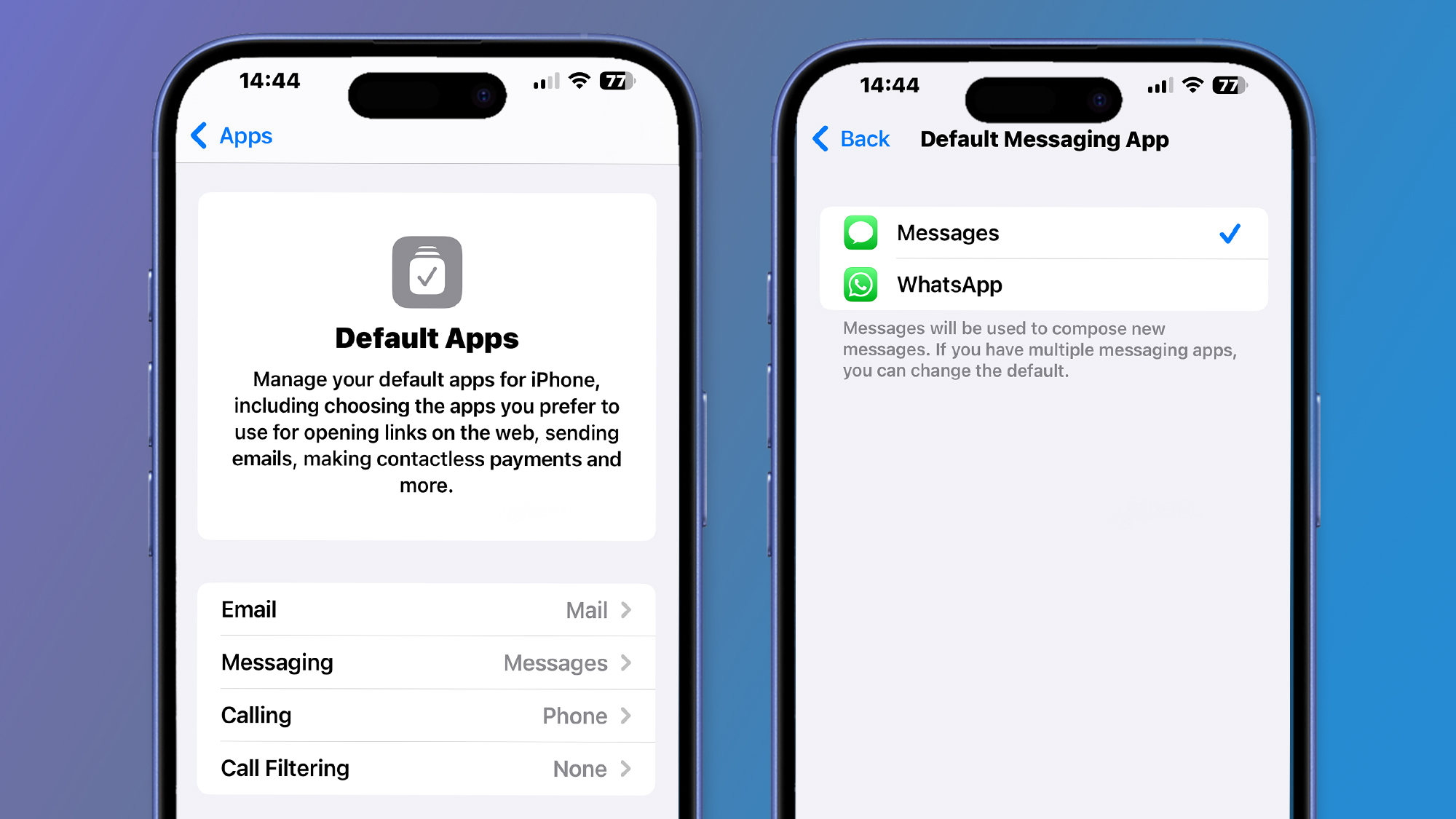

WhatsApp has just dropped these 9 new features – including 2 that I'm not happy about

- WhatsApp has just rolled out a plethora of new features and functions for chats and calls

- This includes zoom for video calls, scanning and sending documents directly from WhatsApp, and a new way to prioritize group chat notifications

- Out of all the new additions however, there are two that haven't sat right with users

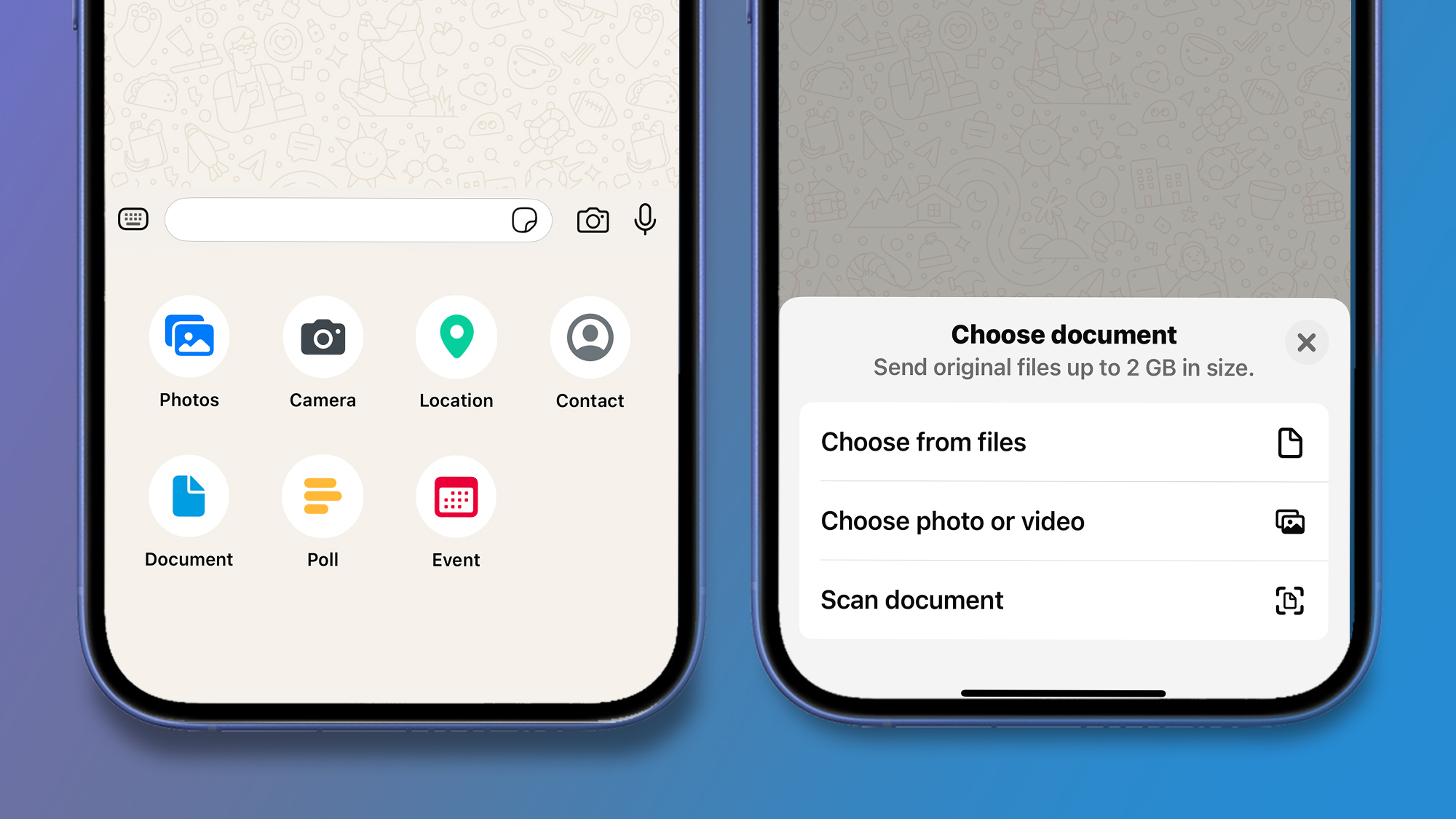

The aftermath of Apple’s latest iOS 18.4 update is still lingering, and we’re all coming to grips with its roster of new features. With that said, WhatsApp has also just dropped its own big update, announcing brand new additions to its existing call, chats, and channels sections.

Now I say ‘big update’, but that’s a bit of an understatement, as WhatsApp’s list of new upgrades is extensive and includes minor new features such as smoother video call quality and voice message transcripts on Channels. However, there are nine stand-out features we think are interesting additions to the messaging platform, though two of them have rubbed us the wrong way.

A plethora of new functions

First of all, despite how small-scale some of the new features are, there’s a handful that could make a significant impact on your WhatsApp experience, starting with its simple pinch-to-zoom feature in video calls.

It’s taken WhatsApp a considerable amount of time to catch up to the likes of FaceTime where users have been able to zoom for a while, but regardless of the delay on WhatsApp’s part, this is a benefit I know frequent video call users like myself will reap. In addition to this new call feature, WhatsApp now allows you to add someone to an ongoing 1:1 call right from a chat thread.

Calls aside, WhatsApp has brought even more functions to its chat section. If you’re an iPhone user who gravitates towards WhatsApp rather than Apple’s own iMessage service, you can now set WhatsApp as your default messaging app, provided you’ve installed the latest version of iOS. All you have to do is go to your iPhone Settings, select Default Apps, and choose WhatsApp.

It doesn’t stop there. WhatsApp is doubling down on its integration with your daily and personal life, introducing events in 1:1 chats as well as groups, plus a new feature that lets you scan and send documents directly from WhatsApp on iPhone.

The last two helpful features are video notes (like voice notes) for channels, which let admins instantly record and share videos up to 60 seconds long, and highlighted notifications in groups, which give you an easier way to prioritize your group chat notifications.

Overall, the new additions to WhatsApp pack a lot of value, even though some may have a slightly smaller impact than others. But of course, there are two other functions that WhatsApp has added that haven’t quite sat well with users – one of which made quite a bad impression this week.

Meta AI is interfering once again

If you’ve been out of the loop, WhatsApp introduced a new Meta AI button in EU regions that you can’t remove from the app’s UI, sparking an uproar among angry WhatsApp users.

Essentially an AI chatbot feature, Meta’s new button in WhatsApp is a place users can go to for a number of functions, like answering questions or generating content. But the function itself isn’t necessarily what users are mad about; it’s more to do with the fact that they haven’t been given the option to remove it.

Quietly introduced in WhatsApp’s recent wave of new functions, there’s now a way to see who in your group chats is online, thanks to a feature that shows you the real-time status of each member. This is obviously less of a headache than the untouchable Meta AI button, but it means there's a lot more pressure to be swift in your replies.

Don't get me wrong, I'm a punctual text-replier, but we all have those days where we just don't have the energy to deal with our group chats – especially when plan-making goes horribly wrong, or you just don't want to reply to a particular person. This new feature makes it easier for your friends and family to call you out, and WhatsApp knows exactly what it's doing.
